This is a continuation of my Gnome 3 notes.

Disclaimer - editing system files can break your system. All information is presented as-is and without warranty.

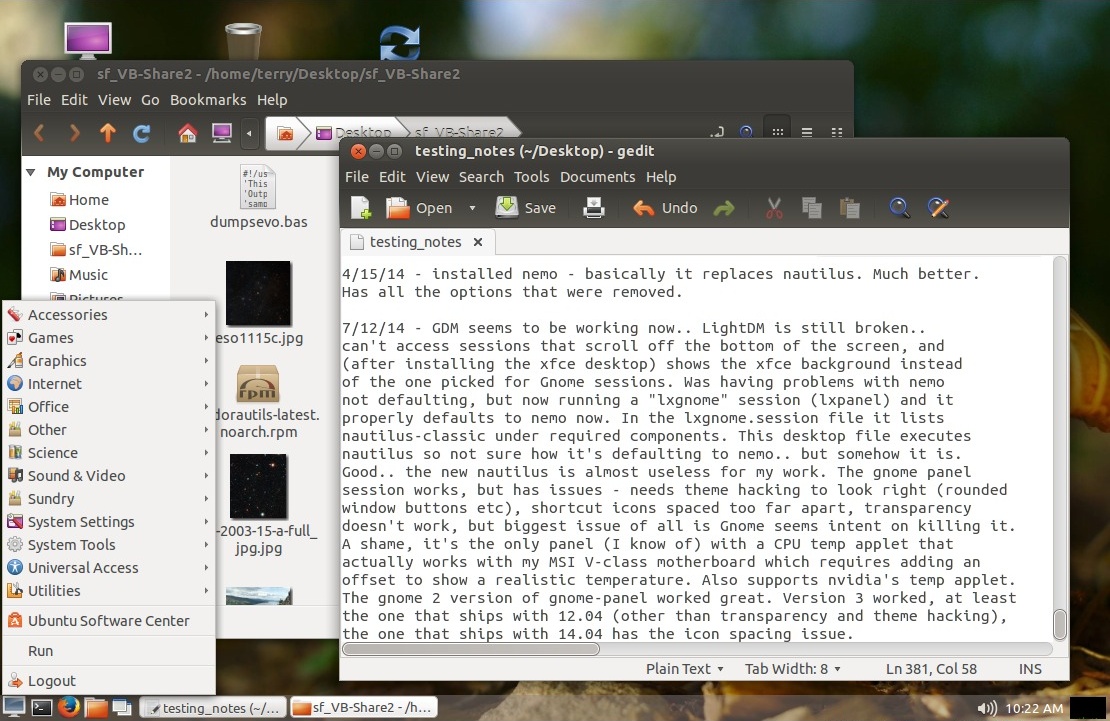

5/19/12

Summary of Configuration Changes I made to Ubuntu 12.04

The default Unity and Gnome Shell interfaces don't work well for

me, mainly because of a lack of a normal app menu and a task bar

that supports window buttons. Unity is somewhat better but its

taskbar icons represent apps, not windows, and more often than not

I have several instances of the same app running (terminal

windows, file manager windows, edit windows etc). This situation

can be corrected by installing a traditional panel such as

lxpanel, which with some configuration works with both Gnome Shell

and Unity. Other options that provide an old-style Gnome-based

interface with the nautilus desktop include Gnome Classic

(gnome-panel), Cinnamon, Mate, and creating custom Gnome sessions.

Non-Gnome interfaces that can be installed include KDE, LXDE and

XFCE. These are all just different interfaces to the same

underlying operating system (in Linux the operator GUI is really

just another app), for the most part they all run the same apps

and can be installed in parallel. The desired GUI can be selected

from the login screen.

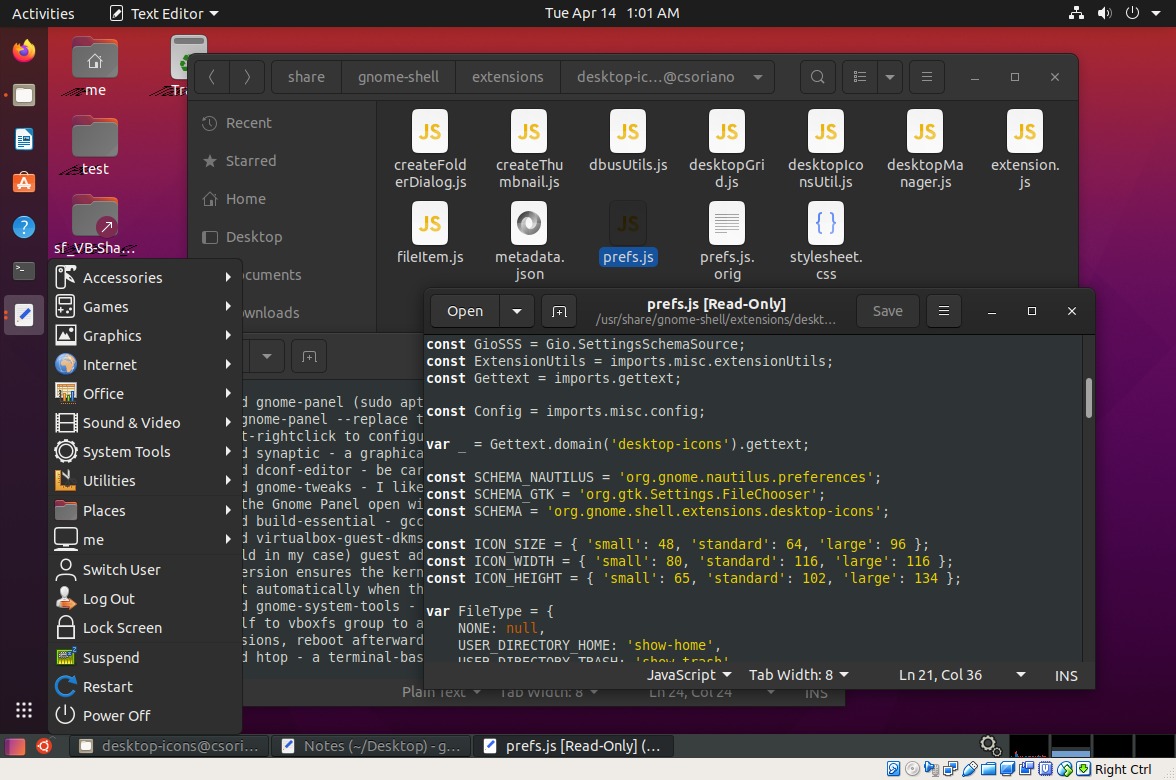

Gnome 3 makes it fairly easy to create custom sessions by adding

files to the /usr/share/gnome-session/sessions and the

/usr/share/xsessions directories. The xsessions directory contains

.desktop files that are made available from the login screen and

specify which .session file to run from the sessions directory.

Not all components (including lxpanel) can be run directly from a

session file, so I add a script to my autostart programs that runs

additional components based on the contents of the DESKTOP_SESSION

variable, which is set to whichever .session file is running. When

playing around with custom sessions it's a good idea to (at least

at first) set up the system so that it does not automatically log

in, otherwise if something goes wrong you might end up in a

non-functional session with no easy way to change to a working

session - if that happens you can boot to recovery mode, navigate

(cd) to /usr/share/xsessions and rename (mv) the non-working

.desktop file. It also helps to create desktop launchers that run

the Gnome logout/restart tool, a terminal and a file manager (or

enable the "Home" icon) so if the panel fails to load you can

still operate the session to fix it or get out of it. Gnome 3

removed the option to create desktop icons but the function can be

accessed by installing gnome-panel and running the gnome-desktop-item-edit tool (I use a nautilus script to make it easy to run).
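The recovery step described above can be sketched as a small shell helper. This is only a sketch: disable_session is a made-up name, and the .disabled suffix is an arbitrary choice (any name that no longer ends in .desktop should do).

```shell
#!/bin/bash
# Sketch: hide a broken session from the login screen by renaming its
# .desktop file. disable_session is a hypothetical helper name.
disable_session() {
    local name="$1"
    local dir="${2:-/usr/share/xsessions}"
    # rename so the file no longer ends in .desktop
    mv "$dir/$name.desktop" "$dir/$name.desktop.disabled"
}

# From a recovery-mode root shell (if the file system turns out to be
# mounted read-only, remount it first with: mount -o remount,rw /):
#   disable_session lxgnome
```

Renaming the file back restores the session entry.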

This material assumes basic knowledge of how to use a command line, navigate the file system, and create scripts. Scripts must be marked executable before they can be run. To create a new script, right-click in the directory you want to create the script in and select Create Document then Empty File, rename the file to what the script filename should be, then right-click the script file and select Properties, click on the Permissions tab, and check Allow executing as a program. By default when executable

scripts are double-clicked a dialog is displayed giving the

options to Run in a terminal, Display, Cancel or Run - to edit

scripts select Display. Only scripts under your home directory can

be directly edited, scripts in system directories require root

permissions to create and edit. Two ways to do that - one way is

after navigating to the directory in a terminal enter gksudo gedit

file_to_edit (presently there's a bug where it creates a second

empty but modified tab, click it gone with no save, see below for

a workaround) - another way is to make a nautilus script that runs

nautilus in root mode then you can create/edit system files

normally. Be very careful when editing files in system

directories, a typo can result in a non-booting system - it's a

good idea to know how to use recovery mode and the command line to

fix things when you break them (nano can be used to edit files

without a GUI). Note - the nautilus file manager hides the true

filename of .desktop files, to see and edit these use a terminal

and run the editor directly - if in a system directory then use

gksudo gedit filename.desktop to create or edit them.
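The same create-and-mark-executable steps can also be done from a terminal. A minimal sketch, assuming a hypothetical script name myscript.sh; make_script is a made-up helper name, not a system command.

```shell
#!/bin/bash
# Sketch: create a new script file and mark it executable.
# make_script is a hypothetical helper name.
make_script() {
    local path="$1"
    # start the file with a shebang line so it runs under bash
    printf '#!/bin/bash\n' > "$path"
    # same effect as checking the execute box on the Permissions tab
    chmod +x "$path"
}

# Example: make_script ~/myscript.sh
# then edit it with: gedit ~/myscript.sh
```

For a script in a system directory the same commands would need root, for example by running them under sudo.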

Tinkering with the guts of the system can be daunting at first, but it becomes fairly easy after some practice. Main rule is if you don't know what a file does, don't mess with it. The mods in this section do not change existing files so should be safe enough even if something goes wrong, but remember there's no warranty, if you break it you get to keep all the pieces. If unsure or you don't want to take any chances then don't try it, stick with the pre-configured sessions (install gnome-panel to use Gnome Classic etc).

To get started, use Software Center to install Synaptic, Software

Center is nice for browsing but it's a whole lot easier to use

Synaptic to install packages when you already know what you want,

plus if using Unity you probably don't want it adding a launcher

for everything installed (and for some things a launcher won't

work anyway). You'll probably have to jump through some hoops at

first to enable additional repositories. Once Synaptic is

installed and all the repositories are enabled, installing

packages typically is just a matter of typing in the package name

or key word into the quick search box, checking the package,

clicking apply and confirming the changes. To duplicate something

like my setup install gnome-panel and lxpanel. Other almost

must-have packages include gnome-tweak-tool (which pulls in

gnome-shell), gconf-editor and dconf-tools.

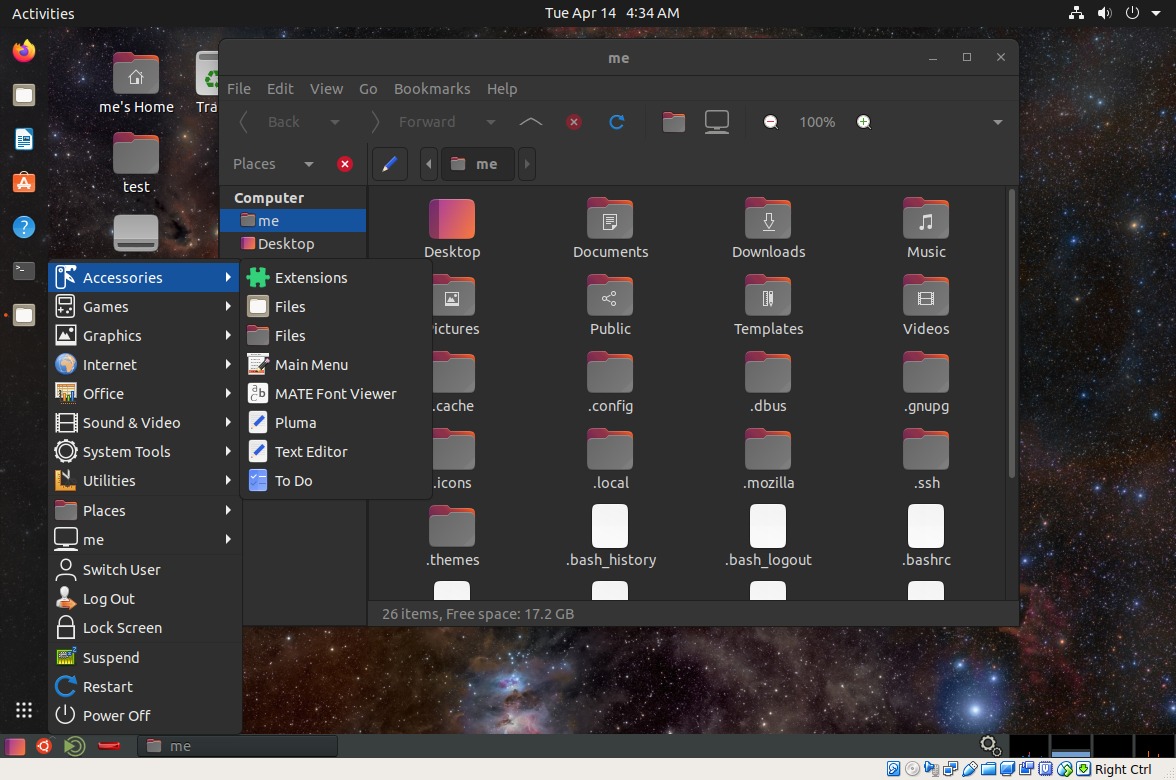

To make things easier I use nautilus scripts, which (once

enabled) can be accessed by right-clicking and selecting Scripts.

To enable nautilus scripts, open the file manager and navigate to

[your home dir]/.gnome2/nautilus-scripts (in these docs your home

dir is often specified as ~) (to access files beginning with a dot

do View then check Show Hidden Files - might want to go to

Preferences and make that permanent), then add a script. I use the

following scripts...

Terminal...

#!/bin/bash

gnome-terminal &

CreateLauncher...

    #!/bin/bash
    gnome-desktop-item-edit --create-new "$(pwd)" &

BrowseAsRoot...

    #!/bin/bash
    gksudo "nautilus $(pwd)" &

Once scripts have been added to nautilus-scripts, the Scripts

menu is enabled and contains an option to open the scripts

directory.
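Setting up the scripts directory can also be done from a terminal. A small sketch that creates the directory and installs the "Terminal" script shown above; install_terminal_script is a made-up helper name.

```shell
#!/bin/bash
# Sketch: create the nautilus-scripts directory and add the "Terminal"
# script to it. install_terminal_script is a hypothetical helper name.
install_terminal_script() {
    local dir="${1:-$HOME/.gnome2/nautilus-scripts}"
    mkdir -p "$dir"
    # write the two-line script, one argument per line
    printf '%s\n' '#!/bin/bash' 'gnome-terminal &' > "$dir/Terminal"
    chmod +x "$dir/Terminal"
}

# Example: install_terminal_script
```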

To work around the gedit bug where it creates an empty modified

edit tab, add the following system script...

gksudo gedit /usr/local/bin/mygedit (or name it however you want

- just not gedit or any other existing command name)

    #!/bin/bash
    gedit "$1" < /dev/null

With this script in place you can do gksudo mygedit file_to_edit

without the extra tab being made.

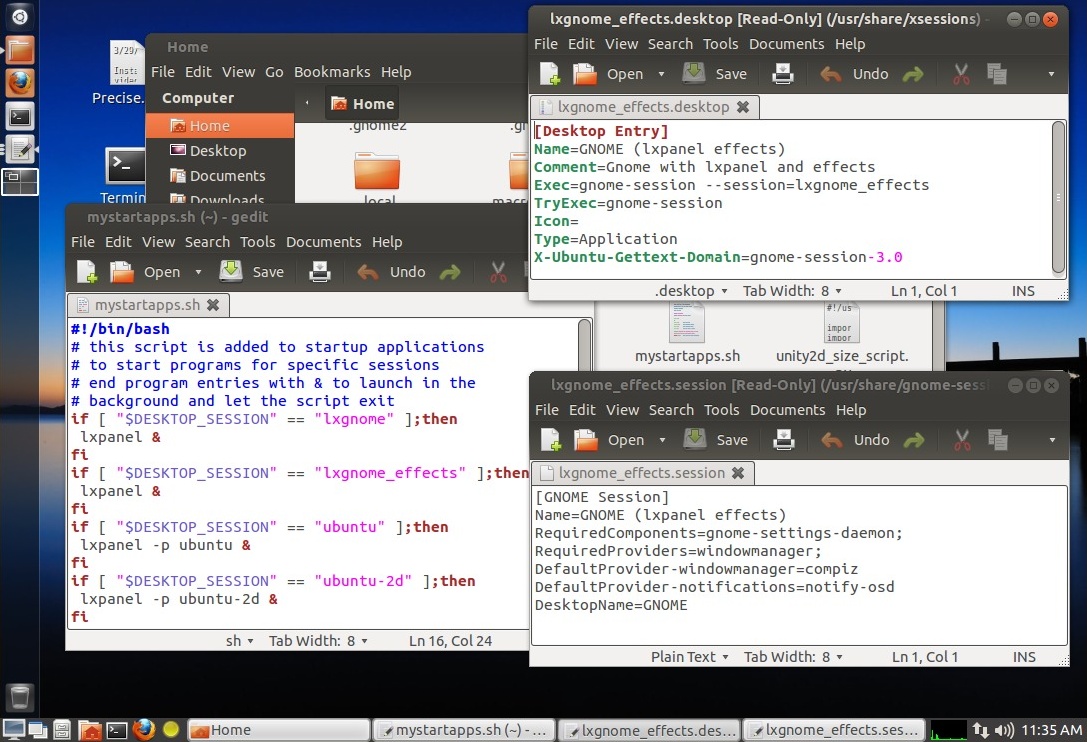

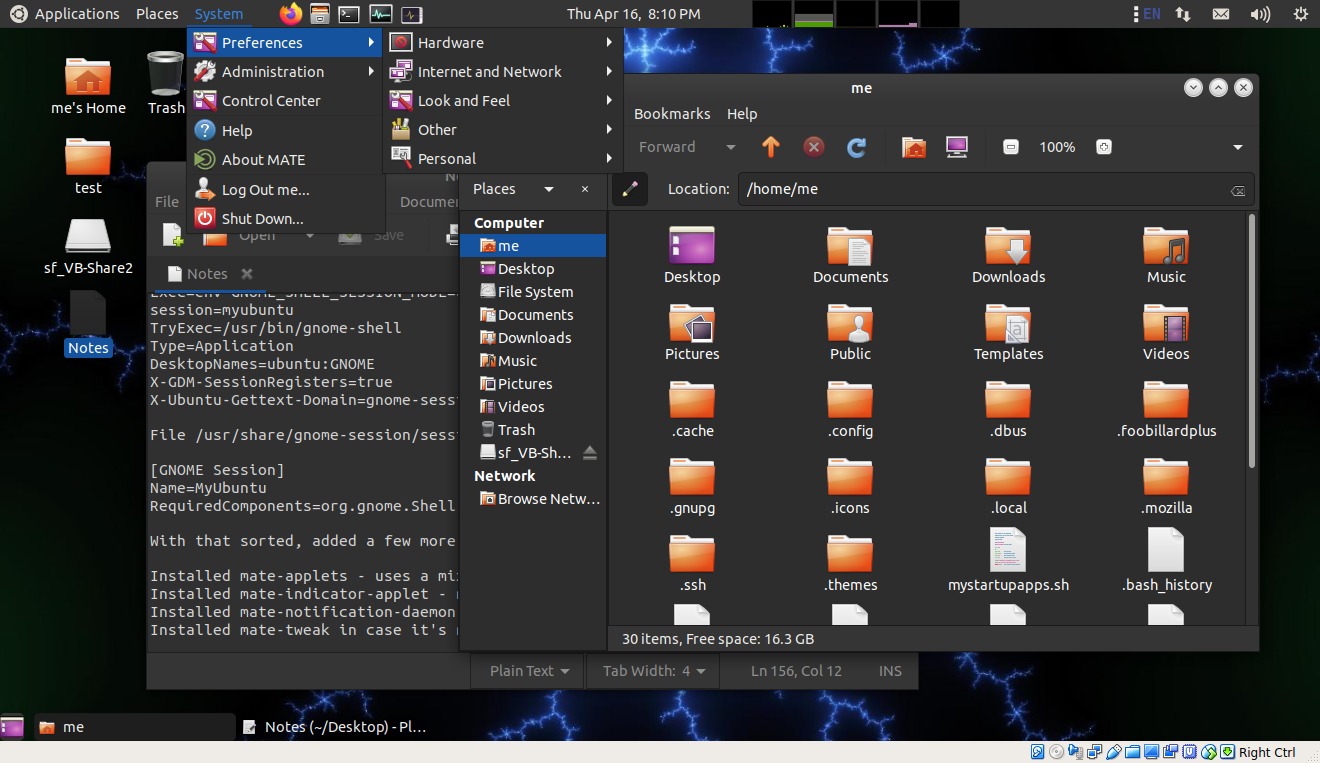

Now to create a custom "LxGnome" session... Add the following

system files... (see above notes about editing system files)

/usr/share/gnome-session/sessions/lxgnome.session

    [GNOME Session]
    Name=GNOME (lxpanel)
    RequiredComponents=gnome-settings-daemon;
    RequiredProviders=windowmanager;
    DefaultProvider-windowmanager=metacity
    DefaultProvider-notifications=notify-osd
    DesktopName=GNOME

/usr/share/xsessions/lxgnome.desktop

    [Desktop Entry]
    Name=GNOME (lxpanel)
    Comment=Gnome with lxpanel
    Exec=gnome-session --session=lxgnome
    TryExec=gnome-session
    Icon=
    Type=Application
    X-Ubuntu-Gettext-Domain=gnome-session-3.0

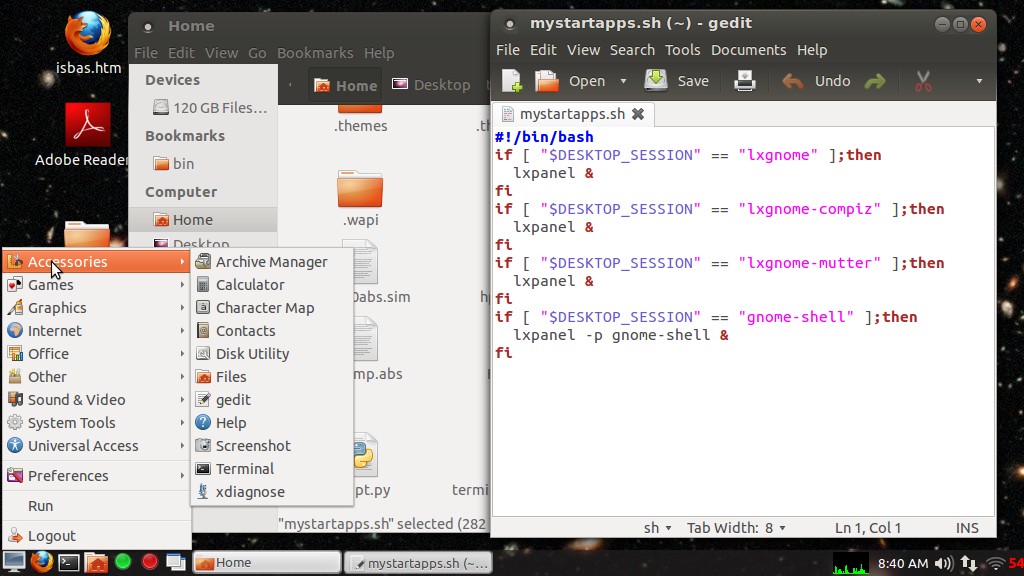

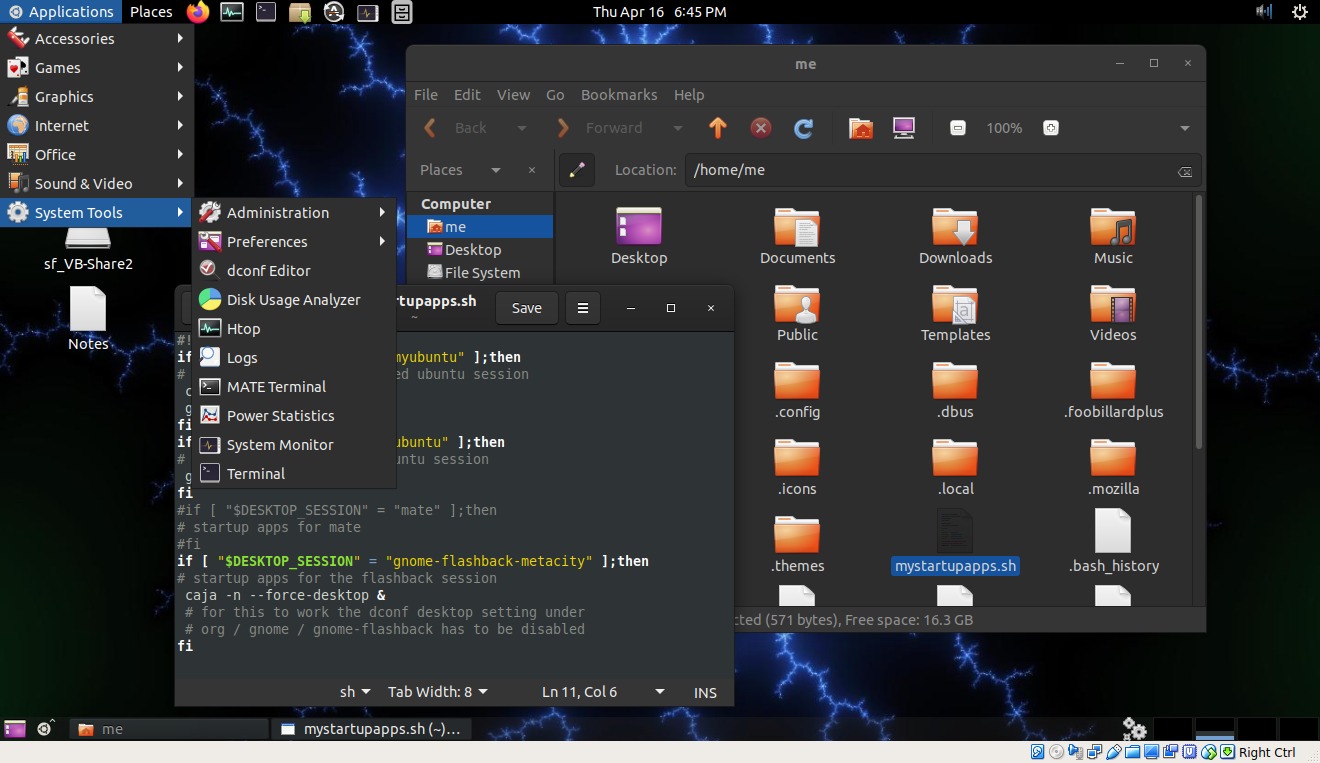

Add the following script anywhere convenient, I put it in my home

directory for easy editing...

mystartapps.sh

    #!/bin/bash
    if [ "$DESKTOP_SESSION" == "lxgnome" ];then
      lxpanel &
    fi

Make sure it's executable. Go to the Startup Applications applet

and add an entry for mystartapps.sh - you'll have to specify the

full path to the script, for my system for the command box I

entered: /home/terry/mystartapps.sh
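Startup Applications entries are normally stored as .desktop files under ~/.config/autostart, so the same entry can be sketched from a terminal. The file name mystartapps.desktop, the Name= text and the add_autostart helper name are made up for illustration.

```shell
#!/bin/bash
# Sketch: add a startup entry by writing an autostart .desktop file.
# add_autostart and the file name mystartapps.desktop are hypothetical.
add_autostart() {
    local script="$1"
    local dir="${2:-$HOME/.config/autostart}"
    mkdir -p "$dir"
    cat > "$dir/mystartapps.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=My startup apps
Exec=$script
EOF
}

# Example: add_autostart /home/terry/mystartapps.sh
```

The entry should then show up in the Startup Applications list, where it can be disabled or removed as usual.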

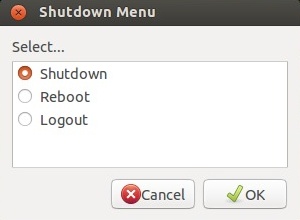

To create custom shutdown and logout icons on the desktop, use

the CreateLauncher script.

For Shutdown use the command line:

/usr/lib/indicator-session/gtk-logout-helper --shutdown

For LogOut use the command line:

/usr/lib/indicator-session/gtk-logout-helper --logout

Choose whatever icons you want for these, instead of trying to

navigate through the hundreds of icons you can just make them,

then launch a terminal on the desktop (using the "Terminal"

nautilus script), do ls to see the filename, then do gedit

filename.desktop to directly edit the launchers, change the Icon=

line for the logout launcher to system-log-out and change the icon

line for the shutdown launcher to system-shutdown.
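The Icon= edit can also be scripted instead of done by hand in gedit. A rough sketch, assuming GNU sed (for the -i option) and hypothetical launcher file names; set_launcher_icon is a made-up helper name.

```shell
#!/bin/bash
# Sketch: replace the Icon= line of a launcher (.desktop) file.
# set_launcher_icon is a hypothetical helper name.
set_launcher_icon() {
    local file="$1"
    local icon="$2"
    # rewrite the whole Icon= line in place (GNU sed -i)
    sed -i "s|^Icon=.*|Icon=$icon|" "$file"
}

# Example (run in ~/Desktop, the file names here are guesses - use ls
# to see what the launchers are really called):
#   set_launcher_icon LogOut.desktop system-log-out
#   set_launcher_icon Shutdown.desktop system-shutdown
```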

Now you can try booting into the newly made lxgnome session - log

out and select GNOME (lxpanel). The first run of lxpanel will be a mess, but don't worry, that gets fixed next. For starters the default system tray and desktop pager applets will probably be botched, right-click and remove them. Right-click the panel and select Panel Settings, click the Advanced tab and set File Manager to nautilus, set Terminal Emulator to gnome-terminal, and set Logout Command to:

/usr/lib/indicator-session/gtk-logout-helper --logout

Click on the Appearance tab and set Background to a solid color, click on the color button and pick something, probably generic gray; for coolness it can be made semi-transparent. Click on the Panel Applets tab and add back the system tray, which should add proper volume control and network manager icons. To set the clock up to show the date and normal AM/PM time, right-click it, select Clock Settings, and set the format to the string:

%a %b %e %l:%M %p

Or to show just the time without the date use the string:

%l:%M %p

Change the other lxpanel options to taste: add and/or rearrange applets, edit the launchers to what you want, etc.
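The clock format strings look like standard strftime codes, so they can be previewed in a terminal with the date command before typing them into Clock Settings. A small sketch, assuming GNU date (the -d option and the %l code are GNU extensions); show_clock is a made-up helper name.

```shell
#!/bin/bash
# Sketch: preview a clock format string for a given date and time.
# show_clock is a hypothetical helper name; LC_ALL=C forces English
# day and month names.
show_clock() {
    LC_ALL=C date -d "$1" +"$2"
}

show_clock "2012-05-19 15:07" "%a %b %e %l:%M %p"   # Sat May 19  3:07 PM
show_clock "2012-05-19 15:07" "%l:%M %p"            #  3:07 PM
```

%e and %l pad single digits with a space rather than a zero, which is why there are two spaces before the 3.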

For eye-candy (shadows, animations etc) install the mutter package then edit the lxgnome.session file to change metacity to mutter. Or make new session/desktop files edited appropriately to select whether to use effects or not.
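Making a second session that differs only in the window manager can be sketched with sed. The names here are assumptions: make_wm_session is a made-up helper, and the lxgnome-mutter style of file name is just one possible naming scheme.

```shell
#!/bin/bash
# Sketch: copy the lxgnome session/desktop files to a variant that uses
# another window manager. make_wm_session is a hypothetical helper name.
make_wm_session() {
    local wm="$1"
    local sessions="${2:-/usr/share/gnome-session/sessions}"
    local xsessions="${3:-/usr/share/xsessions}"
    # new .session file: swap the window manager and the display name
    sed -e "s/^DefaultProvider-windowmanager=.*/DefaultProvider-windowmanager=$wm/" \
        -e "s/^Name=.*/Name=GNOME (lxpanel, $wm)/" \
        "$sessions/lxgnome.session" > "$sessions/lxgnome-$wm.session"
    # new .desktop file: point it at the new session
    sed -e "s/--session=lxgnome/--session=lxgnome-$wm/" \
        -e "s/^Name=.*/Name=GNOME (lxpanel, $wm)/" \
        "$xsessions/lxgnome.desktop" > "$xsessions/lxgnome-$wm.desktop"
}

# Example (needs root for the system directories):
#   make_wm_session mutter
```

A new session gets a new DESKTOP_SESSION value, so the startup script needs a matching entry for it or lxpanel won't start there.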

If anything goes wrong and it doesn't work, log back into Unity

(or Gnome Classic) and figure it out - the above documents what I

did to get the kind of GUI I want but your experience might be

different, and there will probably be other aspects of the system

that need tweaking. I think I covered the basics but if there are

any errors or issues in the above instructions, let me know. Also

these techniques can be used with other panel apps besides

lxpanel, which does have some bugs - the virtual desktop pager

doesn't work quite right, the add-to-desktop feature half-locks-up

and requires the resulting launchers be made executable (it was

designed mainly for LXDE, not Gnome) - but for me being able to

configure it to do what I want makes up for the glitches.

LxPanel can also be used with Unity and Gnome Shell. Since the

panel settings probably need to be different, copy the

~/.config/lxpanel/default directory to a new directory or

directories under ~/.config/lxpanel and rename as needed - I name

new configs to match the DESKTOP_SESSION variable so for Unity

call the new directory "ubuntu", for Unity-2D use "ubuntu-2d", for

Gnome Shell use "gnome-shell". The lxpanel -p option is used to

load alternate configurations. Edit mystartapps.sh to something

like this...

    #!/bin/bash
    if [ "$DESKTOP_SESSION" == "lxgnome" ];then
      lxpanel &
    fi
    if [ "$DESKTOP_SESSION" == "ubuntu" ];then
      lxpanel -p ubuntu &
    fi
    if [ "$DESKTOP_SESSION" == "ubuntu-2d" ];then
      lxpanel -p ubuntu-2d &
    fi
    if [ "$DESKTOP_SESSION" == "gnome-shell" ];then
      lxpanel -p gnome-shell &
    fi

Include only the sessions you want lxpanel to run in. BTW the & symbols at the end of the lxpanel lines tell the script to launch the panel and move on. This avoids having the script continue to run waiting for the panel to exit, and also permits adding multiple items if other components are desired for a particular session.
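As the list of sessions grows, the same logic can be written as a single case statement. This is just an alternative sketch, not the script actually in use; panel_command is a made-up helper name.

```shell
#!/bin/bash
# Sketch: pick the lxpanel command line for a given session name.
# panel_command is a hypothetical helper name.
panel_command() {
    case "$1" in
        lxgnome)
            echo "lxpanel" ;;                 # default profile
        ubuntu|ubuntu-2d|gnome-shell)
            echo "lxpanel -p $1" ;;           # profile named after the session
        *)
            echo "" ;;                        # no panel for other sessions
    esac
}

# Use in mystartapps.sh:
#   cmd=$(panel_command "$DESKTOP_SESSION")
#   if [ -n "$cmd" ]; then $cmd & fi
```

Each profile directory can be made beforehand with something like cp -r ~/.config/lxpanel/default ~/.config/lxpanel/ubuntu.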

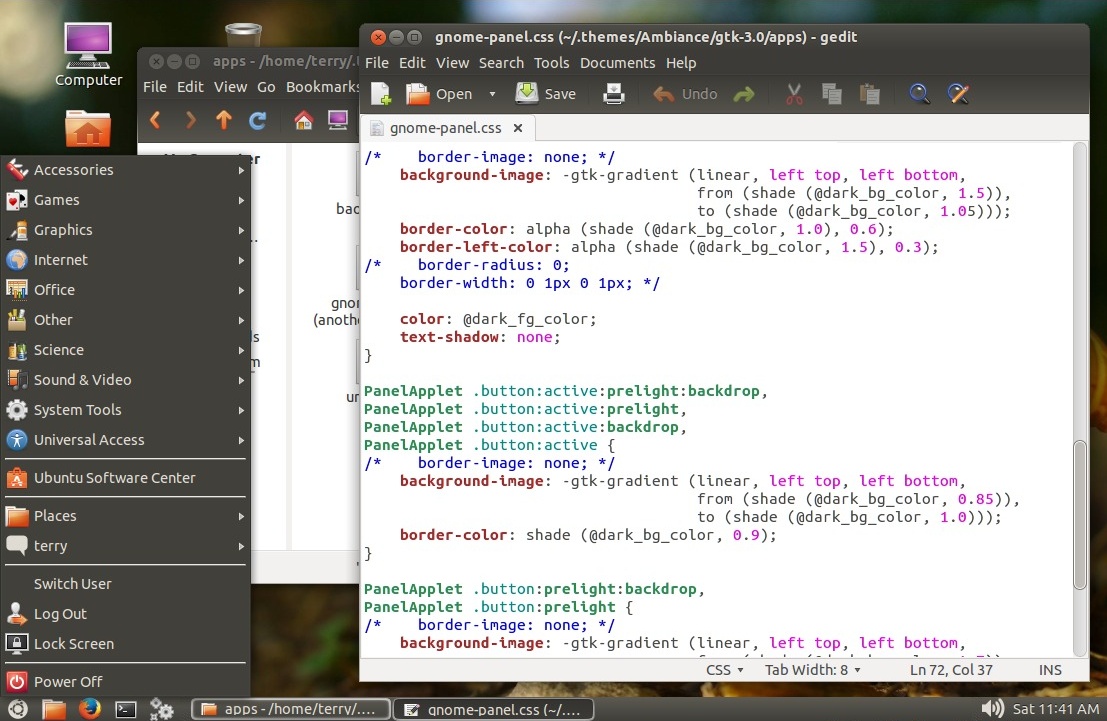

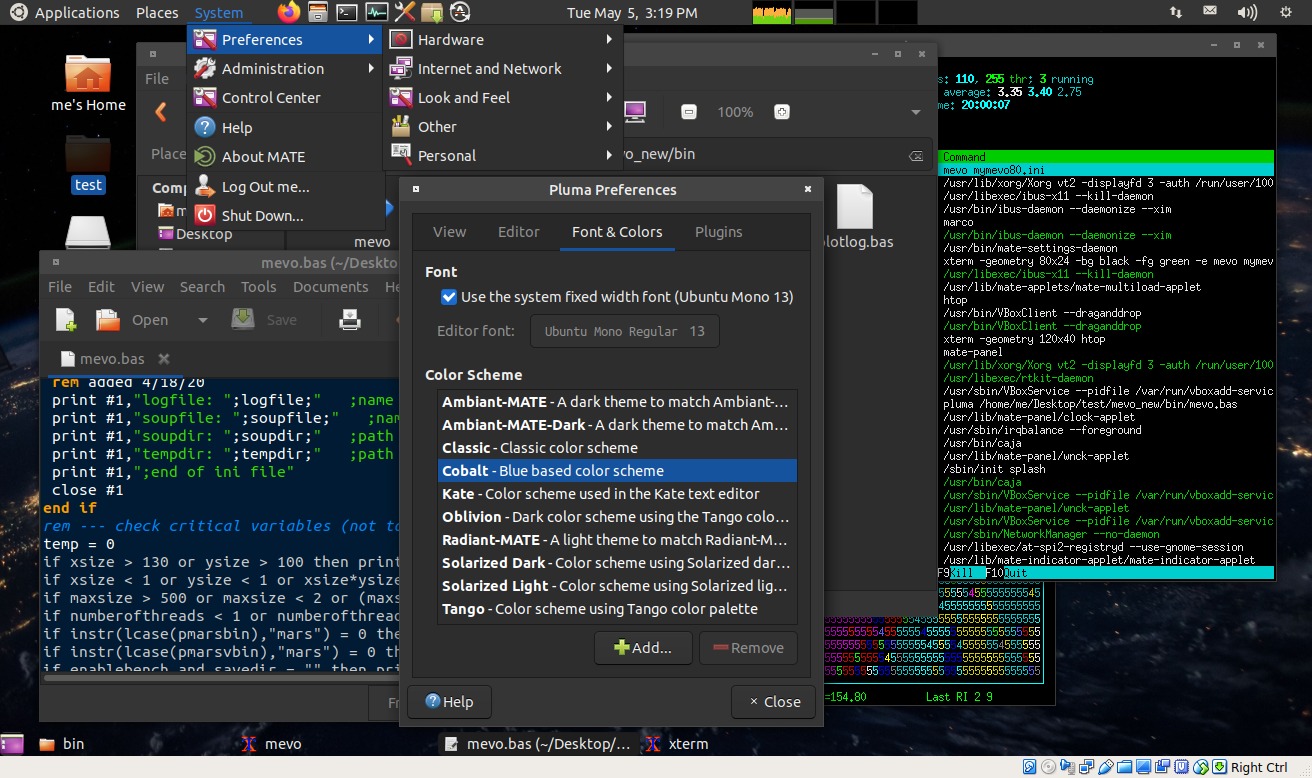

Presently the default Ambiance theme looks a bit funny to me, so I made my own somewhat modified Ambiance theme. To use it copy the tar.gz file to the

~/.themes directory (make it if .themes doesn't exist) then

extract the archive. Use gnome-tweak-tool ("Advanced Settings") to

select AmbianceMod. This has the effect of making any GTK apps

running as root look like junk, to avoid that rename the theme to

Ambiance instead, then it overrides the stock Ambiance theme and

root things get the stock theme.

This covers most of the tweaks I've made to my Ubuntu 12.04 systems (virtual and real). Other tweaks I've made include moving the window controls back to the right, disabling the overlay scroll bar, and/or disabling the global menu but that only matters when using Unity so I don't bother with that one. On my systems I had to uninstall apport to keep it from displaying useless crash warnings when nothing was wrong, that might be fixed now. There are numerous web pages explaining how to make basic Ubuntu 12.04 tweaks so I won't get into that here.

A Test Upgrade from 10.04 to 12.04

4/28/12 - Running from a USB stick is a safe way to test

compatibility on my main system but I want a real install to play

with.. so upgraded my HP Mini 110 that was running Ubuntu 10.04. I

got the current 32-bit 12.04 ISO, used Startup Disk Creator to put

it on a USB stick, booted it on my Mini, ran the install icon,

chose the option to upgrade the existing 10.04 installation

(already selected), used the same user name I was already using

and let it do its thing. I didn't have internet connected at the

time so it couldn't replace outdated installed apps that weren't

already on the ISO, so it told me I'd have to reinstall some

things - that's fine, I didn't want to be accessing the overloaded

repositories during installation and I needed to clean out old

cruft anyway. Rebooted into Unity, connected ethernet long enough

to run the restricted drivers thing which installed the broadcom

wireless driver. Tried to use Software Center to reinstall wine

but it didn't work... just put a non-functional icon on the

launcher. Opened a terminal and used sudo apt-get update then sudo

apt-get install wine - had to accept a license for mscorefonts, I

guess Software Center can't handle text-mode installation

questions (or something else bugged out). Repeated sudo apt-get install for dosemu, gnome-panel and other stuff that I need or that got removed and I still want. Logged out and logged into Gnome

Classic - some minor cosmetic issues (already noted) but otherwise

works fine. Did a good job of preserving my data and custom apps, all my wine/dos apps, /usr/local/bin stuff, associations, desktop icons, nautilus-scripts etc all work fine, all I had to do was some

minor editing to a few of my corewar scripts to compensate for the

new version of xterm. The unclean shutdown bug I was getting in

VirtualBox and on an EXT4-formatted thumbdrive doesn't seem to be

a problem on this upgraded EXT3 system, dmesg | grep EXT shows no

errors... whatever causes that doesn't happen on this system.

Sound works and the speakers stop when the headphone jack is

inserted, video works, etc. So far it looks like a successful

upgrade.

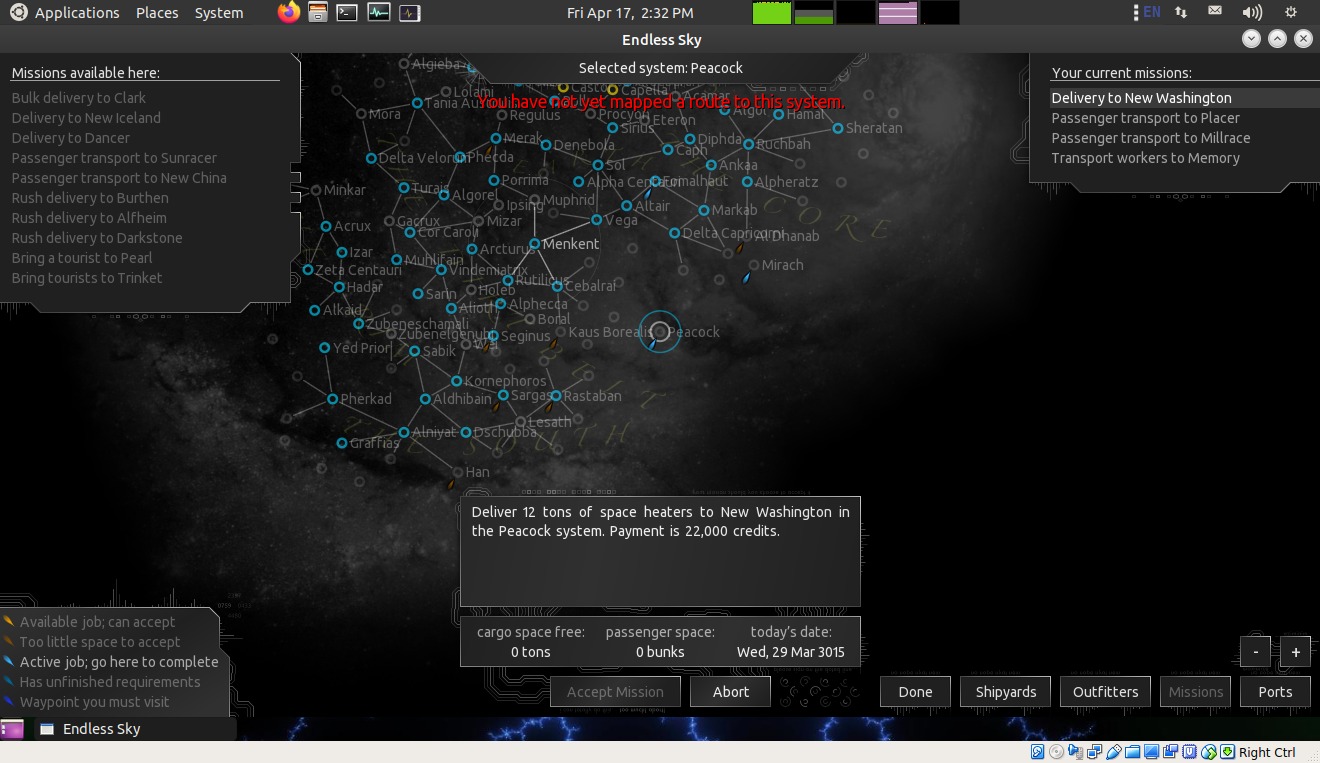

4/29/12 - "lxgnome" on my HP Mini 110, using the mutter window

manager and the stock Ambiance theme...

...Nice. For me anyway.. lxpanel doesn't have integrated social

networking and all that stuff like the Unity taskbar, but I don't

use that stuff and I'd much rather have a panel with a normal app

menu and window list. This is using lxpanel's included network

status monitor applet (had to set the config tool to

network-manager to make it work), wireless also shows up with the

systray applet, at first that config (the default when first

running lxpanel) was buggy but after removing systray, adding

lxpanel's own volume and adding systray back it seems to work OK

now. To get effects at first I tried enabling Metacity

compositing, but that was too glitchy... icons leave trails when

moved. Compiz looks good but has a bit of momentary glitching when

restoring minimized windows. Mutter works very well with nice but

not overbearing effects - minimized windows smoothly collapse to

their proper place on the panel. To make the additional sessions I

simply copied my existing lxgnome .session and .desktop files to

-compiz and -mutter copies and edited to change the names and

window manager, added extra entries to my startup apps script

(while at it made a lxpanel config for gnome-shell). In all the

sessions I can run the Unity 2D launcher as needed to make use of

its facilities... not too crazy about Unity as a sole interface

but when paired with a more conventional panel for task

management, Unity provides some really cool features, especially

for searching for files and videos. All one really needs to do to

restore a traditional environment to Unity is to install lxpanel

(or any conventional panel), add it to the startup apps and do a

bit of configuring... the scripts and stuff are needed only if

using multiple sessions.

5/10/12 - quite a few 12.04 updates have come through in the last

week, fixing among other things the unclean shutdown glitch and

improperly-updating compiz window title bars under VirtualBox.

Other VirtualBox graphics glitches remain but it's fine with

no-effects metacity sessions... graphics card emulation only goes

so far but that it can do as well as it does is quite amazing..

some video sites like Crackle work better from my virtual 12.04

system than from my native 10.04 system... buffers better with

fewer or no stream lockups.

On my HP Mini 110 I replaced my Unity-On Unity-Off launchers with

a single launcher/script that just turns it on and off...

    #!/bin/bash
    if ps -e | grep "unity-2d-shell";then
      killall unity-2d-shell
    else
      unity-2d-shell &
    fi

...one less icon matters with a single-bar setup.

5/14/12 [edited 5/19/12] - I had my first real-life app for Gnome

3 / Ubuntu 12.04 - I needed to configure my HP Mini 110 so that I

could edit microcontroller source code written in Great Cow Basic

(that part is easy - gedit), recompile the code using the

GCB compiler configured to use the gpasm assembler (from the

gputils package) to produce a hex file, rig up a simple

serial-port terminal to talk to the gizmo via a generic USB serial

adapter, and upload the hex binary code to the gizmo through the

USB serial interface. This wasn't for some optional hobby project

but for a mission-critical tester used for a product manufactured

by the company I work for, I had to bring the thing home to work

on the code (on my 10.04 machine) but if other changes are needed

I need to have a programming rig I can take to the factory. Sounds

like a good test.

What I want... edit the source code, right click the source file

and select the GCB compiler, double-click an icon or script to

launch the serial terminal emulator, right click the hex file made

by the compiler and select sendhexUSB to send it to the gizmo,

watch the code being uploaded on the terminal. Which is exactly

what I ended up with but getting there showed a Gnome 3 fail in

its default configuration - an inability to associate files to

arbitrary apps.

So... copied over my existing pictools directory containing GCB

and my compiler scripts - it's a FreeBASIC app so already 32 bit,

should work fine (and does), installed gputils from the

repository, found the source for dterm - my favorite lightweight

serial terminal - and tried to compile it, failed, edited the

Makefile to remove -Werror so warnings won't stop it, compiled and

copied the resulting dterm binary to /usr/local/bin. Now to get it

to work... says /dev/ttyUSB0: permission denied. OK... did some

googling, learned I had to do ls -la /dev/ttyUSB0 and note which

group appeared after root (dialout) and add myself to that group.

Not wanting to goof around from a command line I installed

gnome-system-tools to get the traditional "Users and Groups" GUI,

added myself to the dialout group, logged out and back in, now my

script that runs dterm works and I can interact with the gizmo. So

far so good.
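For reference, the same permission check can be done from a terminal. A rough sketch, assuming GNU stat; device_group and in_group are made-up helper names.

```shell
#!/bin/bash
# Sketch: find which group owns a device and whether the current user
# is in that group. Both helper names are hypothetical.
device_group() {
    # print the owning group of a file (GNU stat)
    stat -c %G "$1"
}

in_group() {
    # succeed if the current user belongs to the named group
    id -nG | tr ' ' '\n' | grep -qxF "$1"
}

# Example:
#   device_group /dev/ttyUSB0      (dialout on the system described here)
#   in_group dialout || sudo adduser "$USER" dialout
# then log out and back in for the new group to take effect.
```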

Now to associate *.gcb and *.hex files to the appropriate tool

scripts. I used the Assogiate app to create new file types for

text/x-gcb and text/x-hex, adding text/plain to both and adding

the appropriate file masks and file type descriptions. New file

types show up in Nautilus, right-click to add my associations and

that's where I ran into problems - it only can associate to

"officially" installed apps or things already in its association

database (thank goodness when I upgraded it kept all my many

associations!). [...] More here about this association

feature/bug.

5/20/12 - OK I think I figured out this association stuff...

still don't fully understand how it all works but really the core

issue is being able to make an arbitrary binary, script or command

appear in the list of apps that can be associated, and it turns

out that part is easy. The idea is to put a custom .desktop file

in ~/.local/share/applications containing entries like this...

    [Desktop Entry]
    Type=Application
    NoDisplay=true
    Name=Name of the application
    Exec=/path/to/the/executable %f
    Terminal=false

The key to making it work is the Exec line has to end with %f or

%U or it won't show up in the list of apps that can be associated.

So now just need an easy way to create the .desktop file without

having to jump through a bunch of hoops, the solution I came up

with is this script...

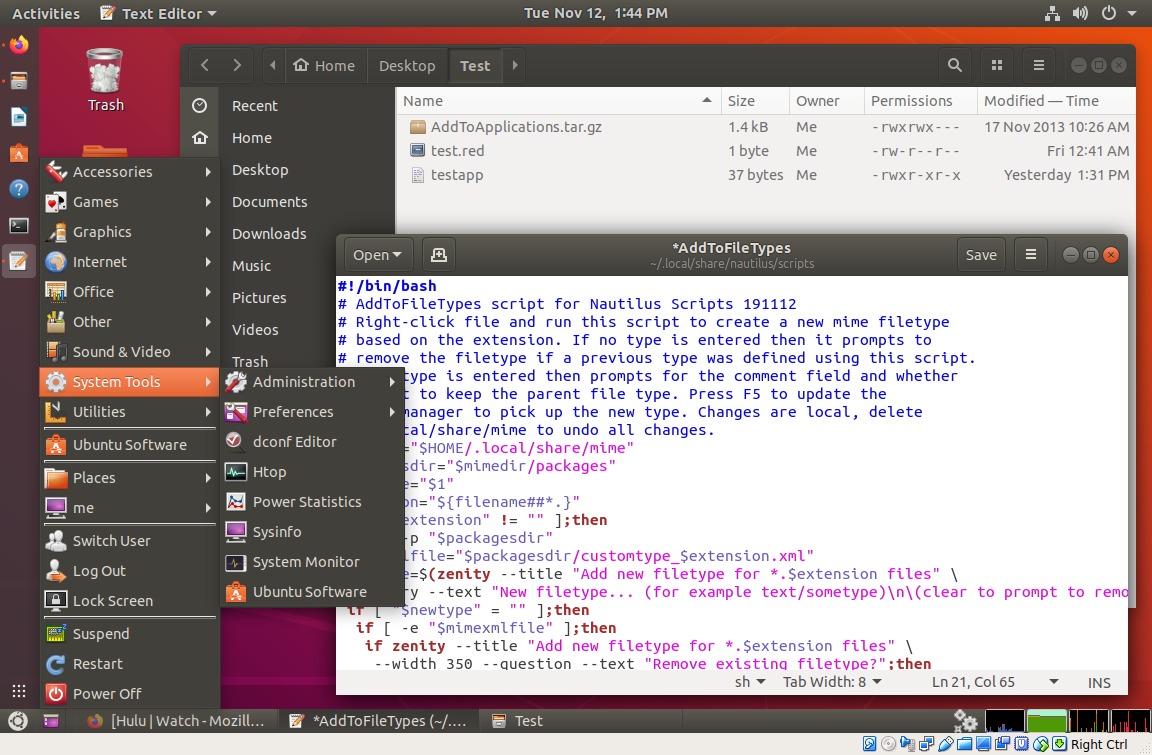

    #!/bin/bash
    #
    # "AddToApplications" by WTN 5/20/2012 modified 11/17/2013
    #
    # This script adds an app/command/script etc to the list of apps
    # shown when associating files under Gnome 3. All it does is make
    # a desktop file in ~/.local/share/applications, it doesn't actually
    # associate files to apps (use Gnome for that). Delete the added
    # customapp_name.desktop file to remove the app from association lists.
    # If run without a parm then it prompts for a command/binary to run.
    # If a parm is supplied then if executable uses that for the Exec line.
    #
    appdir=~/.local/share/applications
    prefix="customapp_"  # new desktop files start with this
    if [ "$1" != "" ];then  # if a command line parm specified..
      execparm=$(which "$1")  # see if it's a file in a path dir
      if [ "$execparm" == "" ];then  # if not
        execparm=$(readlink -f "$1")  # make sure the full path is specified
      fi
      if [ ! -x "$execparm" ];then  # make sure it's an executable
        zenity --title "Add To Associations" --error --text \
          "The specified file is not executable."
        exit
      fi
      if echo "$execparm" | grep -q " ";then  # filename has spaces
        execparm=\""$execparm"\"  # so add quotes
      fi
    else  # no parm specified, prompt for the Exec command
      # no error checking, whatever is entered is added to the Exec line
      execparm=$(zenity --title "Add To Associations" --entry --text \
        "Enter the command to add to the list of associations")
    fi
    if [ "$execparm" == "" ];then exit;fi
    nameparm=$(zenity --title "Add To Associations" --entry --text \
      "Enter a name for this associated app")
    if [ "$nameparm" == "" ];then exit;fi
    if zenity --title "Add To Associations" --question --text \
      "Run the app in a terminal?";then
      termparm="true"
    else
      termparm="false"
    fi
    # now create the desktop file - the format seems to be a moving target
    filename="$appdir/$prefix$nameparm.desktop"
    echo > "$filename"
    echo >> "$filename" "[Desktop Entry]"
    echo >> "$filename" "Type=Application"
    #echo >> "$filename" "NoDisplay=true" #doesn't work in newer systems
    echo >> "$filename" "NoDisplay=false" #for newer systems but shows in menus
    echo >> "$filename" "Name=$nameparm"
    echo >> "$filename" "Exec=$execparm %f"
    # I see %f %u %U and used to didn't need a parm, newer systems might be picky
    # according to the desktop file spec...
    #  %f expands to a single filename - may open multiple instances for each file
    #  %F expands to a list of filenames all passed to the app
    #  %u expands to single filename that may be in URL format
    #  %U expands to a list of filenames that may be in URL format
    # ...in practice all these do the same thing - %F is supposed to pass all
    # selected but still opens multiple instances. If %u/%U get passed in URL
    # format then it might break stuff.. so for now sticking with %f
    echo >> "$filename" "Terminal=$termparm"
    #chmod +x "$filename" #executable mark not required.. yet..
    # when executable set Nautilus shows name as Name= entry rather than filename
    # app should now appear in the association list

[updated 11/17/13 to also work with newer versions of Gnome]

To make it easy to use I saved it as "AddToApplications" in the ~/.gnome2/nautilus-scripts directory (executable of course). To add a custom script or binary to the list of apps that can be associated I right-click the file and select Scripts|AddToApplications, give it a name that will appear in the application list (choose carefully to avoid conflicting with existing apps), then click yes or no to specify whether the app should be run in a terminal. The script then saves a desktop file named "customapp_name.desktop", where name is the name that was entered; afterwards the custom app appears in the list of applications that can be associated. If command line parameters are needed, run the AddToApplications script without a selected file or parm; it then prompts for a command line first, and the filename being opened by association is added after any parms. Assogiate is still needed to create custom file types based on specific extensions etc but I'm used to that, same with Gnome 2.
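If a custom app doesn't show up in the association list, one thing to check is whether its Exec line really ends with a field code. A small checking sketch, assuming the customapp_ prefix used by the script; has_field_code and check_custom_apps are made-up helper names.

```shell
#!/bin/bash
# Sketch: report which custom desktop files have an Exec line ending in
# a field code (%f, %F, %u or %U). Both helper names are hypothetical.
has_field_code() {
    grep -Eq '^Exec=.* %[fFuU]$' "$1"
}

check_custom_apps() {
    local dir="${1:-$HOME/.local/share/applications}"
    local f
    for f in "$dir"/customapp_*.desktop; do
        [ -e "$f" ] || continue        # no matching files at all
        if has_field_code "$f"; then
            echo "ok: $f"
        else
            echo "no field code: $f"
        fi
    done
}

# Example: check_custom_apps
```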

5/23/12 - Originally when trying to configure associations on my

Mini 110 I had installed kde-plasma-desktop to make use of its

association utility (and get another alternate desktop environment

to play around with), but it turns out that associations made

using KDE don't show up in Gnome's list of apps that can be

associated, and while at first they appear in the right-clicks of

the associated file types, they're subject to go away if the

associations are further edited. I ended up having to redo the

associations using the AddToApplications script, all good now.

Other utilities that have file association options include Thunar,

PCmanFM and Ubuntu Tweak - but at this point I prefer the

simplicity of the script approach.. I know what my script does,

the other utilities, not so much.

6/2/12 - LxPanel's battery monitor is broken in the current stock

0.5.8 version, at least on my Mini 110. So I downloaded the 0.5.9 source (which mentions that is fixed) and prepared it using the command ./configure --prefix=/usr so it would overwrite the stock

install. Of course had to keep installing -dev packages until

configure was happy - not a process for the impatient and packages

are often named somewhat differently than what the error message

asks for, might have to guess - but used to it. Once happy did

make then sudo make install... battery monitor works. Sort of,

percentage works, time remaining doesn't. By using --prefix=/usr

the package system still thinks I have lxpanel 0.5.8 so if an

updated version becomes available through the normal channels it

should replace the compiled version.

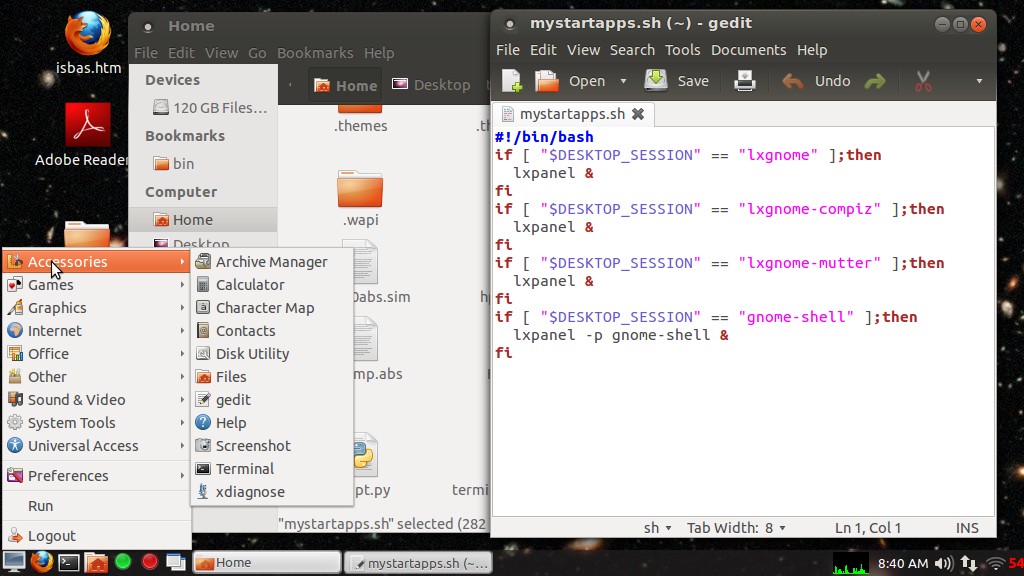

I've been fooling around with my Gnome Shell session...

Thanks to user contributions Gnome Shell now works for folks like

me that prefer a traditional app menu and window list. I was using

LxPanel for the bottom panel in my Gnome Shell session, but it's

been replaced by the super-cool Panel Docklet extension - I

especially like being able to right-click minimized windows and

get a live overview of what the app instances are doing. I had to

do a bit of configuring - the All-in-one places extension needed

some editing to get it to fit vertically, and used the

gnome-shell-extension-prefs utility to set up the system-monitor

extension so it would fit the panel and not disable the stock

battery monitor icon (which properly shows time remaining when

clicked). The preferences utility can be run through the

extensions.gnome.org website or run manually, to make it easier to

access I used Alacarte (Main Menu) to make a menu entry for it.

Unity 2D is optional, triggered by clicking the yellow shortcut

which runs the on/off script I made. Sometimes Unity 2D is handy

for listing recent files, launching apps, searching, etc. Mostly

it runs fine this way but there are some "free" side effects of

running it like a stand-alone app - occasionally it goes away when

an app is closed (so far that only happens if the app was actually

launched from Unity), and it spams the .xsession-errors file every

few seconds with warning messages - a fix for that is to

delete/rename .xsession-errors and replace with a symlink to

/dev/null (ln -s /dev/null .xsession-errors), I hardly ever want

to actually see that stuff anyway.
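The symlink fix can be sketched as a small helper that keeps the old log under another name instead of deleting it. silence_xsession_errors and the .old suffix are made up for illustration.

```shell
#!/bin/bash
# Sketch: replace ~/.xsession-errors with a symlink to /dev/null.
# silence_xsession_errors is a hypothetical helper name.
silence_xsession_errors() {
    local home="${1:-$HOME}"
    local log="$home/.xsession-errors"
    # keep an existing regular log file under another name
    if [ -f "$log" ] && [ ! -L "$log" ]; then
        mv "$log" "$log.old"
    fi
    # -f replaces an existing link, -n avoids following it
    ln -sfn /dev/null "$log"
}

# Example: silence_xsession_errors
```

To get the log back, delete the symlink; the file should be recreated at the next login.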

6/3/12 - One minor but somewhat irritating bug with Gnome 3 is

how when launching Nautilus or more instances of Gedit the pointer

spins for several seconds although it's still functional. The fix

is to edit the desktop files of the affected apps in

/usr/share/applications - in particular nautilus.desktop,

nautilus-home.desktop and gedit.desktop - and change to

StartupNotify=false (instead of true).
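An alternative that leaves the system files alone is to put an edited copy in ~/.local/share/applications, since a per-user desktop file with the same name normally takes precedence over the one in /usr/share/applications. A sketch of that approach (not what was done above); disable_startup_notify is a made-up helper name.

```shell
#!/bin/bash
# Sketch: make a per-user copy of a desktop file with StartupNotify
# turned off. disable_startup_notify is a hypothetical helper name.
disable_startup_notify() {
    local src="$1"
    local dest="${2:-$HOME/.local/share/applications}"
    mkdir -p "$dest"
    # copy the file, changing only the StartupNotify line
    sed 's/^StartupNotify=true/StartupNotify=false/' "$src" \
        > "$dest/$(basename "$src")"
}

# Example:
#   disable_startup_notify /usr/share/applications/gedit.desktop
```

Deleting the per-user copy restores the stock behavior.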

I got a better menu going... the Applications Menu extension is

functional but I don't like clicking the categories to expand, so

got the Axe Menu extension (from the author's site as

extensions.gnome.org is down at the moment). Had to make some

settings changes to make it fit the screen but that was easy to

do, right-click the menu thing and tell it not fixed size, reduce

the icon sizes, eliminate some of the left pane categories, set

category box to scroll. One issue - it shows lots of "debian"

categories with invalid icons that I don't want to see, so I made

a little code change...

    .....
    while ((nextType = iter.next()) != GMenu.TreeItemType.INVALID) {
        if (nextType == GMenu.TreeItemType.DIRECTORY) {
            let dir = iter.get_directory();
            if (dir.get_is_nodisplay()) continue; //added by WTN 6/3/12
            this.applicationsByCategory[dir.get_menu_id()] = new Array();
    .....

Got the idea from here. This is one of the coolest things about the extension

system - it makes it easier than ever to change how stuff works.

Sure there's a risk of breaking something but the shell is pretty

good about saying no if something is wrong and if I totally bork

it I can just boot into another session and remove the code - it's

a whole lot safer than directly editing OS code. Now if I can find

better docs...

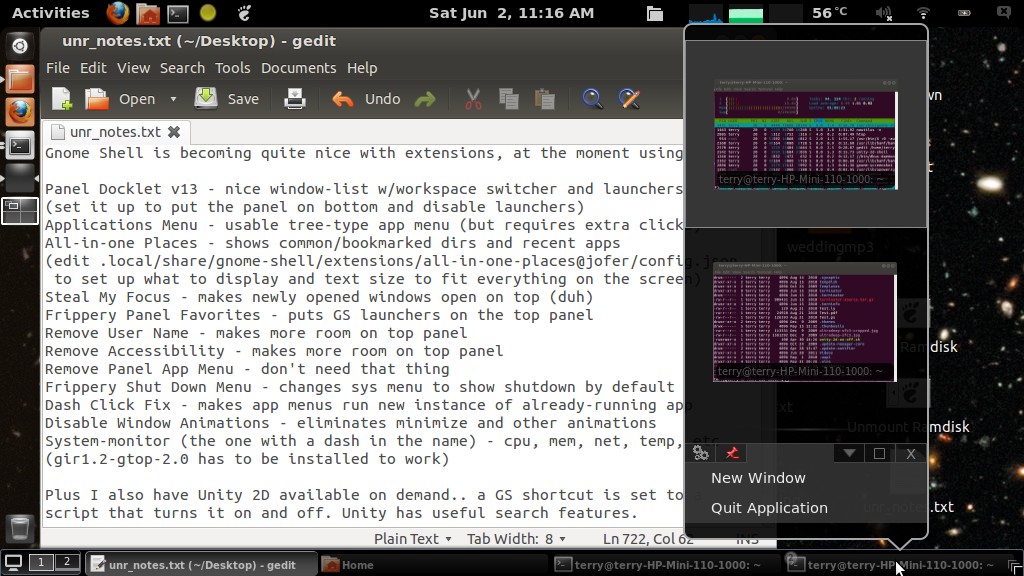

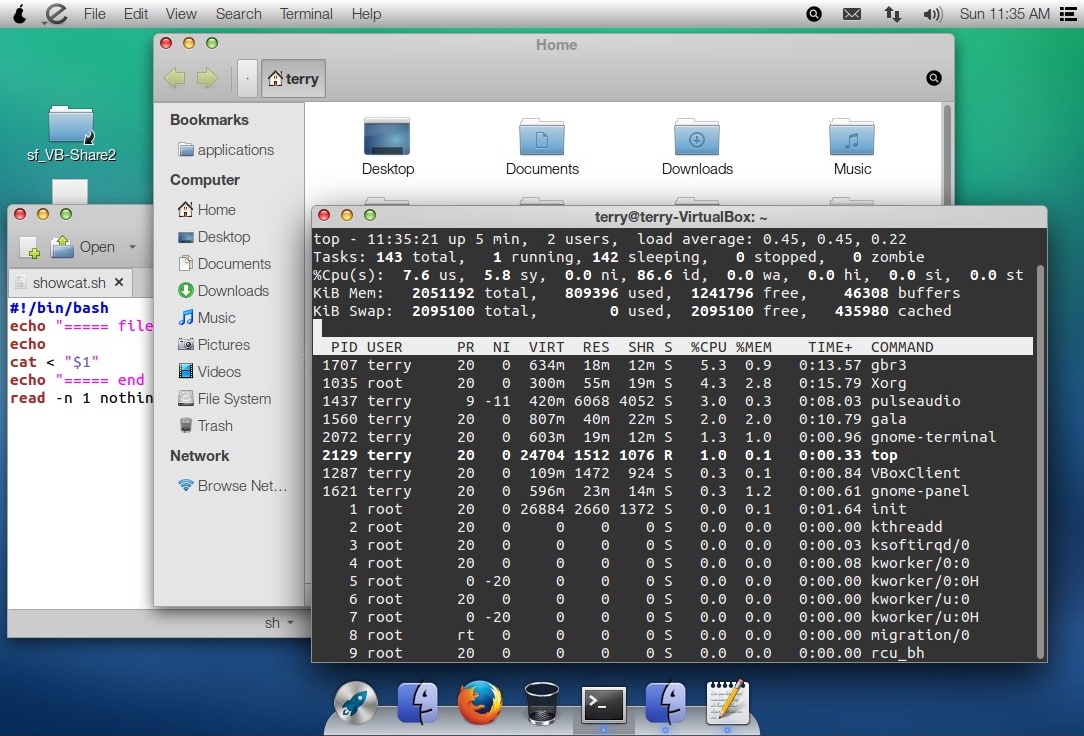

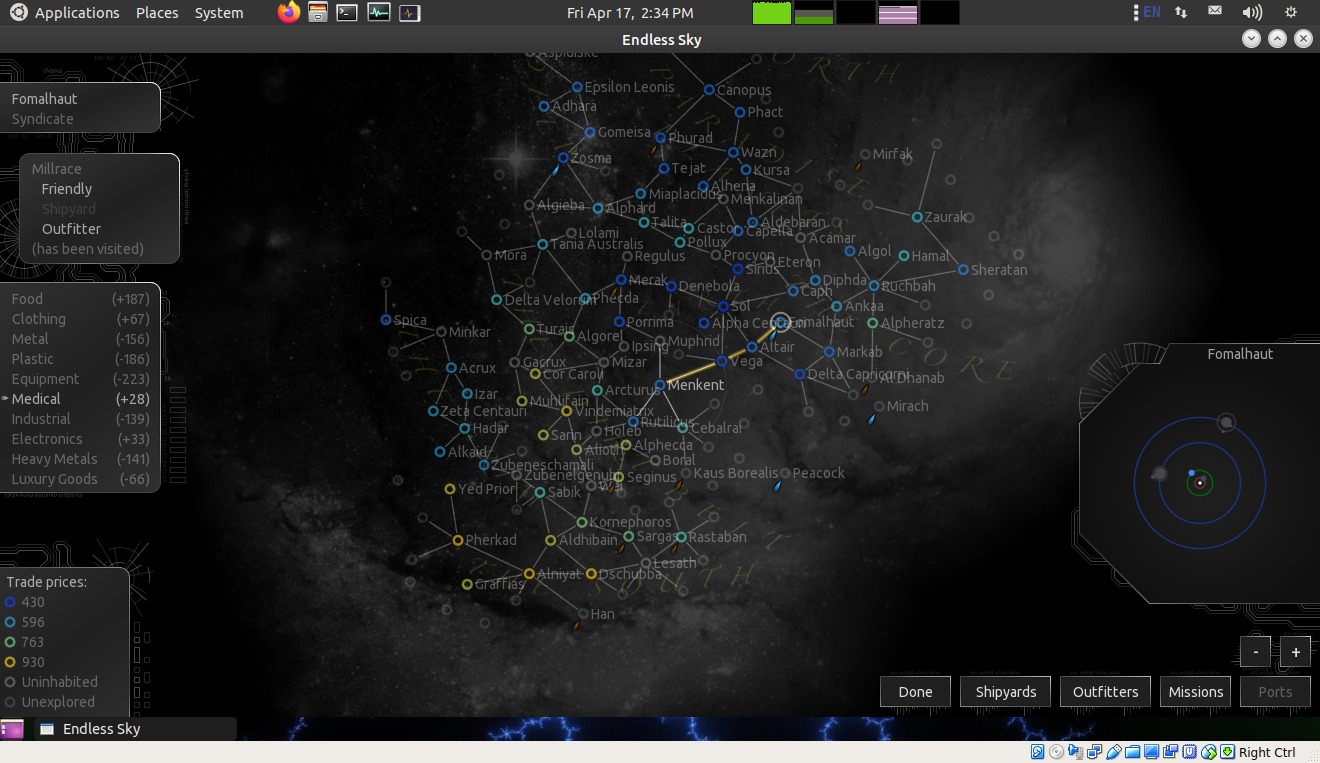

6/4/12 - VirtualBox running Ubuntu 12.04/Gnome Shell with Axe

Menu, Panel Docklet and other extensions...

The setup on my HP Mini 110 is very similar except no Places

section to save vertical space. The Restart Shell option is handy

when running under VirtualBox... fixes the corrupted "Activities"

screen and reinitializes extensions after resizing the VB window

or making code changes.

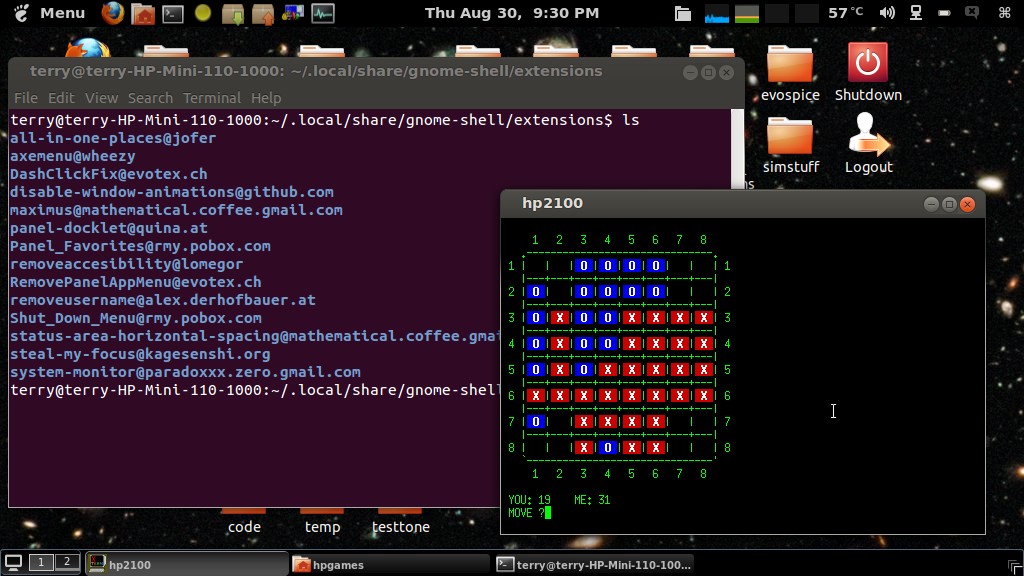

8/30/12 - a screenshot of my present HP Mini 110 Gnome Shell

setup...

The maximus extension is currently disabled - although it

provides more screen space when an app is maximized, it makes it

harder to close or restore maximized apps. The main reason I was

using it was for Firefox, and in that app I can simply press F11

to toggle full screen. The Reversi game is OTHOV, something I made for a hacked version of hpbasic running in simh simulating an HP21xx-type minicomputer.

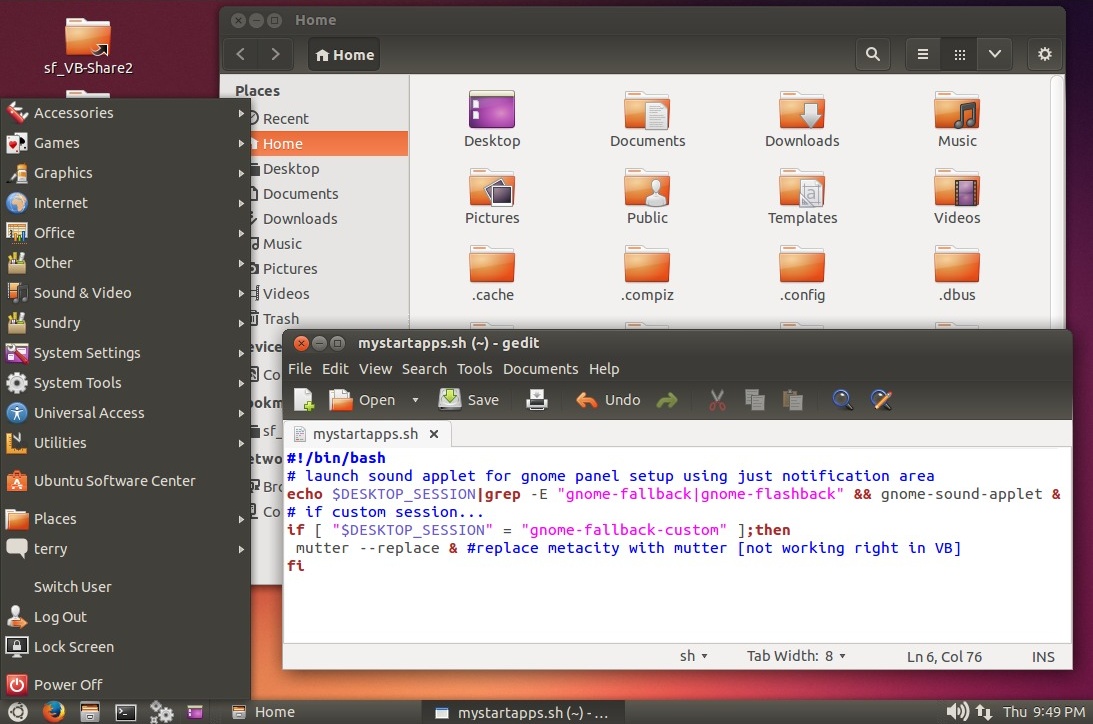

2/16/13 - my current 12.04 "lxgnome_effects" (compiz) session

running under VirtualBox...

The VM screen width was reduced for the screenshot. Normally the

Unity 2D panel on the side isn't running, toggled by the yellow

panel icon.. useful for some things like recent documents and

searching for videos. For that matter, usually I just run this in

a plain lxpanel metacity session (effects slow things down,

especially in a VM) but compiz seems to be working well. Also works

with mutter but under VB it causes an irritating background color

flash when selecting menu options. The theme is a modified version

of Ambiance with a dark Nautilus side panel and tweaks to make

tabs and scroll bars look better (to me anyway). LightDM now

supports timed-autologin, yay.. that was almost a dealbreaker

since I like to turn on my computer and have it just boot, and GDM is

still semi-broken in 12.04.

My main machine is still running Ubuntu 10.04 and although for

the most part it works very well and I'm in no hurry to upgrade,

desktop support is ending in a couple of months. Support for

non-GUI and I presume security updates will continue for 2 more

years, if that includes FireFox I'm tempted to stick with 10.04

for now - the real determining factor is going to be support for

10.04 by other providers (LibreOffice, VirtualBox, etc). But at

some point I'm going to have to upgrade and I do not look forward

to that - very disruptive as in half my stuff stops working until

I fix it. As far as I can tell most of what I need will be

fixable, just time-consuming redoing PPA's, installing new

versions etc. The main issue I can see is getting temperature

indicators to work with my hardware (an MSI KM4M-V motherboard with

a cheap NVidia graphics card).. I can so long as I stick with

Gnome Panel (I made a test USB install and verified that it can be

done with a bit of compiling and hacking), but the temp indicators

for LxPanel and Gnome Shell don't easily support adding an offset

- my sensor returns readings relative to "too hot" rather than an

actual temperature. The main reason I even need a temp sensor on

this machine is the fan speed driver appears to be in the bios

itself and doesn't always increase fan speed so I need to keep an

eye on the CPU temperature or it will shut down... tempted to

permanently fix that by adding a hardware fan speed control

circuit.. and it would probably be easier to add a thermometer I

can stick on the outside of the case rather than trying to hack in

software support - it gets old having to recompile or reinstall

the custom K10temp driver every time the kernel changes.

GUI-wise, a session running Nautilus and LxPanel seems to be the

most attractive for what I do - I like simplicity, the extra

screen space, and a normal app menu - but I'll have to trim down

the number of launchers in the panel to leave as much room as

possible for window buttons (when I'm working I typically have

many open windows and need to quickly select them with a click).

Gnome Shell with extensions or Gnome Panel are other possibilities

but then I'm back to a 2-panel setup. Gnome Panel has retract

buttons to reclaim space as needed but the new one isn't quite as

configurable as the old Gnome 2 panel (but it is less buggy other

than some fixable theming issues). Gnome Shell with certain

extensions looks better but is more bloated and so far haven't

found an extension that provides a normal app menu that doesn't

cover up half the screen with eye candy or require extra

clicking... when I'm working I don't care about visuals as much as

being able to get to my work apps, files, directories and open

windows as quickly as possible - I want my OS to do what I tell it

to then get out of my way, not be the main show. No OS is perfect

(especially stock - invariably they seem to be designed for

someone else), but there are many GUI choices for Linux-based

distributions.. and even more choice when one mixes up the pieces.

Surely one of the supported options will do fine once I get past

the upgrade and get used to it.

Ubuntu 12.04 on my main machine

[my main machine is at the moment a ZaReason Limbo 6000A tower

from March 2011 with a quad-core 3ghz AMD Athlon II X4 640

processor, since upgraded with a cheap NVIDIA GT210 graphics card,

8 gigabytes of RAM and a 1T hard drive in addition to the 500G

drive it came with.]

5/11/13 - Did it... upgraded my main production system from 10.04

to 12.04 using the update manager app. Went surprisingly well...

after making a full image backup of my hard drive and making sure

I could mount it, clicked the big fat upgrade button and let it do

its thing. Wasn't exactly automatic and there were glitches - had

to select the display manager (lightdm), decide whether to keep

certain config files (kept grub, replaced the rest), a few buggy

dialogs with bunches of unreadable blocks instead of text

(hopefully not saying anything important, hit enter enter to

clear), told it to keep "obsolete" software (some of that stuff I

need for manually installed apps), tons of error messages in the

console as it was upgrading but that's pretty much normal, upon

reboot got a scary message about disk with /tmp not available but

did nothing and it continued on its own, booted into Unity.

Hilarious stack of icons on the side, logged out and back into

LXDE (which was previously installed) to set about fixing stuff.

Used Synaptic to fix a couple of broken packages, to remove one

broken package had to make an empty directory it was complaining

it couldn't change to. Installed gnome-panel for a more sane UI

and set about putting my system back together... not much got

clobbered, reinstalled wine, AcroRead, Google Earth (which

installed ia32-libs, need that for many other things), fixed up

gnome-panel with the stuff I like including adding my modified

Ambiance theme, had to manually download and compile sensors

applet to get CPU/Video temp back. Started the upgrade process

about 9am, about 12 it was done, by 3pm had a functional system

that for the most part is just like Gnome 2. After a bit more

tweaking... [updated screenshot 5/13/13]

I can live with that (as in Yay It Worked).

It's not perfect - a few (expected) cosmetic glitches related to

theming, especially with GTK2 apps... messing around with

gtk-chtheme helps - but it came out a whole lot more perfect than

I was expecting. Actually, doing an update manager

upgrade-in-place on such a "used" system I was expecting an

unbootable mess and was prepared to do lots more fixing and maybe

delete it all and install from scratch, but thought I'd try the

update manager method first just in case it worked so I could

avoid having to redo all my custom stuff - and I was not

disappointed! I'm sure there will be things to fix or adapt to,

but it looks like most of my scripts, associations, virtual

machines, wine apps, dosemu, manually installed apps and other

stuff I depend on made it through the upgrade process - it would

have taken a week or more to get to this point with a fresh

install. The downside is lots of leftover cruft that no longer

applies but that's OK.. easier to ignore the extra stuff than to

figure out what is truly no longer needed.

5/13/13 - Replaced the screenshot from 5/11, not much changed but

now using indicator app complete instead of separate indicators

with a separate clock and having to run gnome-sound-applet in

startup apps to get a volume control (that conflicts with other

sessions), and configured a new session to use mutter instead of

no effects. I had issues using the Classic with effects (compiz)

session - the animations weren't exactly smooth and the desktop

pager broke after telling it to use 2 workspaces (clicking the 2nd

workspace resulted in an empty screen - no panel icons right-click

or anything). I'm not into heavy graphics but basic things like

shadows and simple animations are useful and I liked what I saw in

Gnome Shell (after adding lxpanel and tweaking it a bit), which

uses the mutter window manager. So installed the standalone mutter

package then copied the existing fallback session files and edited

them accordingly...

----- file /usr/share/xsessions/gnome-classic-mutter.desktop ---------------

[Desktop Entry]
Name=GNOME Classic (Mutter)
Comment=This session logs you into GNOME with the traditional panel using the mutter WM.
Exec=gnome-session --session=gnome-classic-mutter
TryExec=gnome-session
Icon=
Type=Application
X-Ubuntu-Gettext-Domain=gnome-session-3.0

----------------------------------------------------------------------------

----- file /usr/share/gnome-session/sessions/gnome-classic-mutter.session --

[GNOME Session]
Name=GNOME Classic (Mutter)
RequiredComponents=gnome-panel;gnome-settings-daemon;
RequiredProviders=windowmanager;
DefaultProvider-windowmanager=mutter
DefaultProvider-notifications=notify-osd
DesktopName=GNOME

----------------------------------------------------------------------------
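A minimal sketch of creating both files from a script, assuming the contents shown above. The function name write_mutter_session and its optional root-prefix argument are made up for illustration; the prefix is only there so the result can be inspected in a scratch directory before anything under /usr/share is touched.

```shell
#!/bin/bash
# Sketch: write the gnome-classic-mutter session files shown above.
# write_mutter_session and its ROOT argument are illustrative names,
# not part of gnome-session. ROOT defaults to "" (the real /usr/share,
# which needs root); pass a scratch directory to try it safely.
write_mutter_session() {
    local root="${1:-}"
    local xs="$root/usr/share/xsessions"
    local gs="$root/usr/share/gnome-session/sessions"
    mkdir -p "$xs" "$gs" || return 1

    cat > "$xs/gnome-classic-mutter.desktop" <<'EOF'
[Desktop Entry]
Name=GNOME Classic (Mutter)
Comment=This session logs you into GNOME with the traditional panel using the mutter WM.
Exec=gnome-session --session=gnome-classic-mutter
TryExec=gnome-session
Icon=
Type=Application
X-Ubuntu-Gettext-Domain=gnome-session-3.0
EOF

    cat > "$gs/gnome-classic-mutter.session" <<'EOF'
[GNOME Session]
Name=GNOME Classic (Mutter)
RequiredComponents=gnome-panel;gnome-settings-daemon;
RequiredProviders=windowmanager;
DefaultProvider-windowmanager=mutter
DefaultProvider-notifications=notify-osd
DesktopName=GNOME
EOF
}

# Example: write_mutter_session /tmp/session-test
```

Running it with a scratch directory first makes it easy to diff the output against the stock fallback session files before copying anything into place.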

Also mucked around using dconf-editor - mutter causes a very

minor cosmetic glitch in the left-side title bar menu button,

right-clicking the title bar brings up the same menu so set the

key /org/gnome/desktop/wm/preferences/button-layout to

":minimize,maximize,close" (no menu). Did other stuff with

dconf-editor to set up things like I want.. like adjusting the

clock format under /com/canonical/indicator/datetime and enabling

mutter's edge tiling under /org/gnome/mutter. I occasionally use

Unity 2D (the yellow panel icon turns it on and off) so set the

key /com/canonical/unity-2d/launcher/hide-mode to 2 to enable

dodge-windows. Used Gnome Tweak Tool to enable other stuff, mainly

affecting the Gnome Shell session but there's some overlap like

showing mounted drives on the desktop. Other tweaks include

uninstalling the overlay scrollbar packages (I want plain

scrollbars not popup widgets), added my CreateLauncher and

AddToApplications scripts (see 5/19/12 and 5/20/12 entries) to

nautilus-scripts to make up for removed features. All my existing

desktop shortcuts and file associations made it through the

upgrade process so haven't needed the scripts yet, but I'm sure at

some point I'll need to make a launcher or add a binary or script

to the list of apps that files can be associated to.
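The two dconf keys named above can also be set from a terminal with the dconf command instead of dconf-editor. This is only a sketch: apply_key is a made-up helper that prints each command unless APPLY=1 is set, and treating hide-mode as a plain integer is an assumption based on the value 2 mentioned above.

```shell
#!/bin/bash
# Sketch: the dconf-editor changes described above as dconf commands.
# apply_key is an illustrative helper; it only prints each command
# unless APPLY=1 is set, so it can be reviewed before changing anything.
apply_key() {
    local key="$1" value="$2"
    if [ "${APPLY:-0}" = "1" ]; then
        dconf write "$key" "$value"
    else
        printf 'dconf write %s "%s"\n' "$key" "$value"
    fi
}

# Values are in GVariant text format: strings need inner single quotes.
apply_key /org/gnome/desktop/wm/preferences/button-layout "':minimize,maximize,close'"
# Assumed to be an integer key (2 = dodge windows, per the text above).
apply_key /com/canonical/unity-2d/launcher/hide-mode 2
```

Printing first is deliberate, since a mistyped key path is silently accepted by dconf and just creates a key that nothing reads.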

So far so good... it's better than Gnome 2. Upgrading in place is

usually not recommended but in my case it kept the vast majority

of my customizations intact saving a huge amount of time, I know

how to fix glitches like conflicting/broken packages, and I made a

full backup image of my existing 10.04 disk before attempting the

upgrade so if it didn't work I could restore things to the way

they were or be able to pull stuff over after installing from

scratch. Despite dreading it for a year the upgrade seems to be a

phenomenal success, my system is snappier than it has ever been.

Other than a couple cosmetic bugs in gnome panel that I don't care

about (can't usefully make the panel transparent because widgets

use the background from the theme, and sometimes the text

temporarily overlays the icon on the first window button), a

somewhat different (better) widget set, a few differences that

make it work and look better, and more up-to-date apps (the main

reason for upgrading), it basically IS my old system with a shiny

new skin.

6/22/13 - After yesterday's update of mesa, the mutter window

manager no longer works properly, breaking my gnome-classic-mutter

session and also Gnome Shell which now just loads a dysfunctional

desktop with no panels etc. Mutter works (and restores classic

functionality) if I run the command mutter --replace in a

terminal, but the same command run from a GUI tool or

mystartapps.sh does not work.. has to be run from an open

terminal. So... until whatever broke stuff gets unbroken, using

plain Gnome Classic with metacity. At least it's faster without

effects...

This might be a malfunction in my video card or maybe the NVIDIA

driver - mutter fails horribly in VirtualBox now (totally garbled

graphics) and that virtual system has not been updated. I have

been having video-related flakes lately - sometime back the system

started intermittently failing to bring up the GUI (after a kernel

update), a problem that was solved by installing the latest NVIDIA

driver (which wasn't exactly easy - I failed to uninstall the

previous version first which caused all sorts of issues). Now this

issue - it seems

correlated with the mesa update but with no easy way to roll back

updates it's hard to test the theory, could just be coincidence.

Regardless, things were humming along fine, then after an update

mutter no longer worked unless run from a terminal - it's hard to

imagine what sort of hardware failure would care whether or not my

window manager ran from startup scripts or a terminal, and the

issues happened after updates, so I'm leaning towards some sort of

software issue. The new software might simply not be fully

compatible with my cheap PNY/NVIDIA GeForce 210 card using the

NVIDIA driver (the nouveau driver doesn't work right on this

machine configuration). Might be time to get a better video

card...

6/23/13 - Problem sorted (for now).. basically just reinstalled

the NVIDIA driver - should have thought of that but never had to

do that before - apparently some updates stomp on the driver

configuration (still weird that mutter would run from a terminal

but not from GUI startup). To track down the problem I found the

error in .xsession-errors and googled.. sure enough users

reporting Gnome Shell not starting after some update then

reinstalling the driver to fix it. The procedure is fairly simple

- ctrl-alt-F1 to get a text screen and log in, run "sudo service

lightdm stop" to kill X, change to the directory containing the

driver then sudo ./NVIDIA-Linux-[press tab to complete the

filename], follow the prompts to uninstall and reinstall

(including the dkms module) then sudo reboot (might help to cross

fingers). Part of the issue is probably because the driver is

closed-source so developers can't fully understand or fix all the

interactions... another part of the issue might be an

incompatibility with vesafb as noted in dmesg output but so far I

haven't found an easy way to disable it (lots of conflicting

low-level info and I'm kind of getting tired of breaking then

having to fix my system, plus without vesafb I'd likely uglify my

full-screen text terminals), yet another issue seems to be

poorly-tested updates that muck with stuff that should be left

alone. Perhaps the solution is still to get a better/more

compatible video card... but which one? [...]
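The reinstall procedure above can be written down as a short script to keep next to the driver file. This is a sketch only: run and reinstall_nvidia are made-up names, and by default each step is just printed rather than executed, since these commands stop the GUI and reboot the machine.

```shell
#!/bin/bash
# Sketch of the reinstall steps above, meant for a text console
# (ctrl-alt-F1). run() and reinstall_nvidia() are illustrative names.
# By default every step is only printed; set DRY_RUN=0 to execute.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

reinstall_nvidia() {
    local installer="$1"             # path to the downloaded NVIDIA-Linux-*.run file
    run sudo service lightdm stop    # kill X before touching the driver
    run sudo "$installer"            # follow the prompts (uninstall, reinstall, dkms)
    run sudo reboot
}

# Example (prints the three commands without running them):
# reinstall_nvidia ./NVIDIA-Linux-[your version].run
```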

7/6/13 - Disabling the conflicting vesafb driver is fairly

easy... edit /etc/default/grub (back it up first) and change the

lines...

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"

GRUB_CMDLINE_LINUX=""

...to...

GRUB_CMDLINE_LINUX_DEFAULT=""

GRUB_CMDLINE_LINUX="video=vesa:off vga=normal"

...then run update-grub to update the boot stuff. However, this

blows away the pretty Ubuntu splash screen while booting leaving

just a lot of text flying by (which doesn't bother me.. basically

the same as dmesg output), and also makes virtual full-screen

consoles (ctrl-alt-F1 etc) use an 80x24 screen instead of nice

smaller fonts. This gets rid of the vesafb warning from the NVIDIA

driver (making me feel a bit better) but otherwise can't tell any

difference... there is still the bug where the first double-click

of a session doesn't work (regardless of the GUI environment,

workaround is do it again or right after booting highlight the

file first - this bug does not happen on my HP mini 110 or under

VirtualBox), and VirtualBox won't run anything that uses mutter

(Gnome Shell, Cinnamon, custom sessions etc). I don't care that

much about the double-click bug (only an issue right after

booting) but it would be nice to fix the VirtualBox issue as

that's how I experiment with different configurations before

trying them on real hardware. There might not be a solution other

than get another video card.. seems that NVIDIA is not fully

compatible with Ubuntu (and likely other distros), the mutter

window manager seems to be picky about what it will run on, and

VirtualBox has always had issues running 3D or muttery things on

this machine (just went from semi-broken to fully-broken). Modern

PC's are very complex beasts with a huge amount of variation -

it's almost a miracle that modern software works at all -

especially free software - so not sweating a few bugs (just

documenting them), it's just a matter of figuring out what works

and what doesn't, fixing stuff if possible and learning to live

without fancy stuff that doesn't work and in the grand scheme of

things I don't really need anyway.
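A minimal sketch of making the two /etc/default/grub changes with sed rather than by hand. The function name disable_vesafb is made up, and it takes the file to edit as an argument so it can be tried on a copy first; it assumes both GRUB_CMDLINE lines already exist in the file, as they do in the stock version shown above.

```shell
#!/bin/bash
# Sketch: make the two /etc/default/grub changes described above.
# disable_vesafb is an illustrative name. It takes the file to edit as
# an argument so it can be tried on a copy first; sed -i.bak keeps the
# original as FILE.bak.
disable_vesafb() {
    local file="$1"
    sed -i.bak \
        -e 's/^GRUB_CMDLINE_LINUX_DEFAULT=.*/GRUB_CMDLINE_LINUX_DEFAULT=""/' \
        -e 's/^GRUB_CMDLINE_LINUX=.*/GRUB_CMDLINE_LINUX="video=vesa:off vga=normal"/' \
        "$file"
}

# Example, trying it on a copy before the real file:
# cp /etc/default/grub /tmp/grub.test && disable_vesafb /tmp/grub.test
# On the real file it needs root, followed by: sudo update-grub
```

Restoring the .bak copy and running update-grub again should bring back the splash screen and the smaller console fonts if the change is not wanted.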

[update... got mutter and Gnome Shell working in VirtualBox

again.. see below]

On the other hand, VirtualBox works quite well for Windows 7 when

2D/3D acceleration is turned off and that's what really counts

since that's how I run Altium Designer to make circuit boards - it

must work or I'd be stuck with having to set up a dedicated

machine for it and I really don't want to do that. Especially

considering the last time I had a dedicated Windows box for it I

had bugs bugs bugs and it eventually crashed and burned taking out

the whole damn OS, not to mention the hassle of having to transfer

files between the Windows box and my main work machine. Running W7

virtually solved pretty much all the issues - backup is just a

matter of copying a directory, Altium Designer works MUCH better

when all it sees is plain video (sure enough if I enable VB's

2D/3D acceleration Altium crashes the OS as soon as it runs), and

VirtualBox's shared directory feature works fine for transferring

files back and forth. To run Windows 7 in a plain window without

VirtualBox's menus to maximize screen area I use a shortcut

launcher that runs the command...

VBoxSDL --termacpi --startvm "Win7pro"

...if for some reason I need to run it with VB's menus I can just

start it from the main VirtualBox program. I've been running W7

from a 40 gig virtual disk but space was getting tight and it was

time to attempt an upgrade to the new version of Altium (which due

to upgrade risks needs to be installed in parallel with the old

version), plus I might need to install other huge stuff like maybe

SolidWorks (big maybe, will have to see how it reacts to plain

video), so needed to increase the disk size. The existing virtual

disk was dynamic, so I could use the command...

VBoxManage modifyhd Win7pro.vdi --resize 80000

...(in the virtual machine directory) to resize the disk to about

80 gigs. Reportedly this doesn't work if the virtual disk was set

up to be a fixed size. This command only adds unallocated disk

space, to make use of the extra space I attached an Ubuntu live CD

image and booted it then used gparted to expand the Windows

partition into the extra space, removed the CD image from the

virtual CD drive and rebooted. Windows went through a CHKDSK thing

to verify the file system but otherwise didn't complain and now

I've got lots of extra disk space. Installing AD 13 went well,

seems to be an improvement over AD 10 but without changing much

(yes I hate change especially when I have work to do and have

already invested huge amounts of time learning how to make the

stuff work... the only changes I want to see are bug fixes and

improvements).
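A note on the resize number: the --resize value is in megabytes (1024 to the gigabyte), so 80000 comes out a little over 78 GiB. A small sketch for getting an exact figure; gib_to_mb is a made-up helper name.

```shell
#!/bin/bash
# Sketch: VBoxManage's --resize value is in megabytes, so 80000 is a
# little under 80 GiB. gib_to_mb is an illustrative helper for getting
# an exact figure.
gib_to_mb() {
    echo $(( $1 * 1024 ))
}

gib_to_mb 80    # prints 81920

# Example (not run here):
# VBoxManage modifyhd Win7pro.vdi --resize "$(gib_to_mb 80)"
```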

Other software notes...

Recently Google Earth started failing with "invalid http request"

when I entered an address, so got the new version 7 deb from the

Google Earth page and installed it. Didn't work... uninstalled it,

removed the .googleearth directory from my home dir, reinstalled

the deb, works now. Much improved, the previous version had really

ugly monospaced fonts but now it looks fine.

Sometimes I need to save video and audio playing on my computer

and for some things browser-based tools won't work. Previously I

tried to use a program called RecordMyDesktop but it never worked

right (no audio and now it doesn't work at all), but found

something called Kazam Screencaster in the repositories, works

great once I set it to use H264/MP4 encoding for video (VP8/WebM

didn't work) and "monitor of built-in stereo audio" (otherwise no

sound). The GUI makes it easy to select the area of the screen to

record, has an adjustable delay before starting and adds a "tray"

icon to gnome panel to stop the recording and prompt for a

filename to save the video. Haven't tested it much but seems to

work, kept up with 30 frames a second while encoding a 360x200

view window. I'm sure at some size point the frame rate will have

to be reduced, no option to dump raw uncompressed video.

7/8/13 - [edited] Was playing around and noticed that mutter was

still working on my other older/stuffed-up 12.04 VirtualBox VM

(but Gnome Shell just went to fallback)... I hadn't updated that

system in 2 months so backed it up then applied all updates

(expecting failure), but mutter still worked (but not Shell).

After reinstalling the VB guest additions (have to after a kernel

update to get a usable system), found that Gnome Shell worked too.

Cool. Updated my newer/less-stuffed-up 12.04 VM... didn't make any

difference, mutter still hopelessly garbled. Updated the VB guest

additions (from 4.2.12 to 4.2.14), now mutter works, so does Gnome

Shell (mostly.. on my newer 12.04 VM with splash/lightdm sometimes

it fails to launch, and in both VM's the overview still has a

garbled background but it has always done that and I don't use or

care about that feature). Basically the fix was to update the

guest additions.

9/7/13 - Ever since upgrading my main system from 10.04 to 12.04

it's had a minor bug when first starting the system - the first

double-click of a desktop icon failed unless I highlighted an icon

then clicked the background to unhighlight first (or just

double-clicked twice), after that it was fine but I noticed that

sometimes after running VirtualBox the bug would return. Didn't

matter what GUI or window manager I used. None of my other systems

did this (real or virtual) and couldn't find any reports of anyone

else having that problem, figured it was probably some X thing

related to my cheap video card, or maybe something to do with USB

or my cheap generic mouse and just expected and ignored it. When

it comes to complex operating systems, when that's the main bug

it's probably in pretty good shape! But still whenever the kernel

was updated I'd check to see if the double-click bug was fixed

(instead of doing the click dance after booting to prime the GUI),

and after today's update to 3.2.0-53 the bug seems to be gone. A

little thing but one step closer to perfection, a few seconds

saved each day. There is still an occasional apport system error

dialog that pops up because some service I usually don't need had

a problem but these tend to take care of themselves (and provide

the devs with debugging info), usually involves some leftover

cruft or service I don't need anyway, and it doesn't happen often

enough to apply the real fix (remove apport:-).

So now, as far as the GUI itself is concerned.. I can't think of

anything important I want to fix. Probably a rare moment that will

pass! Sure there are plenty of app bugs (that will probably always

be the case), but the combination of the Ubuntu 12.04 core,

Nautilus 3.4 and other Gnome 3.4 components, Gnome Panel, the

Mutter WM, a self-compiled indicator-sensors package (to get

semi-accurate CPU/NVIDIA temperature readings), and a few hacks to

the Ambiance theme, gives me a GUI that works pretty much exactly

like I want (works like Gnome 2) and doesn't slow me down with

unnecessary graphics effects.

10/21/13 - My initial double-click bug is back.. but whatever,

used to "priming" things by clicking around when I start up. I'm

thinking it's a result of some odd interaction between the NVIDIA

driver, the X system and/or Nautilus based on an observation after

an "incident". A couple of days ago applied a bunch of updates

including Xorg and afterwards nothing using OpenGL (mutter,

compiz, glxgears, etc) would work, had to boot into a plain

metacity gnome panel session (yay for multiple sessions!). No

double-click bug - a clue that it's something to do with the

NVIDIA driver. Problem was the update cleared my xorg.conf file

causing the NVIDIA driver to not be properly loaded. Not sure if

this is a bug as I'm currently using the NVIDIA driver from their

website, not the repository version which may (or may not) account

for this lack of consideration. Not knowing the cause yet I

proceeded to extend foot and shoot by installing the repo driver -

don't do that, result is no GUI and having to reinstall the driver

from a text console.. all I would have had to do is restore the

xorg.conf from a backup. To properly convert to the repo driver I

(theoretically) should run the installer from the website with the

--uninstall option then apt-get install nvidia-319 to get the

latest 319 version. But mucking around with video drivers is

nerve-racking so putting it off, it's working now.
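Since the whole incident came down to a clobbered xorg.conf, a dated backup taken before applying updates would have made the fix a one-line restore. A minimal sketch, with made-up function names and a made-up ".bak-DATE" naming scheme:

```shell
#!/bin/bash
# Sketch: keep a dated copy of a config file before applying updates and
# put the newest copy back afterwards. backup_conf/restore_conf and the
# ".bak-DATE" naming are illustrative, not an Ubuntu convention.
backup_conf() {
    local file="$1"
    cp -p "$file" "$file.bak-$(date +%Y%m%d-%H%M%S)"
}

restore_conf() {
    local file="$1" newest="" f
    # Globs expand in sorted order and the date stamps sort
    # chronologically, so the last existing match is the newest backup.
    for f in "$file".bak-*; do
        [ -e "$f" ] && newest="$f"
    done
    [ -n "$newest" ] || { echo "no backup found for $file" >&2; return 1; }
    cp -p "$newest" "$file"
}

# Example (run as root for the real file):
# backup_conf /etc/X11/xorg.conf     before updating
# restore_conf /etc/X11/xorg.conf    if the update clears it
```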

The PPA version of LibreOffice 4.1 has broken EPS import. To make

docs for my circuits I need to extract the schematic from a PDF

file output by Altium Designer and get it into a rotated EPS file

to import into LibreOffice, using the following commands...

pdftops -noembtt -f 1 -l 1 "[name].pdf" "[name]_schem.ps"

ps2eps -R + < "[name]_schem.ps" > "[name]_schem_rotated.eps"
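The two commands above can be wrapped in a function so that one call does the whole conversion. This is a sketch under the assumption that the input name ends in .pdf; pdf_schem_to_eps is a made-up name, and pdftops and ps2eps need to be installed.

```shell
#!/bin/bash
# Sketch: wrap the two commands above so one call converts page 1 of a
# PDF into a rotated EPS. pdf_schem_to_eps is an illustrative name;
# pdftops and ps2eps must be installed.
pdf_schem_to_eps() {
    local pdf="$1"
    local name="${pdf%.pdf}"    # strip the .pdf extension
    pdftops -noembtt -f 1 -l 1 "$pdf" "${name}_schem.ps" || return 1
    ps2eps -R + < "${name}_schem.ps" > "${name}_schem_rotated.eps"
}

# Example: pdf_schem_to_eps mycircuit.pdf
# leaves mycircuit_schem.ps and mycircuit_schem_rotated.eps alongside it
```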

Some time ago, about when 4.1 came out, EPS import failed

with a "graphics filter not found" error, to get by I installed

OpenOffice from the website and associated it with DOC and ODT

files, but I like LibreOffice better. Bug report says fix

released, but not seeing it in the PPA.

Not sure what the holdup is, got work to do so removed the PPA

version and installed the website version of LibreOffice, problem

solved and it's off the update system. Newer versions of software

often do fix problems and work better, but for important apps I

need to be able to roll back updates if something breaks. An

example is SVG files, previously I had tried to import SVG

graphics of circuit boards produced by the gerbview program in an

attempt to make sharp zoomable docs, and it didn't work right

(missing pads etc). Now it works perfectly.

From the I Want To Keep My Obsolete Software That Works Just Fine department...

I still have a few dos apps and batch files that I use (mainly

QBasic stuff) which I run using DosEmu/FreeDos. Despite being over

2 decades old, for one-off stuff it's still easier to fire up

QBasic and just do it rather than editing, compiling, repeat until

it compiles, repeat until it produces the output I'm looking for -

with QBasic all that is in one app, having used it for a long time

I know it well, and when I'm working speed to answer counts, looks

don't even figure (nobody else will see it). Previously if I had

to process data I'd have to copy it to my DosEmu file tree, launch

DosEmu and do whatever, copy the data back to wherever I was

working then clean out the temp stuff. If I drop a symlink into

the DosEmu tree then dos apps can access that target dir, so I

wrote this "dosprompt" script...

#!/bin/bash
# launch a dosemu dos prompt in the current directory
dosroot=~/MYDOS   # location of dos filesystem dosemu starts in
td=dp.tmp         # temp dir in dos file system
pn=`pwd`
mkdir $dosroot/$td
ln -s "$pn" $dosroot/$td/cdir   #link to current dir
echo >$dosroot/$td/run.bat "@echo off"
echo >>$dosroot/$td/run.bat "cls"
echo >>$dosroot/$td/run.bat "cd \\$td\\cdir"
xdosemu -E "\\$td\\run.bat"
rm $dosroot/$td/run.bat
rm $dosroot/$td/cdir
rmdir $dosroot/$td

The script lives in my Nautilus Scripts directory so wherever I'm

at I can right-click, Scripts, dosprompt and it boots DosEmu with

that directory current (named dp.tmp\cdir but that doesn't

matter). DosEmu doesn't provide a way to launch in a particular

directory, so instead the script creates a batch file that changes

to the symlinked directory and runs the batch with DosEmu using

the -E option to tell it to stay running after the batch exits.

Most of the time I'm running QBasic programs so if the program

already exists it's nice to just run it (saves having to type

qbasic /run program.bas), so using similar techniques I made this

"qbasic_pause" script...

#!/bin/bash
# runs a BASIC file in QBASIC using dosemu
# current directory mapped so BASIC program will have
# access to all files in the current dir and subdirs
dosroot=~/MYDOS   # location of dos filesystem dosemu starts in
td=runbas.tmp     # temp dir in dos file system
if [ -e "$1" ]; then
  bn=`basename "$1"`
  pn=`dirname "$1"`
  if [ "$pn" == "." ]; then pn=`pwd`;fi   # in case run from terminal
  if [ -n "$bn" ]; then
    mkdir $dosroot/$td
    ln -s "$pn" $dosroot/$td/dir.tmp   #link to current dir
    echo >$dosroot/$td/runbas.bat "@echo off"
    echo >>$dosroot/$td/runbas.bat "cls"
    echo >>$dosroot/$td/runbas.bat "cd \\$td\\dir.tmp"
    echo >>$dosroot/$td/runbas.bat "qbasic /run $bn"
    echo >>$dosroot/$td/runbas.bat "echo."
    echo >>$dosroot/$td/runbas.bat "pause"
    xdosemu "$dosroot/$td/runbas.bat"
    rm $dosroot/$td/runbas.bat
    rm $dosroot/$td/dir.tmp
    rmdir $dosroot/$td
  fi
fi

This one is designed to have BAS files associated to it but can

also be used as a Nautilus script. To perform the association I

used Assogiate (aka "File Types Editor") to create a mime type for

*.bas files with text/plain as the parent type, then used my

AddToApplications script to create a desktop file for the

qbasic_pause script so Gnome can be told to add it to

associations for *.bas files (I miss being able to associate

directly to a script but I guess that made too much sense..). Now

I can right-click a QBasic file and open with qbasic_pause to run

it with the current directory set to the location of the QBasic

program, so no problem finding data files.
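For reference, the desktop file needed for an association is small. The following is a sketch of the general idea only, not the AddToApplications script itself: make_app_entry, its output-directory argument and the example MIME type name are all made up, and the real type name is whatever was created in Assogiate.

```shell
#!/bin/bash
# Sketch: create a .desktop file so a script shows up in "Open With".
# make_app_entry, the output directory argument and the example MIME
# type below are illustrative; this is not the AddToApplications script.
make_app_entry() {
    local script="$1" mime="$2" dir="${3:-$HOME/.local/share/applications}"
    local name
    name=$(basename "$script")
    mkdir -p "$dir" || return 1
    # %f passes the clicked file to the script;
    # NoDisplay=true keeps the entry out of the app menu.
    cat > "$dir/$name.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=$name
Exec="$script" %f
MimeType=$mime;
NoDisplay=true
Terminal=false
EOF
}

# Example (hypothetical path and MIME type name):
# make_app_entry "$HOME/bin/qbasic_pause" text/x-example-basic
```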

True I probably spent more time figuring out these scripts (then

writing about them) than I save from using them.. but that's fine

since time spent when not working is "free" and when I do need to

use dos in the course of working I can just do it without being

distracted by extra file copies etc, for me that makes it well

worth the effort. If I could find modern software that works

better for quickly writing code (I didn't say good-looking code,

just code) I'd use it, but so far for simple input, process,

output stuff nothing I've found beats the old QBasic (QB64 doesn't

let me run it or a program from anywhere so it's out), and if I

need to distribute the app I can use FreeBasic to compile it for

Linux or Windows.

What else is going on...

I recently installed a mostly stock Ubuntu 12.04.3 (Unity and

all) to a friend's HP110 netbook - same model that I have but mine

usually runs a custom session I made using Nautilus and lxpanel.

We'll see how she adapts to Unity.. for normal-user

one-app-at-a-time use it actually works quite well, the global

menu saves vertical screen space. My main beefs with the global

menu are it's invisible unless the mouse is over it, and when an

app is in a smaller window then the menu should follow the window,

not stay at the top (when visible). In my opinion anyway. Added a

few essentials like flash and codecs, and for me Synaptic, removed

extra launcher icons leaving just the file manager and FireFox.

Had a minor issue when installing that required googling...

installed dual boot to keep the original (Ubuntu 8.04-based) HP

Mobile system, when it got to the partition size screen it didn't

label which side was which.. the new system is on the right so to

make it bigger have to slide the slider to the left. Otherwise

installation was uneventful and other than a black screen and

stray message when booting (didn't do the framebuffer fix) the new

system seems to work fine and the old system still works for a

backup. Sort of.

Got Netflix under Ubuntu.. followed the directions

on the webupd8 site.. basically it's a plugin that uses a custom

version of wine to run Silverlight as if it were a native plugin.

Bloated but clever and so far works.. under Firefox once after

pausing I had to refresh the page to get it to continue but

otherwise no issues once I figured out the user agent string...the

User Agent Switcher plugin didn't work under either Chromium or

Firefox, the User Agent Overrider plugin works. Netflix also works

in Windows XP under VirtualBox in case the complicated wine-based

system goes down. But native is better. I really want something

like Apple TV and (when I want to) bypass my computer altogether..

kind of inconvenient to do anything else on a computer when it's

playing a full-screen video.. but still it's nice to be able to

play Netflix videos. Got Hulu too, it's flash-based and has always

worked for me under Linux. Something will eventually have to be

done about that as flash is no longer supported under Linux -

unless using the Google Chrome browser (but pipelight now supports

running the Windows version of flash so that might be a solution

if the current Linux version of flash becomes unworkable). HTML 5

is proposing a controversial solution - basically a plugin system

for DRM videos but I'm guessing it'll only support closed systems

like Windows. What we really need is an open-source

platform-independent solution that lets content makers do their

DRM stuff but in any operating system. Yeah that's a tall order..

but perhaps an interface layer with a "generic" x86 binary blob

within.. blob supplied by the service. Like almost every other

(especially Linux) user, I don't like DRM, but I do like good

content and if those providing the content demand DRM.. well here

we are. Thankfully there are clever hackers that can figure out

how to make stock Windows code run under Linux.

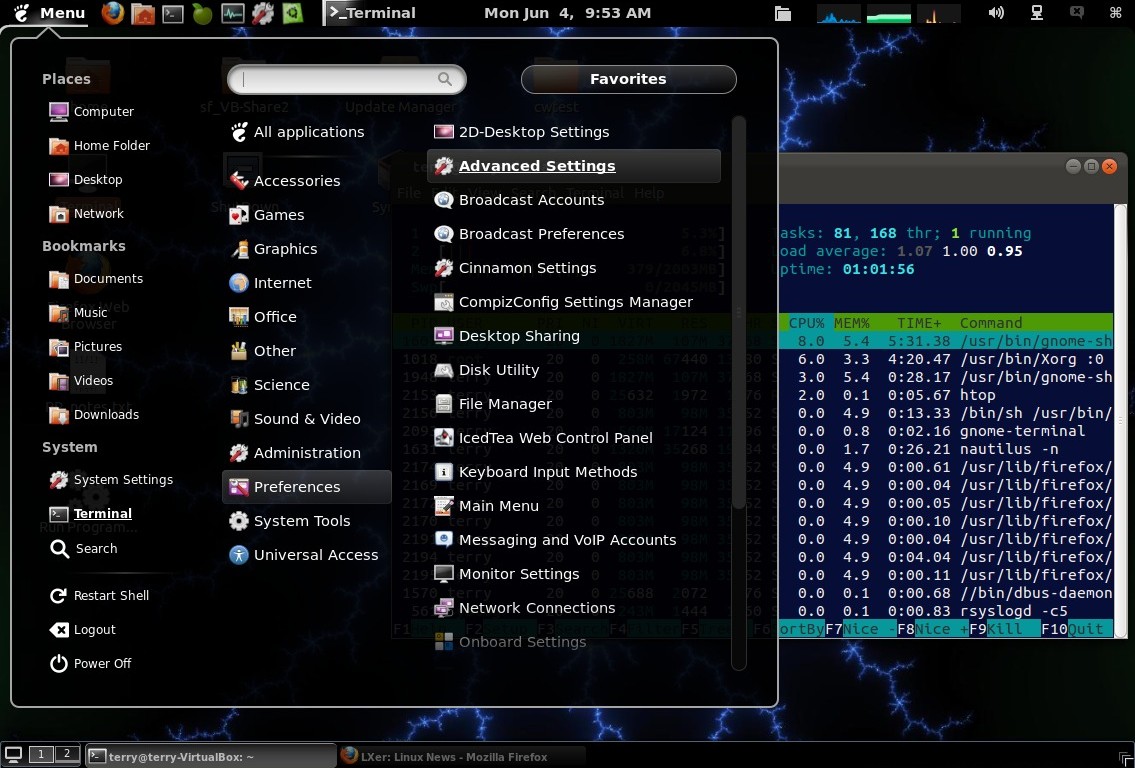

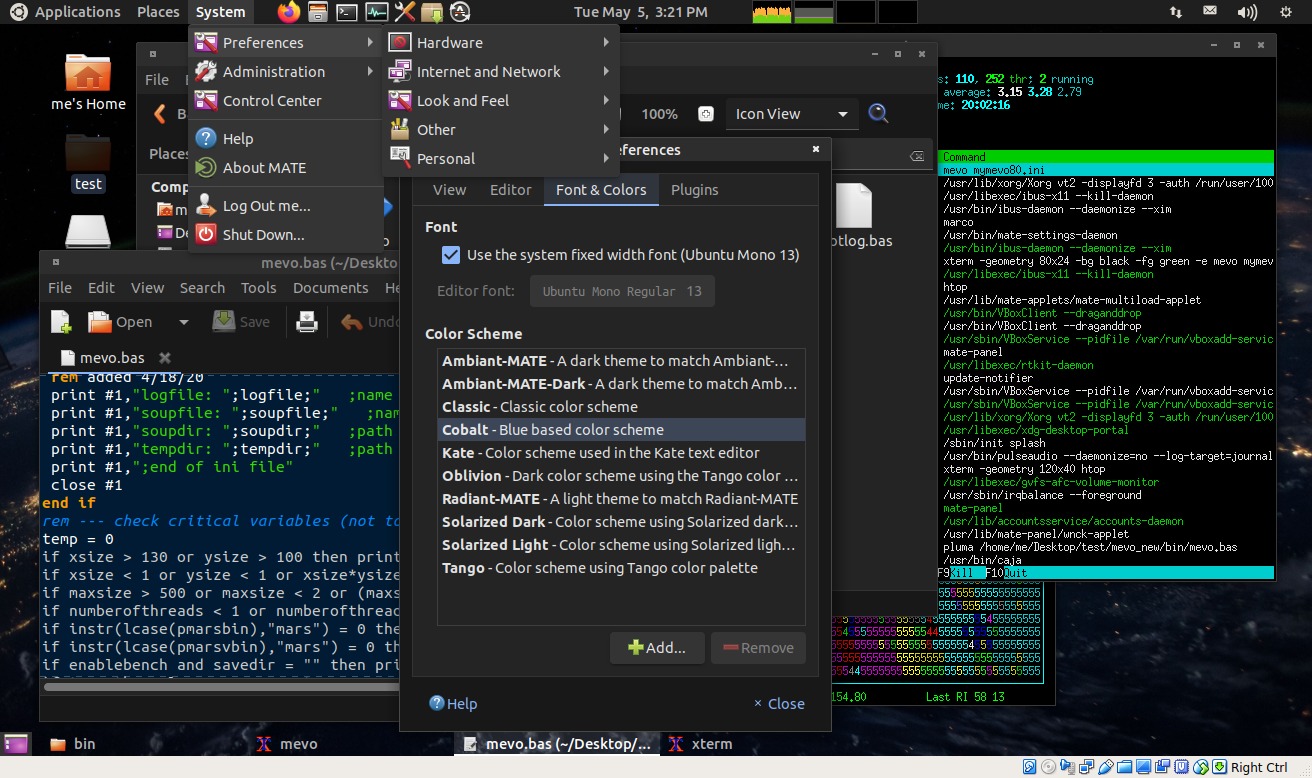

11/17/13 - Fooling around with Pear OS 8.. here's what my

VirtualBox install currently looks like...

The base system is Ubuntu 13.04 [corrected], running a customized

gnome-panel "fallback" session with a custom dock called "plank".

I added the "slanted e" icon next to the pear on the top bar, it's

a standard gnome-panel drop-down app menu because for me the stock

"launchpad" app launcher is full screen (sometimes, other times

under VB it's offset and doesn't show everything) and has no

categories.. not for me. To add the normal menu I ran dconf-editor,

went to org|gnome|gnome-panel|lockdown and unchecked locked-down,

after which I can alt-right-click and configure the panel. The global menu

can also be disabled/re-enabled this way. Many installed apps

aren't visible, had to run alacarte and unhide them to show in

launchpad or the gnome panel menu. The system is a bit flaky

running under VirtualBox.. sometimes the background goes away and

the window manager crashes. The plank dock seems a bit fragile,

when messing with the autohide settings it permanently hid

itself.. had to install gconf-editor and poke around to find the

setting to make it show again. It's a bit too easy to lose dock

icons.. for apps in a window easy to get back but if say launchpad

is accidentally undocked then [...back up the launchers in

~/.config/plank/dock1/launchers, or go to /usr/share/applications

and drag the launchpad icon to the dock]. The dock looks cool but

if I were using this on a real system I'd uncheck it in startup

apps and replace it with lxpanel (that works, just add to startup

apps - makes it easy to select which panel to use) or add another

gnome-panel for a bottom task bar. Actually, if I did use this

setup on a real machine I'd wait for the next LTS, and then

probably start with a stock Ubuntu install and add in the bits

that I want - basically the theme. Still it's nice to see what

people can do with the Gnome 3 infrastructure.
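
The launcher backup mentioned above can be sketched as a small function. This is only a sketch: the source path is the one from these notes, but the function name and the default backup location are made up for illustration.

```shell
#!/bin/bash
# Sketch: copy plank's launcher files somewhere safe so a lost dock
# icon can be put back later.
# Assumptions: backup_plank_launchers and the default destination
# ~/plank-launchers-backup are hypothetical names, not part of plank.
backup_plank_launchers() {
    local src="$HOME/.config/plank/dock1/launchers"
    local dest="${1:-$HOME/plank-launchers-backup}"
    if [ ! -d "$src" ]; then
        echo "No launchers directory at $src" >&2
        return 1
    fi
    # "$src/." copies the directory contents, including hidden files
    mkdir -p "$dest" && cp -a "$src/." "$dest/"
}
```

Run backup_plank_launchers once the dock is set up the way you want it. To restore a lost icon, copy the matching file from the backup directory back into the launchers directory.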

Rather (besides just being curious), I'm using the system to see

what's changed in the new[er] Ubuntu. Perhaps in a slightly bent

format but that's OK as I bend my system up pretty good too. So

far other than some flakiness with the gala WM (based on mutter)

when running under VirtualBox (mutter is also flaky under VB),

and some usage issues with plank and launchpad - both optional

apps - the core system seems fairly stable. But there are a few

core issues - the biggest one I've noticed so far is that videos play back in

black and white (not single-color, blended black and white) - [...

aha - it's an incompatibility with the new mutter.. do metacity

--replace and videos now in color.. in the new daily ubuntu doing

mutter --replace causes the same black and white bug. It's a

VirtualBox thing.]

Another issue that has an easy fix - I found that I could not

make custom associations by adding .desktop files to

~/.local/share/applications, turns out that the desktop file must

contain NoDisplay=false for it to show up in the association list

- fixed my AddToApplications script in the 5/20/12 entry. I can

see why - it makes the list more trim by eliminating probably

useless choices - but still a change from 12.04 that took some

figuring out. File associations are extremely important to me so

glad it was just a simple change.
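
A quick way to spot which custom desktop files still need the fix is to list the ones without a NoDisplay=false line. This is a minimal sketch; the function name is made up, and it only checks for the literal line at the start of a line.

```shell
#!/bin/bash
# Sketch: print every .desktop file in a directory that does not
# contain a NoDisplay=false line.
# Assumption: list_missing_nodisplay is a hypothetical helper name.
list_missing_nodisplay() {
    local dir="${1:-$HOME/.local/share/applications}"
    local f
    for f in "$dir"/*.desktop; do
        [ -e "$f" ] || continue      # the glob matched nothing
        grep -q '^NoDisplay=false' "$f" || echo "$f"
    done
}
```

Called with no argument it scans ~/.local/share/applications; pass a directory to check somewhere else.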

In other happenings, updated my AmbianceMod

theme so that scroll bars in gtk2 apps will show up better.

11/18/13 - Here's a CreateShortcut script for nautilus-scripts

(derived from the AddToApplications script)...

    #!/bin/bash
    # CreateShortcut - 11/18/2013 WTN
    # Makes desktop files for running scripts or binaries
    # Put in ~/.gnome2/nautilus-scripts or in a path directory
    # to use from a command line. Usage as a nautilus-script..
    # Right-click executable, select Scripts CreateShortcut
    # Enter name for the shortcut, OK or cancel to quit
    # Select Yes or No when prompted to run app in a terminal
    # Launcher will be created without an icon, to select an
    # icon right-click, properties and browse for an icon.
    #
    prefix="launcher_"  # new desktop files start with this
    if [ "$1" != "" ];then  # if a command line parm specified..
     execparm=$(which "$1")  # see if it's a file in a path dir
     if [ "$execparm" == "" ];then  # if not
      execparm=$(readlink -f "$1")  # make sure the full path is specified
     fi
     if [ ! -x "$execparm" ];then  # make sure it's an executable
      zenity --title "Create Shortcut" --error --text \
       "The specified file is not executable."
      exit
     fi
     if echo "$execparm" | grep -q " ";then  # filename has spaces
      execparm=\""$execparm"\"  # so add quotes
     fi
    else
     # no parm specified, prompt for the Exec command
     # no error checking, whatever is entered is added to the Exec line
     execparm=$(zenity --title "Create Shortcut" --entry --text \
      "Enter the command line for the launcher")
    fi
    if [ "$execparm" == "" ];then exit;fi
    nameparm=$(zenity --title "Create Shortcut" --entry --text \
     "Enter a name for this launcher")
    if [ "$nameparm" == "" ];then exit;fi
    if zenity --title "Create Shortcut" --question --text \
     "Run the app in a terminal?";then
     termparm="true"
    else
     termparm="false"
    fi
    # now create the desktop file
    filename="$prefix$nameparm.desktop"
    echo > "$filename"
    echo >> "$filename" "[Desktop Entry]"
    echo >> "$filename" "Type=Application"
    echo >> "$filename" "NoDisplay=false"
    echo >> "$filename" "Name=$nameparm"
    echo >> "$filename" "Exec=$execparm %f"
    echo >> "$filename" "Terminal=$termparm"
    chmod +x "$filename"  #make executable

Another way to make launchers is this script (what I used to

use)...

    #!/bin/bash
    # quote the directory so paths containing spaces still work
    gnome-desktop-item-edit --create-new "$(pwd)" &

...however that requires gnome-panel and makes me browse for the

executable. I thought hmm.. would be easier to just enter a name

and choose terminal or no terminal, almost exactly what the

AddToApplications script does except put the desktop file in the

current directory and make it executable, I'll drag it to where I

want it. A few minutes of editing, done. It doesn't set the icon

(on my system the default is the same as for binaries), easy

enough to right-click properties and pick an icon if I want

something different.
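
For use from other scripts, the file-writing step of CreateShortcut could be pulled out into a function that takes its values as arguments instead of prompting with zenity. This is only a sketch: the function name and argument order are made up, and unlike the original it does not write a blank first line or add quotes around commands containing spaces.

```shell
#!/bin/bash
# Sketch: write a launcher .desktop file in the current directory
# without any prompts.
# Assumption: write_launcher NAME COMMAND [true|false] is a
# hypothetical interface; the third argument sets Terminal=.
write_launcher() {
    local name="$1" cmd="$2" term="${3:-false}"
    local filename="launcher_${name}.desktop"   # same prefix as CreateShortcut
    [ -n "$name" ] && [ -n "$cmd" ] || return 1
    cat > "$filename" <<EOF
[Desktop Entry]
Type=Application
NoDisplay=false
Name=$name
Exec=$cmd %f
Terminal=$term
EOF
    chmod +x "$filename"   # make executable
}
```

For example, write_launcher "My Tool" /usr/local/bin/mytool true (a made-up name and path) would create "launcher_My Tool.desktop" set to run in a terminal.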

11/19/13 - A few things. I've been tempted a time or two to set

up a normal blog where each "thought" gets its own file - but for

now probably not. Several reasons come to mind.. My notes files

are references on how I set up my systems (primarily for me) and

it works better when it's one big page so I can scan through it

and find my favorite scripts etc. Regular blogs tend to get

regurgitated ad nauseam through the blogosphere and while I don't

imagine my humble words being important enough to garner such

treatment, I also don't want to encourage it either, especially

when I might have something "political" to say about Ubuntu etc -

when I write such things it's to document things and what I think

about them in the context of my other notes, not to be picked out

in isolation (that said, I don't care if anyone copy/pastes parts

so long as attributed with a link back to the source page.. that

at least preserves the overall context, not to mention I might

change my mind or append to entries). Finally, it's a hellofalot

easier for me to just right-click open with seamonkey than to mess

around with blogging software - not that it won't happen in the

future but right now I'm fine with the all-in-one-file format.

TL;DR - then don't read it, I'm not writing for the fast crowd.

Lately Ubuntu has been taking quite a bit of flak.. occasionally

deserved but mostly simply because Canonical is a business and

they have to do business stuff. Like enforce trademarks and

generate revenue and insulate themselves from upstream changes.

Recently there was a somewhat overreaching C&D regarding

www.fixubuntu.com that in my opinion was resolved nicely - site

was fixed, apology issued, should be end of story. The shame is it

involved a media storm, I would like to think that in the future

such disputes can be handled more intelligently.

Generating revenue - well that's what companies have to do, if

you don't like the way Ubuntu does it then read my notes here or

go to the aforementioned site for a fix. The Ubuntu repositories

carry a number of alternative desktop environments and Mark and Co

have always supported the freedom to install and uninstall

whatever software the user wants or doesn't want. Personally I

don't use Unity (except occasionally the Qt version on demand) and

I really don't care if some searches are also sent to the internet

(I find that useful for finding videos) even if to generate Amazon

ads. A setting was provided to turn that off, plenty of other ways

to disable that behavior, and while some of the criticism was

warranted (resulting in a global on/off switch and other ways to

control what gets sent outside the machine) we type stuff into

Google or a number of other web sites and have stupid ads follow

us around all the time without worrying about it. Ubuntu's version