Links to some of the stuff on this page...

6/2/2020 - This is a continuation of Ubuntu Stuff, now with Ubuntu 20.04 on a brand new ZaReason Limbo 9200a. Warning - editing system files and settings can break your computer (happens to me all the time!). Not responsible for anything that happens as a result of trying any of this stuff.

A few days ago my new ZaReason Limbo 9200a PC was delivered. The

system has a 6 core/12 thread AMD processor and plenty of storage.

The primary drive is a 1T M2 SSD, with 3 more conventional drives,

1T, 2T and 4T. It came with Ubuntu 20.04 pre-installed on the SSD.

The other drives automount via fstab. The 4T drive is for backups

so probably should disable it in fstab and mount only as needed.

Fixing up the Desktop Environment(s)

First tasks are to check out what I have and fix up a basic working environment. I'm an old-fashioned app menu panel and desktop icons guy, 25 years of expectations are not going to go away, just how I work. So first step is to find the terminal, sudo apt-get install synaptic to use that drag in everything else I need to be productive. Pretty much I followed what I did under VirtualBox to get a Gnome Panel "flashback" session and a MATE session, detailed in my 20.04 notes in Ubuntu Stuff. Give and take, things are different with real hardware. Afterwards had MATE and Flashback sessions set up the way I like in addition the stock Ubuntu session.

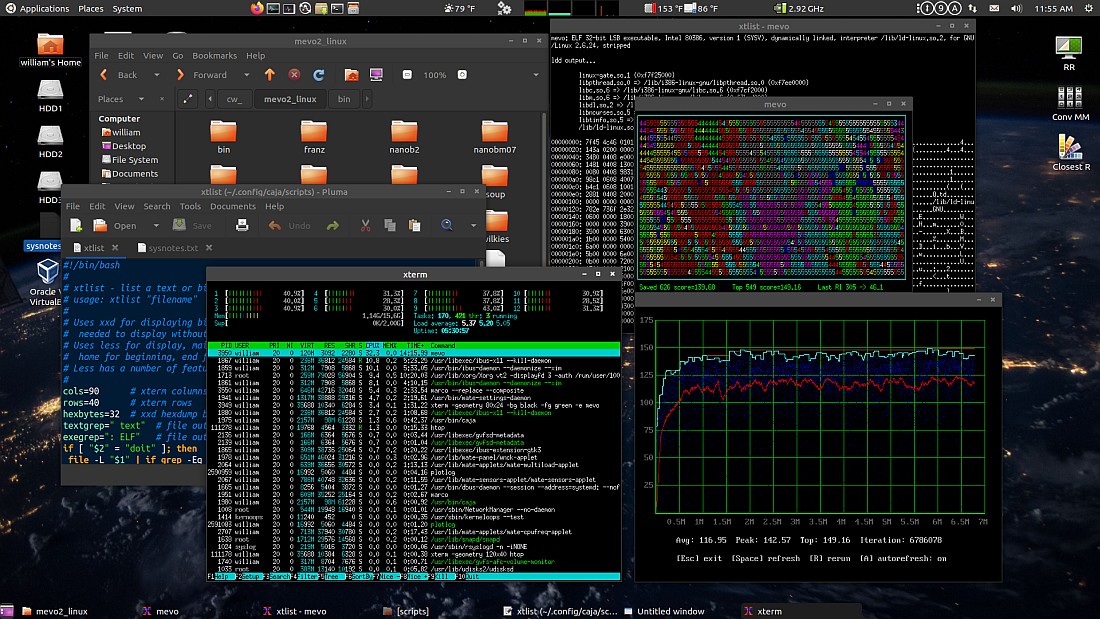

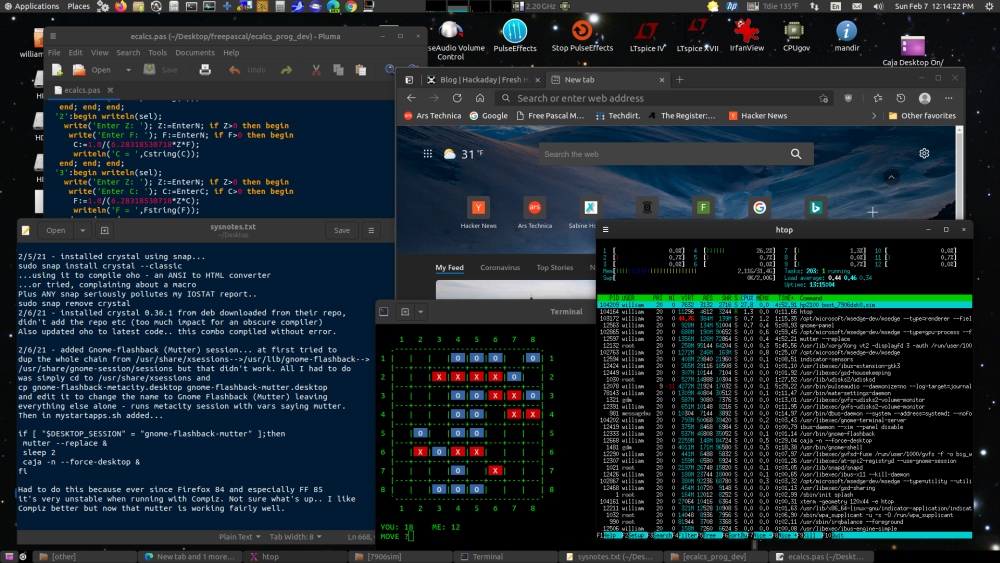

Here's the MATE session running some of my toys... (click for

big)

Here's the Flashback session...

It's getting there but took quite a bit of work - always does

with a fresh system.

Everything was done without modifying any root system files,

rather just installed packages and added a "mystartapps.sh" script

to the startup applications to selectively add or replace

components based on the $DESKTOP_SESSION variable. Even with the

Flashback session I still need MATE components, in particular Caja

since the Nautilus file manager no longer can handle the desktop.

Gnome Shell and Gnome Flashback both supply (different) components

that provide basic desktop functionality but I need my desktop to

function like a file manager. Caja is a fork of the Gnome 2

Nautilus and handles the desktop nicely, even better than the old

Gnome 2.

Here's a list of the desktop-related packages I installed along

with all their dependencies...

sudo apt-get install synaptic - installs a graphical package

manager

dconf-editor - for making adjustments to some of the settings

gnome-system-tools - adds useful things like users and groups

gnome-flashback - adds the gnome-flashback session(s) and

gnome-panel

gnome-tweak - Gnome-specific settings

mate-desktop-environment-core - pulls in caja and most of the

stuff needed to set up MATE

caja-open-terminal - adds a open terminal option, pulls in

mate-terminal

caja-admin - adds options to open folders and edit files as

administrator, pulls in the pluma editor

mate-applets - panel widgets

mate-indicator-applet - volume control clock etc

mate-notification-daemon - popup bubbles?

mate-tweak - MATE-specific settings

compiz - eliminates video tearing, better effects

indicator-sensors - temperature indicator for gnome panel and mate

panel

mate-sensors - temperature indicators for mate panel

mate-utils - optional extra utilities

Installing these and fully rebooting the system (logging out and

in isn't enough) adds extra login sessions for MATE, Flashback

(metacity) and Flashback (compiz). MATE compains about

incompatible widgets when starting up, possibly from running gnome

panel first (one of the first things I did was add gnome panel to

gnome shell so I could get around, but don't know if that's got

anything to do with it). Removed the incompatible widget and set

up the panels the way I want. Ran into a minor bug when setting up

the panels - sometimes selecting move on a widget made it go away

instead. Once set up I locked the items to avoid click mistakes.

The MATE session is pretty

much good to go now other than an issue with some of the dark

themes - when renaming files the cursor is invisible. To fix that

with my favorite dark theme I copied Adwaita-dark from

/usr/share/themes to ~/.themes, renamed it to

Adwaita-dark-modified, edited index.theme and changed both

instances of Adwaita-dark to Adwaita-dark-modified, and in the

gtk3.0 folder edited gtk.css and added the following (after the

import line):

.caja-desktop.view .entry,

.caja-navigation-window .view .entry {

color: blue;

background-color: gray;

caret-color: red;

}

For the Flashback sessions I added a script named

"mystartapps.sh" in my home directory containing the following...

#!/bin/bash if [ "$DESKTOP_SESSION" = "gnome-flashback-metacity" ];then caja -n --force-desktop & fi if [ "$DESKTOP_SESSION" = "gnome-flashback-compiz" ];then caja -n --force-desktop & fi

...made it executable and added it to Startup Applications. Note

- for this to work have to tell Flashback to not draw the desktop.

Use dconf Editor to navigate to org, gnome, gnome-flashback and

change the desktop setting to false.

It's starting to feel

like home now.

Originally I had Gnome Panel and Caja running (via

mystartapps.sh) in the original Ubuntu session just to get around

while setting up the other stuff, but with the other sessions

working I returned it to stock and fixed it up a bit...

Turns out the stock Ubuntu 20.04 desktop is more useful than I

thought. Originally I was under the impression that the new

desktop-icon extension didn't support desktop launchers (they had

generic icons and opened as text) but when a desktop file is

right-clicked there's an option to allow launching, when selected

the app icon appears and it works normally. Still have to open the

desktop in a file manager to do anything fancy, there's a

right-click option to do just that. A nice touch is almost

everywhere there's a right-click open in terminal option. A

cosmetic bug - the icons highlight when moused over but often the

highlight gets "stuck". Everything still works, just distracting.

Here's

a commit that mostly fixes the issue.

The side dock icons were a bit largish, using dconf Editor

navigated to org / gnome / shell / extensions / dash-to-dock and

changed dash-max-icon-size from 48 to 36. The dock isn't like

Gnome Panel where each window gets an icon, rather all instances

of an app are represented by a single icon with extra dots for

muliple instances. If no instances are open then clicking the icon

restores the most recently minimized window. If an instance is

already open then it displays thumbnails for each instance. More

clicks than a conventional panel but less clutter and distraction.

Another way to switch between apps is the Activities button on the

top panel which displays thumbnails for all windows.

The Gnome Shell extensions are Applications

Menu by fmuellner, system-monitor

by Cerin, and Sensory

Perception by HarlemSquirrel, the Tdie reading is from

indicator-sensors. With these extensions and after getting used to

the dock and other features I no longer need Gnome Panel and Caja

for this session. However sometimes I need the extra features of

the Caja desktop and file manager so I made a script to flip

between Caja and stock...

--------------- begin CajaDesktopOnOff -------------------------

#!/bin/bash if [ "$DESKTOP_SESSION" != "ubuntu" ];then zenity --title "Caja Desktop Control" --error \ --text="This script only works in the ubuntu session." exit fi b1=true b2=false if pgrep caja;then b1=false b2=true fi selection=$(zenity --title "Caja Desktop Control" --list --text \ "Select..." --radiolist --column "" --column "" --hide-header \ $b1 Caja $b2 Ubuntu) if [ "$selection" = "Caja" ];then caja -n --force-desktop & fi if [ "$selection" = "Ubuntu" ];then killall caja fi

--------------- end CajaDesktopOnOff ---------------------------

...and made a desktop launcher for it (with Caja). Defaults so

that OK is to flip. Make sure all Caja windows are closed or not

doing anything before flipping back to Ubuntu. Even though I don't

require a panel with the dock, it's still nice to have. One way

would be add it to mystartapps.sh...

if [ "$DESKTOP_SESSION" = "ubuntu" ];then gnome-panel --replace & fi

It actually launches both the top and bottom panels, the top

panel hides behind Gnome Shell's top panel so have to make sure

it's set to be the same size or smaller or bits of the panel will

peak through. Anything done to Gnome Panel also affects the

Flashback session. Instead of adding to the startup apps it would

be easy to make a GnomePanelOnOff script similar to the above..

Yes I think I'll do that...

--------------- begin GnomePanelOnOff -------------------------

#!/bin/bash if [ "$DESKTOP_SESSION" != "ubuntu" ];then zenity --title "Gnome Panel Control" --error \ --text="This script only works in the ubuntu session." exit fi b1=true b2=false if pgrep gnome-panel;then b1=false b2=true fi selection=$(zenity --title "Gnome Panel Control" --list --text \ "Select..." --radiolist --column "" --column "" --hide-header \ $b1 "Use Panel" $b2 "Remove Panel") if [ "$selection" = "Use Panel" ];then gnome-panel --replace & fi if [ "$selection" = "Remove Panel" ];then killall gnome-panel fi

--------------- end GnomePanelOnOff ---------------------------

That works.. boots up stock, can add/remove the extra components

as needed.

2/7/21 - lately Compiz has been interfering with some apps,

mostly Firefox, so set up a Mutter Flashback

session.

Getting my old 32-bit binaries to work was fairly easy - ran sudo

dpkg --add-architecture i386, reloaded the package list and

installed libc6-i386, lib32ncurses6, lib32readline8, lib32stdc++6,

libx11-6:i386, libncurses5:i386 and libreadline5:i386. Probably

don't need the lib32 versions but whatever. Oddly I couldn't find

libncurses5:i386 and libreadline5:i386 using the Synaptic package

manager (very necessary) but checked the repositories on the web

and it said it was there, apt-get install worked. Bug in

synaptic's search function? No matter, all my old corewar toys and

precompiled 32-bit FreeBasic binaries work fine.. used MEVO as a

load simulator in the earlier screen shots.

Added/installed my scripts I use frequently - in particular

AddToApplications and AddToFileTypes to make up for the lack of

user-adjustable associations. MATE/Caja already has an option for

making app launchers so don't really need CreateShortcut but added

anyway, sometimes I like to make launchers that are not on the

desktop. Compiled and installed Blassic and my crude but useful

work scripts (conv_mm and closestR). Installed the FreeBasic

binary package along with a bunch of dev packages it needs to

work. Compiled and installed Atari800, had to install the sdl1.2

dev packages to get a graphical version. There's also a fairly

recent version in the repositories, no longer needs ROM files

thanks to Alterra. The Vice C64 emulator works well, much better

than it did under VirtualBox.

Tried to install IrfanView in wine but it needs mfc42.dll. Copied

the file to the system32 folder, no joy. Googled.. oh run regsvr32

mfc42.dll, no joy. Moved it to the windows folder then regsvr32

worked. My script doesn't... oh nice got a 64-bit wine now,

install location is different, edited the script, now it works.

There are plenty of native Linux image editors but I like

IrfanView for simple/common stuff, been using it since before

Windows 95.

No DosEmu/FreeDos in the repos. One of the things I like about

DosEmu is it's scriptable - rigged it up so I could right-click a

.bas file and run it in QBasic, very handy for "one offs". DosBox

kind of works but totally not the same. I suppose I don't really

"need" dos anymore, FreeBasic and my fbcscript script works for

quick programs, but I just want my old stuff. Tried compiling

DosEmu from source but that ended up looking futile - configure

was bugging out trying to figure out what glibc version I have

(was kind of comical.. puked the environment variables and a bunch

of other junk then declared I had version 0 and I needed a newer

version... nice. Even if I patched over that chances of

successfully compiling and installing are too low to put in the

work. Thought about transplanting what I'm running under 12.04 but

it's a lot of files and I'm trying to avoid messing with anything

above /usr/local as much as possible.

Found a DosEmu/FreeDos deb for Ubuntu 19.10 and installed that

with gdebi, appeared to work but after copying over my dos

configuration and environment found that it won't run anything

that uses DMPI (XMS), or most games. This bug has cropped up

before, there was a fix but it no longer works and likely won't

ever work (it works on my 12.04 system but only because it no

longer updates.. kernel updates often broke it). Disabled XMS in

the config file, still very useful for running QBasic and other

normal dos apps. My old qbasic_pause script for right-clicking bas

files still works. For games, installed DosBox and rigged it so it

booted into the same environment with the same path, works and can

make the screen bigger. Got Dos.

Thoughts so far

MATE - probably my favorite environment, certainly has my

favorite components. Very configurable and expands on the

traditional desktop concept. On my system the Marco window manager

flickers when watching video, installing and selecting Compiz

fixes that. The panel is easier to set up and adjust, widgets can

be placed anywhere on the panel and it has a better temperature

widget than Gnome. Sometimes things bugs out when moving, once set

up and locked it's fine. The main bug/feature that makes me not

use it as much is the screen blanks after inactivity and I can't

find a setting that controls that.

FlashBack - the default desktop is not useful for me, can't

arrange the icons (the options are grayed out). Works well when

the Flashback desktop is turned off using dconf-editor and caja -n

--force-desktop is run at startup to handle the desktop. MATE and

Flashback can share themes if compatible components are chosen.

Gnome Panel isn't as configurable as MATE's panel - can't make

transparent, panel widget and icon positioning is less flexible.

But it is more stable, no need to lock since it stays locked

unless alt is pressed. Been using it for 7 years so used to it.

The Metacity window manager flickers too, install Compiz to fix.

With both MATE and Flashback I haven't figured out how to switch

the clock to day/date/time format [one solution is use app

indicator rather than app indicator complete, and use the separate

clock applet, still somehow I got app indicator complete to output

the full format date/time on 12.04].

Ubuntu/Gnome Shell - I'm liking this session more and more. The

side dock took a bit of getting used to but the more I used it the

more I like it. Even the Activities overview is useful - these

features work better on a fast machine with a large monitor. The

new desktop-icons extension works well - the manipulation

functions are limited but once set up the icons are beautiful and

perfectly functional. I still need the more advanced stuff but not

all the time, sometimes it's nice to simplify. For most tasks the

stock environment is fine and perhaps less to mess with means less

distraction. The fancy stuff is all there, behind a couple

double-clicks.

The machine itself is awesome. Got to get the memory module fixed

but that's not a big deal.. 16 gigs is plenty. It's crazy fast..

to me anyway, spec-wise it's only about midrange to average.

6/5/20 - To make sure the system wasn't stressing the solid state

disk too much I made a disk monitor script...

------------ begin diskrwmon -----------------

#!/bin/bash # diskrwmon - original 200602, comments 200605 # script to show uptime/load, temperature, cpu and disk I/O stats # requires xterm, sensors from lm-sensors and iostat from sysstat # semi-specific specific to my Ubuntu 20.04 system # if [ "$1" != "" ];then # now running in xterm (presumably) while [ 0 ]; do # loop until terminal window closed echo -ne "\033[1;1H" # home cursor echo "" uptime echo "" # -f for Fahrenheit, omit for C # first grep isolates desired sensor, # second grep highlights from + to end of line sensors -f | grep "Tdie:" | grep --color "+.*$" # run iostat to get a cpu usage report, trim blank lines iostat -c | grep " " echo "" # run iostat to get a human-formatted 80-column disk report, # first displaying cumulative since boot, then over 10 seconds # for older versions of iostat use iostat -dh 10 2 (not as pretty) iostat -dhs 10 2 done else # no parm so launch in an xterm window # adjust geometry rows as needed to avoid scrolling xterm -geometry 80x42 -e "$0" doit fi

------------ end diskrwmon -------------------

The script displays two iostat disk reports every 10 seconds, the

first is cumulative since the last boot, the second over the last

10 seconds. Also shows uptime (useful for interpreting the

cumulative report), temperature and CPU usage. It is

system-specific, have to edit to set which sensor to display and

adjust the terminal height which depends on how many file systems

are mounted. On my system the output looks like...

[after an update ended up with loop0-loop9 so had to change the

xterm line to 80x44 to compensate]

The system buffers non-critical writes to avoid hammering the

file system with small writes, on a solid state disk writing a

single byte can result in kilobytes to megabytes being actually

written depending on the nature of the storage system. Calculating

lifetime is tricky - according to my old crude and probably wildly

inaccurate ssd life program, 81.1

blocks per second sustained with an endurance of 1000 writes per

block on a 1T drive with 500G free results in a lifetime of a bit

over 1 year, that's a bit concerning (and hope it's very wrong).

That program was written in the EEE PC days, things are different

now but regardless the goal is to minimize writes/per second. I

will probably move /home to sda to get it off of the SSD, and

mount the SSD with noatime - with access time even reading a file

writes data. One thing for sure, the solid state disk is very fast

compared to a normal disk drive.

[6/6/20] Another way to calculate lifetime is by how many times

the drive can be rewritten. Say the endurance is 1000 writes, for

a 1T drive that's 1000T of writes before errors occur. If each

write causes 5 times the data to be written (amplification factor)

then say 7 gigs/day actually writes 35 gigs/day, or 12.775T per

year. 1000/12.775 ~= 78 years. Even if endurace was only 300

that's still > 20 years. But that seems unrealistic... frequent

writes of just a few bytes (logs, access time etc) can still

trigger megabytes of actual writes and greatly shorten lifetime,

so best to set noatime on the SSD and move at least /var/log to a

normal disk.

Those 9 loop file systems are from "snap", Ubuntu's new packaging system. I have no snap apps installed yet it read over 20 megabytes of something from something.. and every bit of data read causes data to be written in the form of access time updates - definitely need to add noatime to the SSD mount entry. As I have written earlier in my Ubuntu Stuff Notes I'm not a fan of snap - it's opaque, pulls in huge dependencies, updates outside of the package system (from what I've heard sometimes even when the program is running, causing loss of work), and in my testing in a VM didn't work half the time - but for now left it in place. In my VirtualBox test install I definitely noticed the extra drag and removed as much of snap as I could. This system has enough resources to not notice the extra snap drag, might let it be for now to study it but if it misbehaves it's gone.

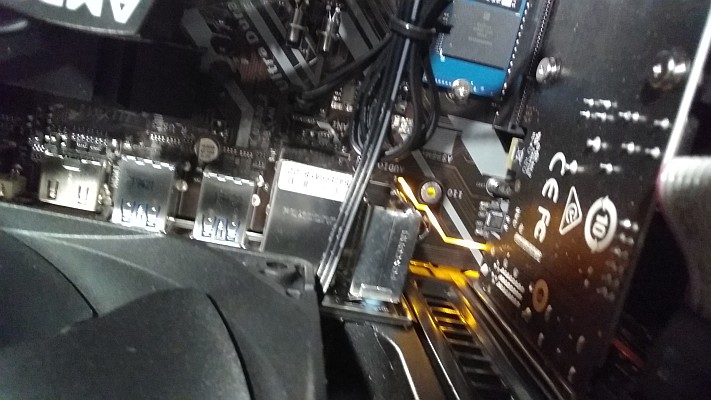

Then things went south. While

swapping around ram modules to troubleshoot the missing memory

issue, this happened...

...that ain't no LED, that's a trace glowing cherry red! Ouch!!!!

Definitely a "I need a beer" moment [but turned out to be a weird

indicator light after all, see below]. ZaReason offered to send me

a replacement motherboard but after this I'm inclined to leave the

surgery to them. I was planning on eventually getting another

system anyway (I work better if my work is on a separate

system/monitor) so when the new system arrives will swap the

drives and send this one in for repair. I thought about whether or

not to post about this, but decided to share it as a lesson that

when working on computers unexpected things can happen. And it's

kind of a cool picture, seen (and repaired) plenty of fried traces

but caught this one in the act [of doing a damn good job of

impersonating a trace on fire].

7/3/20 - Got the new machine, which has a ASRock B450M Pro4

motherboard, swapped the drives from the first machine to the new

machine and it came up fine, just like I left it (one of the

things I love about Linux). Unlike the Giga-Byte MB with this one

I didn't have to adjust BIOS settings to get VirtualBox to work.

With that sorted, turned my attention to the first broken machine.

First thing I noticed was one of the ram modules wasn't seated

properly, fixed that but no go, nothing but the drives clicking,

that trace lighting up and a bit of an electrical smell like

something was getting hot. But it wasn't the trace.. cautiously

touched it expecting it to leave a mark but it was cool, it was an

indicator light after all just like ZaReason thought it was (good

going Giga-Byte.. I know let's make a useless light that looks

just like a trace on fire - brilliant!). The real problem was the

power connector on the motherboard was loose and barely touching

on one side, probably knocked it loose while trying to swap around

RAM modules in the dark. Inspected the connector, no apparent

damage, plugged in all the way and the computer booted fine, got

back the full 32 gigs of ram, apparently that was just a

mis-seated RAM module. Lesson - don't work on a PC in the dark!!!

Glad it's sorted and that I don't have to send anything back for

repair.

The new machine "only" has 16 gigs of RAM but that's probably

fine.. less memory to experience a cosmic ray induced bit flip

(not sure how often that happens but without ECM I'd likely not

know unless something bugged out), less chance of file corruption

since Linux uses extra memory as a file cache. Perhaps I should

make a program that allocates say a gig of memory and periodically

checks it for changes.

7/4/20 - Besides iostat, a handy command for determining what is

being written is "sudo find / -xdev -mmin 1" which lists the files

that have been written to in the last minute. The command itself

always updates the auth.log and system.journal files. To help

minimize writes to the SSD I created a "home_rust" folder on a

spinning drive and from my home folder copied .cache, .config and

.mozilla to it, replacing them with symlinks. That got rid of the

majority of writes to the solid state drive, especially when

browsing the web. I could further reduce writes with some

system-level surgury - mount the SSD with noatime and move at

least /var/log and maybe /var/lib/NetworkManager to spinning rust.

Access time is marginally useful and files that are repeatedly

read tend to live in the memory cache and don't update the file

access time (not a bug please don't fix). Logs are another story..

every time a program is opened or some GTK thing doesn't feel

happy it spews the logs, and every new network connection updates

NetworkManager's timestamps. How much effect this has is not

clear, depends on how smart the SSD is regarding keeping partially

empty pages for small writes without triggering page erases/moves

(write amplification).. but would feel better if it didn't happen

at all.

On the other hand, one must be very careful moving system

folders, particularly to a drive that can be unmounted with one

misplaced click. The few home data folders I moved are all

basically non-critical - apps complain, settings go away until

remounted - but system directories are another story. No idea how

systemd would react if it found itself unable to write its logs.

Also the matter of making the switch while the system is running,

can get away with it with home directories but trickier trying to

move log that way, a log write might occur before the process

completes. Nahh.. unless I can see evidence that it's actually

hurting the drive lifetime better leave it alone, and from what I

can tell SSD's have come a long way since the days of the EEE

netbook when one had to worry about such things.

I found a tool called nvme that reads the NVMe (SSD) logs and

generates a report, it's in the nvme-cli package. After

installing, running "sudo nvme smart-log /dev/nvme0n1" (in my

case) generates a report that includes the number of blocks

written and estimated lifetime used. Be super careful with that

tool, some commands will make the drive go bye-bye in a "flash".

Here's a screen-shot... (click for bigger)

As can be seen in this screenshot, in the 14-odd hours the system

has been up it has written 1.5G of data to the SSD (this probably

does not include any write amplification and housekeeping but

seems like quite a lot). When the system is idle writes tend

toward 0, most writes that do occur go to sda thanks to moving

those home folders. When FireFox is open it writes quite a bit to

sda.. in the case of Yahoo megs at a time. Almost all idle writes

to nvme0n1 even with FireFox open are to the logs and the

NetworkManager timestamp. According to nvme smart-log the drive

has logged 141,659 data unit writes, each one 512,000 bytes, for a

total of 72,529,408,000 bytes written. Or ~73 gigabytes over

the 95 hours this drive has been in use. The money figure is

percentage used, at 0%. With that in mind copying all my work data

to the SSD.. 137 gigs worth (which resulted in about 128 gigs of

writes according to iostat.. probably a base 2 vs base 10

difference). After that operation, data units written increased to

410,508, or ~210 gigabytes written, percentage used still 0.

Windows 7 (in VirtualBox) running from the SSD generates lots of

idle writes but wow is it snappy! Seems to settle down after

awhile.

7/6/20 - Getting my HP2652 wireless printer working was tricky..

first had to get the "HP Direct" connection going, figuring out

the password etc (press wireless and info buttons together to

print the info, ended up being 123456), and trying to set it up.

Running hplip was no use, even after installing hplip-gui. Add on

the printer setup screen found it but it was non-functional,

complaining it couldn't find a driver. Had to go to Additional

Printer Settings and click Add - this saw the printer but appeared

to lock up. After trying a few times figured out it was waiting

for me to click the wireless button on the printer, after doing

that it worked. Manual says the wireless LED should stop blinking

but never did, but seems to work. This printer doesn't seem to be

capable of printing a full-size page so have to resize to 80-85%

to keep from cutting off the margins (same as how it behaved with

Ubuntu 12.04). Adobe Reader does a better job of recognizing the

printer's capabilities, installed the last avalable version

(9.5.5), 32-bit-only so have to have that set up. To get acroread

to show up in Gnome's associations had to use my AddToApplications

script on the acroread binary then select it in the file open-with

properties. Showed up automatically in Caja's associations.

Headphones didn't work, just had sound through the HDMI monitor

and no other selection in the Sound Settings Output section.

Rebooted into the BIOS setup and changed the Front Panel sound

setting to AC97, now it shows up in Sound Settings and works.

SSD lifetime again

7/14/20 - Here's a (probably) better SSD lifetime estimator...

(got to be better than what I was using for the EEE PC)

------- begin SSDlife2 ------------

#!/usr/local/bin/blassic print "===== SSD life estimator =====" input "Drive capacity (gigabytes) ",dc input "Capacity used (gigabytes) ",cu input "Write endurance (times) ",we input "Data written (gigabytes) ",dw input "Timeframe (hours) ",tf ' calculate write amplification (guessing!) wa = 1 + ((cu / dc)^2 * 5) : print "wa = ";wa ' calculate amount written aw = dw * wa ' calculate gigs per hour gph = aw / tf : print "gph = ";gph ' calculate total write capacity twc = dc * we ' calculate lifetime in hours hours = twc / gph : print "hours = ";hours ' calculate days days = hours / 24 : print "days = ";days ' calculate years years = days / 365.24: print "years = ";years input "----- press enter to exit ----- ",a system

------- end SSDlife2 --------------

This one takes into account that modern SSD's (probably) better

handle write amplification, in this program it's calculated based

on capacity used compared to total capacity and likely

overestimates - as written 20% full results in WA=1.2 and 50% full

results in WA=2.25, no idea how close to reality that is but for

my workload where I'm constantly punching holes in huge files I'd

rather error on the side of caution. Reduce the 5 or increase the

2 in the wa formula for a less drastic increase or enter 0 for

capacity used to fix write amplification to 1. The write endurance

input is how many times the entire capacity of the drive can be

rewritten, for my WDC 1T (1000G) drive it is specified as 600T so

that would be 600 times. Data written and timeframe can come from

iostat and uptime or from the nvme smart-log command.

My iostat figure for the last 17.75 hours is 32.7G so 1000G

capacity, 200G used, 600 times, 32.7G over 17.75 hours computes to

a lifetime of 30.96 years. Going by the nvme smart-log figures,

since using this drive it's performed 604,630 writes of 512,000

bytes each, or 309.57G (base 10 G's) over 179 hours. I'm pretty

sure that does not include housekeeping so entering 1000, 200,

600, 309.57, 179 - that works out to 32.98 years. So far so good

but also I haven't been using the drive all that heavily yet.

One thing I've noticed (also recall this from the EEE PC days),

whenever trim is issued, typically once a week with a stock Ubuntu

install, iostat will report the entire unused capacity as having

been rewritten. I seriously doubt that's actually the case - trim

might trigger some writes from reorganization in the interest of

making future writes more efficient but if it actually wrote 773G

of data it would have taken a long time for the drive to become

responsive after issuing the trim (no perceivable delay), plus

iostat (nor any utility as far as I can tell) can't actually tell

what's going on inside the drive beyond the information the drive

offers up, which (again as far as I can tell) does not include

internal housekeeping.

Backups

The system has a 1T SSD and 1T, 2T and 4T regular drives. The

plan was to put OS and work files on the SSD, static

not-that-important files and constantly changing files on the 1T

so they won't consume SSD capacity, big stuff on the 2T drive and

use the 4T drive for local in-the-machine backups. In addition to

this I also periodically copy the really important stuff to an

external drive and/or email off-site, but that stuff is only a

small fraction of the total capacity, for the rest backing up

internally and periodically swapping out the backup drive is

sufficient. The biggest risk of data loss is by far fumble-fingers

which happens all the time, followed by single-drive failure, rare

but when it happens it takes out a lot. Internal backups mitigate

both of the more common scenarios and cycled drives and off-site

backups mitigate other scenarios. I back up my system files while

the system is running (everything except for stuff like /tmp /dev

/media /proc and anything under /mnt is done separately to avoid

backing up the backup drive), not exactly proper but in my

experience (had my boot drive fail before) that is sufficient to

restore the system to working condition.

Right now there's nothing all that important in the new machine

beyond time setting it all up, but now that I'm starting to do

real work it's time to get backups working. Key word is working!

First thing I tried was the backup program included with Ubuntu -

Deja Dup, a front-end for duplicity. Specified what I wanted to

back up and where to, it appeared to work except kept asking me if

I wanted to copy the contents of symbolic links (no of course) and

once the backup was completed it just sat there doing nothing with

no disk or cpu activity. Only options were cancel and resume later

so clicked the latter. It appeared to work, saved a bunch of

archives and in Nautilus the revert to a previous version option

seemed to recognize the snapshot. I soon learned those backups

were basically useless, at least for the way my fingers fumble.

After mistyping a chmod command and wiping out my work folder

(could have fixed it.. accidentally removed execute from all the

folders but since there wasn't any real work saved there yet good

time to test the backups) discovered that Deja Dup was incapable

of restoring the folder, restored from the source drive. With that

fixed, tried restoring and reverting test files, now it couldn't

find the snapshot at all. Worse, the archive files were

incomprehensibly named, restoring anything manually looked next to

impossible. Maybe it works for some but it failed for me bigtime

on the first try. Next.

Fired up Synaptic and found a backup program called "Back in

time", installed backintime-qt and as far as I can tell it works

perfectly. It uses rsync, same as what I used for my old backup

script but implemented better - as usual with rsync it backs up

only files that have changed but unlike my homemade solution the

unchanged files are hard-linked with previous snapshots so that

many snapshots can be taken, each appearing as a compete copy of

the file system but without consuming extra space for duplicated

content. Awesome! Restore options are apparently limited to full

restores but it doesn't matter, if a file or folder gets wiped out

simply open up the backup folder, navigate to it and drag it back,

no need to even run the backup program. When making a snapshot I

run it as root (using the provided menu entry) and it gets all the

symlinks permissions and ownerships right - the snapshot tree is

simply a copy of the filesystem. One limitation is it doesn't back

up symlinks in the root directory, at least not when selecting

them as folders, don't want to back up all of root as that would

presumably recurse into the backup drive under /mnt. Not a

problem, just backed those up separately, they will never change.

Permissions

I'm gonna have to get an external drive and reformat it ext4,

this problem crops up every time I use a NTFS-formatted drive to

transfer files. At least symlinks seem to be preserved but file

permissions get all out of whack, upon copying back to the new

system everything seems to come out 777 - executable, by anyone.

Same with directories, all get set to 1777. Not all that worried

about the directory permissions but for the files it causes any

text file to open up the run dialog instead of an editor or

whatever else it's associated to, which is quite irritating. Tried

to fix it using recursive chmod commands but they never seemed to

recurse right and one syntax slip and there goes all the stuff,

which happened (then discovered my backups were no good). I used

to use a perl script called "deep" for recursively fixing

permissions but perl 5.30 no longer supports File::Glob::glob,

error message said to replace with File::Glob:bsd_glob but that

didn't work. So hacked up my own crude solution...

------- begin fixallperms.sh ---------------

#!/bin/bash # this script resets the permissions of all files with extensions # in and under the current directory to 644 (non-executable) # and all files that match exelist below to 755 (executable) # files without extensions are ignored exelist="*.sh *.exe *.bat *.com *.blassic *.fbc" basedir=`pwd` find . -type d -readable | while read -r filedir;do cd "$basedir" cd "$filedir" for file in *.*;do if [ -f "$file" ];then echo "$basedir/$filedir/$file" chmod 644 "$file" fi done for file in $exelist;do if [ -f "$file" ];then echo "EXE - $basedir/$filedir/$file" chmod 755 "$file" fi done done

------- end fixallperms.sh -----------------

The script recursively applies chmod to all files in and under

the current directory (my way.. got tired of destroying stuff

trying to make -R work), first setting all files with extensions

to 644 (non-executable, ignores files without a dot extension i.e.

most binaries) then sets particular extensions I want to be

executable to 755. First it gets the current working directory,

then uses find to generate a list of subdirectories (including the

current directory) which it pipes to a while read loop, then for

each directory changes to the base directory then the relative

find directory, uses for to reset all files in that directory to

644 then uses for again to set executable files to 755. Use with

caution! Don't try to run this on an entire home directory and

definitely not on system directories, it is meant only for fixing

permissions within specific folders after they got wiped out from

transferring via a NTFS or FAT drive.

Working through other issues

7/15/20 (replacing previous to simplify.. editing this on the new

machine)

Gnome Panel's weather app is broken, the forecast doesn't work

and the option to enable the radar map url doesn't stay checked.

The similar app for MATE panel works fine and with the same url.

Found an app called gnome-weather, not a panel app and doesn't

have a radar pane but works and has a nice hourly forecast

feature. The radar was handy for a quick check, for

interactive/moving displays always went to the local TV radar page

anyway. Making a minimal window that just shows a specified web

page doesn't look that difficult, found

this example.

MATE is wonderful and very glad it exists (especially the Caja

file manager), but it does have some rough edges. Some have been

previously noted... trying to move panel widgets often results in

removing them instead (or appearing to have been removed). Once

the panel is set up it is stable and works fine, but noticed

another oddity.. with some widgets (including launchers and the

menu) checking the Lock option does not deactivate the remove

option. There's a cosmetic one- or two-off bug in the MATE system

settings app... in the left pane selecting a section jumps to it

but highlights a section below it. The big one is after about

15-30 minutes of no mouse or keyboard activity (haven't timed) the

screen blanks, even when watching video. It doesn't do that in a

VM, on my old 12.04 machine I use 20.04 running MATE in a VM to

watch Netflix Hulu etc and it works fine. Have not found a setting

to control that. MATE's settings app doesn't have a power section

but in Gnome the screen blank function is set to never. Despite a

few rough spots I generally like MATE better - uses less memory,

better panel applets, has a really cool Appearance app for

selecting and saving theme combinations (which transfer to Gnome)

- but the screen blanking thing usually drives me back to Gnome

Flashback.

The Caja file manager does not properly generate thumbnails for

PDF files. So far the only workaround I have found is to open the

folder in Nautilus (aka Files) to create the thumbnails, once

created then Caja displays the thumbnails fine. But not for any

newly created PDF files until refreshed by opening in Nautilus.

Only PDF files seem to be affected, thumbnails for images are

fine. Setting Caja to be the default file manager didn't fix the

issue but did add a handy right-click to Open in Files (before had

to search for it in a list of apps) and makes Gnome Panel's Places

entries open in Caja.

It takes a combination

It would be nice if there was a single distribution that provided a well-functioning traditional desktop out-of-the-box, but from what I can see there isn't, at least not without some tweaking. MATE is very close - close enough that if the screen blank thing was fixed I'd probably spend most of my time in the MATE session, but there are still some settings I can only access from a Gnome session and MATE isn't quite as stable as the good old Gnome 2.3x system from which it was forked (no doubt from having to convert to GTK3 and all the other modern stuff). I do hope they keep up development, in my opinion as far as Linux desktop GUI's it's the best thing going right now for providing a traditional interface.

Gnome... if not for simplifying Nautilus to the point of hardly

being able to use it, and especially removing its desktop

functionality, Gnome would still be fine. I have an earlier

version of Gnome 3 on my old 12.04 system and it works more or

less like a better version of Gnome 2. Removing things like being

able to run Nautilus scripts without files specified is bad enough

(many of my scripts operate on the current directory, stuff like

play all media files etc), but removing the ability to manage the

desktop is too much, the only way to work around that is to use

another file manager that can provide that functionality and right

now that pretty much means Caja. Even Nemo can no longer handle

the desktop. Developers have scrambled to provide at least

something for the desktop - a Gnome Shell extension and whatever

that thing is that ships with the stock Flashback session - but

these are not good replacements. Basically they're just launcher

panels and provide little or no actual file manager abilities,

better than nothing but require opening the desktop in a file

manager to do anything beyond simple basics.

My productivity depends on my desktop being an extension of my

file manager with the added ability of remembering icon positions

and other desktop-specific stuff like creating launchers. Like it

has been for 25 years! Every extra click or moment spent having to

do something the OS can no longer do is time taken away from

actually getting stuff done. The new interfaces just don't cut it.

I can see what they are after - most people spend their time

mostly in one app, but my job requires not only running design

stuff in a window running Windows but also many other utilities..

terminals, calculators, text editors, file viewers, dumb but

important little utilities I type stuff into and they give me

answers. Sometimes I have half a dozen or more windows open at

once so I need a good panel for one-click access. Thankfully Gnome

Panel is still provided, and the Flashback session doesn't mind

that I use Caja for my desktop and default file manager. Almost

like they knew... I did here talk in the early days of 20.04

development about using Caja to provide the desktop.

So it seems I'm set now, thanks to the MATE project. Gnome is still fine, all the extra configuration trouble was basically caused by removing functionality from a single app, which I still need to use to compensate for Caja's PDF thumbnail issue.

Most of the other apps I use seem to work fine.. gFTP and gerbv are in the repository, pretty much all of my old simple binaries (32 and 64 bit) function as-is with minimal fiddling. For editing I use SeaMonkey Composer, not in the repository but "installing" is easy, simply download and extract anywhere and run it. Installing was just putting a symlink to it in /usr/local/bin and making another script that runs it with --edit to run composer, used my AddToApplications script to add desktop entries to make it easier to associate and added menu entries. Also use it with the --news option for newsgroups. Wasn't quite right with my dark system theme but came with another theme that works great.

Hmmm... not as good as my 12.04 system?

7/31/20 - Been doing real work with the new system and ran into

some deficiencies. VirtualBox running Windows 7 and Altium work

well, especially nice on the solid state disk (hammers it with

disk writes but according to my lifetime calculator it's good for

another 43 years). Installed FreeCAD, mainly for working with 3D

step files. At almost a gig it's overkill for a step file viewer

but probably is good for other stuff. For all that clicking help

just shows an error saying I need to install the documentation

package (which doesn't exist in the repository). The SeaMonkey web

browser and editor works great, so long as DRM isn't involved, got

FireFox for that. Don't like the new FireFox UI. what used to be 2

clicks to find a bookmark is now several more clicks, the old

Mozilla/FireFox interface is so much better. The new Gnome

Nautilus file manager still sucks, and Caja has a tendency to get

unstable sometimes, besides the PDF preview issue it often hangs

just trying to preview simple graphics. Caja does better with

associations, Nautilus sometimes doesn't pick up newly added

associations and makes me click more to get to what it does

recognize, no simple "open with" list. So I can choose stability

and previews or usability and bugs. My old 12.04 Gnome 3 system is

much better.

Things really got frustrating when it came time to put together

my circuit docs. I use the pdftops and ps2eps command line

utilities to make (sometimes rotated) eps files for embedding in

documentation. Pdftops was already installed, installed ps2eps.

These are very simple utilities but somehow failed - the ps2eps -R

+ command fails to also rotate the bounding box, no amount of

command line fiddling could fix. Uses GhostScript in the

background, probably what's failing. It gets much worse - the

included version of LibreOffice is just broken. When trying to

open an empty file (from Templates to start a new document) it

opens up an unrefreshed window with the desktop background showing

through. Works when started from the app menu, just not

right-clicking a file from the file manager. Traced the issue to

the Compiz window manager, opens OK when using Metacity or Mutter.

With that sorted, it's still broken - doing very simple stuff like

writing some text, hitting enter a couple times then dragging in

an image fails - inserts the image before the text instead of

where I told it to. Unusable. Might be able to fix by uninstalling

the distro version and manually installing downloaded debs, that's

what I do on my old system running LibreOffice 5. The imagemagick

convert -crop command doesn't properly update the dimensions, to

fix have to load the resulting cropped image into IrfanView (an

old Windows program I run with wine) and resave. The new software

as it stands now is unsuitable for doing documentation, to get my

work done had to zip up my work folder, copy it to a USB drive and

copy it to my old 12.04 system.

8/1/20 - Having to copy work back and forth to edit new stuff on

the old system is not a tenable situation, so Take Two.

Uninstalled all traces of LibreOffice 6, copied over the

pile-of-debs for version 5.2.3 from my old computer and installed

them using sudo dpkg -i *.deb. The older version works fine, no

problems with Compiz. Not sure why version 6 was so bad but it

reflects my experience with some new software in general,

especially new versions of programs I have used for years - all

those shiny new features (that I rarely need) tend to take the

focus away from the basic boring stuff (that I absolutely need)

and I usually notice right away. Especially when the

"improvements" make it harder to do simple things. I checked LO 6

on my Ubuntu 20.04 VirtualBox intstall and it seems to work OK

there (under MATE), graphic images insert properly so not sure

what gives. Maybe I hit a corner case (like immediately), maybe

it's something about this machine, but regardless of the cause the

new version didn't work and the old version works.

Had no luck getting ps2eps to work right. Uninstalled the

repository version and copied an older version from my 12.04

system to /usr/local/bin (it's just 2 files, a bbox binary and a

perl script) but it behaved the same.. rotated the vectors but not

the bounding box.. likely something changed in GhostScript that

isn't accounted for. This is a stupid simple thing people need to

do all the time (rotating PDF's), surely there's a solution, and

there is - pdftk, already installed. The following command creates

a new PDF that's rotated clockwise 90 degrees...

pdftk input.pdf cat 1-endeast output output.pdf

To rotate counter-clockwise use endwest, to flip use endsouth.

Once the rotated PDF is made then pdftops -eps can convert it to

EPS format for embedding into documentation. I'll never remember

that syntax so made 3 scripts...

-------- begin pdf2eps -------------------

#!/bin/bash # convert PDF file to an EPS file if [ "$1" = "" ];then echo "Usage: $0 input.pdf [output.eps]" echo "Converts a PDF file to an EPS file" echo "If output not specified then uses basename.eps" exit fi if ! file "$1"|grep -q "PDF";then echo "That's not a PDF file" exit fi outfile=`echo -n "$1"|head -c -4`.eps if [ "$2" != "" ];then outfile="$2";fi echo "Converting $1 to $outfile" pdftops -eps "$1" "$outfile"

-------- end pdf2eps ---------------------

-------- begin pdf2epsr ------------------

#!/bin/bash # convert PDF to a clockwise-rotated EPS file if [ "$1" = "" ];then echo "Usage: $0 input.pdf [output.eps]" echo "Converts a PDF file to a clockwise-rotated EPS file" echo "If output not specified then uses basename_rotated.eps" exit fi if ! file "$1"|grep -q "PDF";then echo "That's not a PDF file" exit fi outfile=`echo -n "$1"|head -c -4`_rotated.eps if [ "$2" != "" ];then outfile="$2";fi echo "Converting $1 to $outfile (rotated clockwise)" pdftk "$1" cat 1-endeast output /tmp/pdf2epsr.tmp.pdf pdftops -eps /tmp/pdf2epsr.tmp.pdf "$outfile" rm /tmp/pdf2epsr.tmp.pdf

-------- end pdf2epsr --------------------

-------- begin pdf2epsccr ----------------

#!/bin/bash # convert PDF to a clockwise-rotated EPS file if [ "$1" = "" ];then echo "Usage: $0 input.pdf [output.eps]" echo "Converts a PDF file to a counter-clockwise-rotated EPS file" echo "If output not specified then uses basename_ccrotated.eps" exit fi if ! file "$1"|grep -q "PDF";then echo "That's not a PDF file" exit fi outfile=`echo -n "$1"|head -c -4`_ccrotated.eps if [ "$2" != "" ];then outfile="$2";fi echo "Converting $1 to $outfile (rotated counter-clockwise)" pdftk "$1" cat 1-endwest output /tmp/pdf2epsr.tmp.pdf pdftops -eps /tmp/pdf2epsr.tmp.pdf "$outfile" rm /tmp/pdf2epsr.tmp.pdf

-------- end pdf2epsccr ------------------

If the output file isn't specified then the scripts derive it

from the input filename, "somefile.pdf" becomes

"somefile_rotated.eps". Added these to my PDF associations. Ok

back to work, see how far I get...

OK getting better

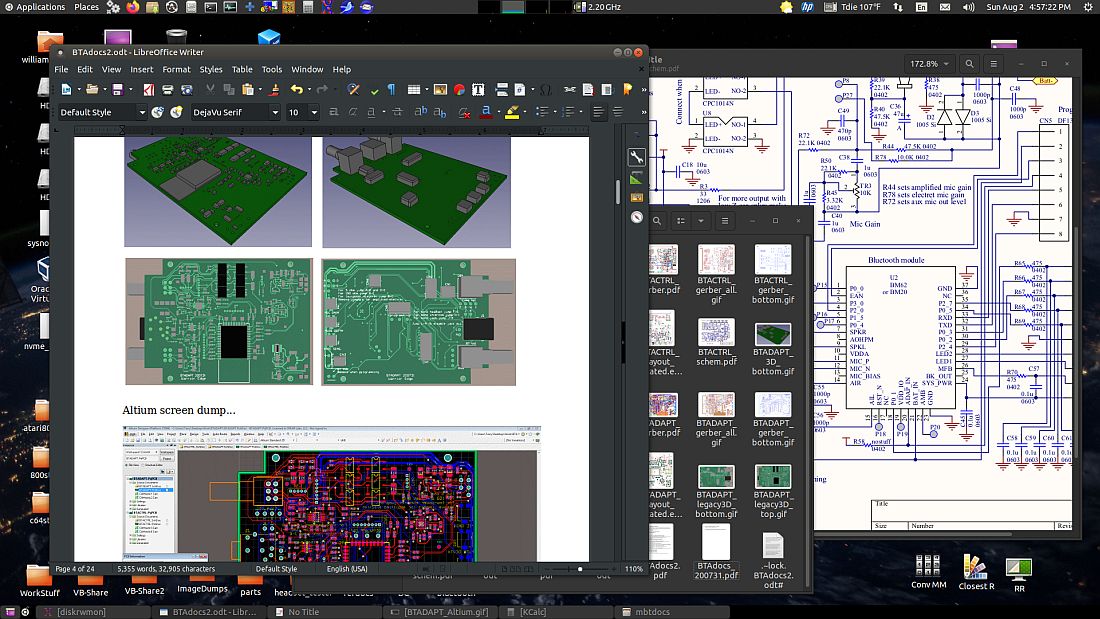

8/3/20 - Got through writing the docs and making the parts lists for the project I am working on (a Bluetooth adapter for my company's ANR headsets). LibreOffice 5.2.3 is working fine and the other tools are stepping in line. Becoming blind to Caja's cosmetic preview bugs and getting more used to the new Nautilus. Not getting used to Nautilus' more-clickity way of opening files with other associated apps, but whatever at least the overall process is getting better and the sheer speed of the new system usually makes up for the occasional UI deficiencies. My old 12.04 system can still do more, but the new system is catching up and what it can do it does much faster. While working on stuff...

And it runs DOS... while working on stuff I needed to calculate

the output power, power dissipation and current drain of the

amplifier with various loads for the docs, so fired up QBasic and

quickly wrote the following code...

SupplyV = 4.94 'main supply voltage OpenV = 3.5 'open-circuit RMS output voltage Qcur = .005 'no-signal current drain Beta = 30 'estimated output transistor beta IntR = 13 'estimated internal output resistance SerR = 35 'external resistance in series with the load PRINT PRINT "For SerR ="; SerR; " IntR ="; IntR; " OpenV ="; OpenV; PRINT " SupplyV ="; SupplyV; " Qcur ="; Qcur; " Beta ="; Beta PRINT PRINT "LoadR", "LoadPwr(mW)", "InputPwr(mW)", PRINT "PwrDiss(mW)", "SupCurrent(ma)" PRINT "-----", "-----------", "------------", PRINT "-----------", "--------------" LoadR = 300: GOSUB calcsub: GOSUB printsub LoadR = 200: GOSUB calcsub: GOSUB printsub LoadR = 100: GOSUB calcsub: GOSUB printsub LoadR = 50: GOSUB calcsub: GOSUB printsub LoadR = 32: GOSUB calcsub: GOSUB printsub LoadR = 16: GOSUB calcsub: GOSUB printsub SYSTEM calcsub: loadpower = ((LoadR / (IntR + SerR + LoadR)) * OpenV) ^ 2 / LoadR loadcurrent = SQR(loadpower / LoadR) inputcurrent = loadcurrent + loadcurrent / Beta + Qcur inputpower = SupplyV * inputcurrent outputpower = loadcurrent ^ 2 * (SerR + LoadR) dissipation = inputpower - outputpower RETURN printsub: PRINT LoadR, loadpower * 1000, inputpower * 1000, PRINT dissipation * 1000, inputcurrent * 1000 RETURN

...which when run produced this output which I copy/pasted into

my document...

For SerR = 35 IntR = 13 OpenV = 3.5 SupplyV = 4.94 Qcur = .005 Beta = 30

LoadR LoadPwr(mW) InputPwr(mW) PwrDiss(mW) SupCurrent(ma)

----- ----------- ------------ ----------- --------------

300 30.34582 76.04004 42.15388 15.39272

200 39.83481 96.74167 49.93576 19.58333

100 55.92586 145.4185 69.91856 29.43694

50 63.77551 207.0095 98.59115 41.90476

32 61.25 248.0292 119.787 50.20833

16 47.85156 303.8615 151.3346 61.51042

I have the modern QB64 equivalent, but for whatever reason it

doesn't run this simple code and doesn't indicate why. Blassic is

good for some things like this but the extra numerical precision

is unnecessary and messes up table prints so I end up getting

bogged down writing extra code to fix stuff like that instead of

getting the numbers I need and moving on. When I'm working I don't

need complications or having to figure out why something doesn't

work, so for quick programs like this I almost always grab QBasic

first. It always works and if it doesn't it's me not it.

8/8/20 - I moved my drives back to the first new machine ZaReason

made for me, with the Gigabyte B450M DS3H motherboard. The one I

thought I blew up with an indicator light that looked like a

severely overloaded PCB trace - I still think whoever designed

that was messing with old people, had me going. The second machine

with the ASRock B450M Pro4 motherboard was OK, performance-wise

was the same but it had a tendency to run somewhat hotter. Never

overheated but Tdie would often spike to 180F+ before the fan

caught up, triggering distracting notification alarms. The

Gigabyte motherboard seems to be faster on the fan, have to push

it a lot harder to trigger a temperature notification. Running

MEVO2 set to 10 threads, 0 thread delay, evolving nano warriors...

The downward drop in performance around 8.8M iterations was when

I expanded the soup from 77x21 to 158x47, generating thousands of

new unevolved warriors which were quickly displaced by the rather

strong warriors inhabiting this soup. All this is still going on

in the background as I edit this and otherwise use the system,

can't perceive any lag whatsoever.

Other reasons for making the swap back to the first machine were

the second machine has a different case with jacks on the side

instead of the front, being under my desk it put USB quantum spin

1.5 in full effect and then some. Also has a bright blue power

indicator that illuminated the whole room when on at night.. once

past the immediate work push it was time to swap back to the first

machine. The second machine has WiFi so it's better for using at

the amp shop, avoids having to run a cable for internet.

8/10/20 - This is cool...

That's one of the two IDE's (integrated development environments) available with BBC Basic for SDL, the other is more like the IDE in the original Windows version. Installation is easy, extract the files to a folder somewhere, install the SDL libraries using the commands in the included text file, then run ./mkicon.sh to make a desktop short-cut. It's not exactly a compiler, but it can create stand-alone applications (using the other IDE) by making an "app bundle" which includes the program, libraries and an interpreter. The paid-for (non-SDL) Windows version works much more like a regular compiler, producing a single EXE file that can just be run. There is also a console version without graphics and sound, and a program that converts QBasic code to BBC Basic. The latter is a Windows program but it works with wine, although I had to help it a bit by creating a "lib" directory in my user's Temp directory (what to do was fairly obvious from the error message). The Windows versions of BBC Basic (regular and SDL) work fine with wine, the SDL version doesn't require installing, just unzip somewhere and run the exe. See here for the differences between the Windows and the SDL versions.

BBC Basic home pages are here and here. Also here. BBC Basic for SDL is

open source software, here's the source code.

If a command line parameter is

supplied, the SDL and console versions run the program rather than

bringing up the IDE (or the minimal interface of the console

version). To make running and associations easier I made symlinks

in /usr/local/bin to the bbcsdl and bbcbasic (console) binaries so

I wouldn't have to specify the path and if the binaries are moved

only the symlinks have to be updated.

Associating to the console version is a bit trickier as it has to

be run in a terminal and generally file manager associations don't

do that unless a desktop file or a script is made, I chose to make

a script that runs the program in an xterm terminal window...

[updated 8/17/20]

----- begin run_bbcbasic --------------

#!/bin/bash # run_bbcbasic version 200817 # this script runs a .bbc program in bbcbasic, if not already in # a terminal then launches one. Associate this script to .bbc files # to add to right-click options (usually my default is bbcsdl) # Also, to help with running bbc basic text like a script, if running # basrun2.bbc then saves cur dir to /tmp/current_bbcbasic_dir.txt # so it can preserve the directory when running the program interpreter="bbcbasic" # interpreter (full path if not on path) if [ -t 0 ];then # if running in a terminal # set terminal titlebar name to interpreter - program echo -ne "\033]2;`basename "$0"` - `basename "$1"`\a" if (echo "$1" | grep -q "basrun2.bbc") ;then # save the current working directory # basrun2.bbc has been modified to change back to the directory pwd > "/tmp/current_bbcbasic_dir.txt" fi "$interpreter" "$@" # run program in interpreter echo -ne "\033]2;\a" # clear titlebar name exit # exit script fi # if $MyRunFlag set to ran then exit if [ "$MyRunFlag" = "ran" ];then exit;fi # set MyRunFlag to keep from looping in the event of an error MyRunFlag=ran # rerun self in a terminal # uncomment only one line, modify parms as needed # profiles must have already been created, these are mine xterm -geometry 80x25 -bg black -fg green -fn 10x20 -e "$0" "$@" # gnome-terminal --profile="Reversed" -x "$0" "$@" # mate-terminal --profile="CoolTerminal" -x "$0" "$@" # konsole --profile="BBC" -e "$0" "$@"

----- end run_bbcbasic ----------------

This script runs the specified program with the bbcbasic

interpreter in an xterm terminal (if not already running in a

terminal). It also works with the tbrandy interpreter, not

specific other than the interpreter runs the program specified on

the command line.

The program has to end with QUIT to exit when the program ends,

otherwise quits to the console. The console version doesn't come

with much in the way of docs but it works pretty much like an

old-school BASIC - use line numbers so lines can be replaced,

LIST, RUN, LOAD, SAVE, NEW etc work as expected, commands must be

capitalized. Line numbers are totally optional but to edit a

program from the console then use RENUMBER, then lines can be

replaced or use EDIT line_number to edit a line. For real editing

without needing line numbers edit it using the SDL version.

The QBasic to BBC translator seems to work well except that it uses an install command for the library, so won't run outside of the Windows environment, just says file not found. To make the converted program work anywhere first make a text version of the QBLIB.BBC library (open QBLIB.BBC in the IDE then save-as, and save it as QBLIB.BAS to convert it to text form - or just copy/paste the code into a text file), then in the converted program REM out the initial INSTALL command and copy/paste the contents of QBLIB.BAS after the converted code and save.

BBC Basic is one of the most advanced BASICs I have encountered,

the graphics abilities are amazing and despite being an

interpreter the programs run extremely fast and smooth. It has its

limits and it'll take awhile to get familiar with this dialect,

but for a lot of the kinds of programs I make it should make for a

nice cross-platform programming alternative. I'm mainly using the

open source SDL version but I elected to also purchase the Windows

version, it has a better compiler function that produces a single

stand-alone EXE file with all the dependencies encapsulated

within, simplifying distribution. The Windows IDE and the exe

files it produces run more or less perfectly on my Ubuntu 20.04

system using wine, the only thing I've found that doesn't work

under wine is the help menu items and that's because wine's CHM

viewer is broken, the help files open ok using Okular or another

Linux CHM viewer [actually kchmviewer works much better]. Or use

the web manual.

Or the PDF

version.

BBC Basic

is old, the first version included with the BBC Micro was

released around 1982. Most of the original code was written by

Sophie Wilson (who also invented the ARM processor architecture),

more recent development (the last few decades) has been mostly by

Richard Russell, who created Z80, MSDOS and other ports and

presently maintains the Windows, SDL and console versions. Being

an old language also means it is fairly stable, while there are

differences between the various platforms and sometimes changes in

the computing environment necessitate changes in low-level code,

but by and large the language isn't going to change - I like that!

Why I still use QBasic. Most of the updates now are bug fixes, and

it's open-source so if I need to I can fix stuff myself. Getting

it to compile was fairly easy, just had to install nasm

libsdl2-net-dev and libsdl2-ttf-dev in addition to the

libsdl2-ttf-2.0-0 and libsdl2-net-2.0-0 I had to install to use

the binary version. Already had the main libsdl2 and dev packages.

Basically (as usual with these things) kept running make and

installing what it complained about until it stopped complaining.

One oddity - the binary isn't recognized as a binary by file

managers etc, the type comes back as "ELF 64-bit LSB shared

object", whereas most Linux binaries are type "ELF 64-bit LSB

executable". Still runs fine from scripts, desktop files,

associations or a command line, just can't double-click a BBC

Basic binary directly from the file manager (at least not from

Nautilus or Caja).

Here are a few things I found on the net...

The Usborne books are supposed to be for kids, but they'll also

turn an aging man back into a kid.. I guess '20's count. Back to

the days of typing in programs and saving them on cassette tape.

When I got a C64 with an actual disk drive, now that was

something. Still have a lot of those programs I used to play

with.. Elite was one of my favorites. Nowadays when my computer

writes only a billion bytes to my solid state disk that's a light

usage day.

8/11/20 - here's a script that can be associated to .bbc files to

edit them using the SDLIDE.bbc editor...

----- begin edit_SDLIDE --------------

#!/bin/bash # edit a BBC program using the SDLIDE.bbc editor editfile=$(readlink -f "$1") sdlide=$(dirname $(readlink -f $(which bbcsdl)))/examples/tools/SDLIDE.bbc bbcsdl "$sdlide" "$editfile"

----- end edit SDLIDE ----------------

The script requires a symlink to the bbcsdl binary in a path

directory such as /usr/local/bin, it figures out where the bbcsdl

install directory is from the symlink using the which, readlink

and dirname commands. There is one program that can't be edited -

SDLIDE.bbc - editing the editor from itself would just be silly,

not to mention risky. If you must do that (even just to see the

code) then edit a copy.

The SDLIDE editor is turning out to be quite nice...

I can run the code from the IDE but with my run_bbcbasic script I

can right-click the .bbc file and run it in a terminal using the

console version for a more retro look... (which also lets me

copy/paste the program output)

SDLIDE isn't quite as colorful as BBCEdit but besides the huge

advantage of being able to script it for right-click editing, it

has a really nice debugging facility that shows the execution

point and the contents of variables...

Despite the advanced features, for the most part that can be

ignored for running old-school BASIC code.. I didn't have to do

that much to get the MS BASIC version of the old

"superstartrek.bas" program running - added spaces between the

keywords (BBC Basic tolerates some scrunching but sometimes it

conflicts with new keywords), changed the number prints to add

surrounding spaces and added a string before prints that started

with a number to avoid unwanted tabbing, and most importantly,

rewrote any FOR/NEXT loops with code that jumped outside the

loop.. could get away with that with some early BASICs but not

with BBC Basic and most other modern BASICs which keep the loop

variable on the stack. Easy enough to replace code like...

[example edited to clarify scope]

1200 FOR I=1 TO 7:FOR J=2 TO 9 1210 X=A(I,J):IF X=9 THEN 2000 1220 NEXT J:NEXT I 1230 REM CODE 2000 REM DISTANT CODE

...with something like...

1200 I=1 1202 J=2 1210 X=A(I,J):IF X=9 THEN 2000 1220 J=J+1:IF J<=9 THEN 1210 1222 I=I+1:IF I<=7 THEN 1202 1230 REM CODE 2000 REM DISTANT CODE

It gets a little trickier when the loop terminator is a variable

and that variable is modified during the loop, have to figure out

if it goes by the initial value, or the value when next is

executed. I always considered that undefined behavior that depends

on the implementation.

8/12/20 - In the original FOR/NEXT mod example the jump was to right after the loop, BBC Basic has a command just for that - EXIT FOR [var]. EXIT FOR exits the current FOR/NEXT, EXIT FOR I would exit all loops until it exits the FOR I loop. Also has similar commands for REPEAT and WHILE loops. Most languages have similar constructs for exiting loops, sometimes just have to leave a loop early. However, these constructs are of little use when converting old code that contains numerous jumps out of loops to destinations throughout the program, so needed a conversion method that just worked and could be applied without much thought about what the code actually did. Edited the example to make that use case more clear. There were a few other things that needed fixing.. early MS BASIC dropped through if ON var GOTO was out of range, in BBC Basic this is an error so needed a bit of code to make sure the variable has a valid target.

The Super Star Trek conversion seems to be fairly stable now so here's the code, will update it if I

find any major bugs. I modified the look of the short range scan..

the original had no divisions making it more difficult to figure

out directions. Here's how it looks now...

The stock look can be restored by changing a few variables near

the beginning of the program.

The Star Trek program (and its many versions) is one of my

favorite bits of old code to play around with, for comparison here

are versions for Atari 8-bit

and for a HP21xx minicomputer.

This conversion was fairly trivial compared to those, at least in

its current form. It is tempting to try a graphical conversion...

would be a good opportunity to learn about graphics and text

viewports and all that stuff. The Atari version, although still

text-based, used screen codes to keep the short range scan visible

all the time, at least when not damaged.

10/17/20 - Made a few minor mods to the Star Trek code so make

the input prompts consistent between different versions of BBC

Basic. Also modified the computer so that enter or 0 brings up a

list of commands, reject invalid inputs (before could crash with

divide by 0) and to display distance in both navigation units and

the actual distance (which is used only for phasor strength

calculations). See below for discussion about INPUT

behavior.

2/9/21 - A colorized version of the

Star Trek program... [under development, now has difficulty level,

save/restore and preference settings]

...makes it look better when playing it on my phone using the

Android version of BBC Basic (downloaded from this page).

To make it show up under @usr$ in the BBC Basic Android app, I

copied the program (after converting to .bbc format using the PC

IDE) to the phone's Android/data/com.rtrussell.bbcbasic/files/

folder. Still figuring out the Android version but it's pretty

darn neat. [...]

2/11/21 - After a few days of playing around with it I'm learning

more about how to use the setup.. doing anything PC-like on a

phone is different (to put it mildly). The system boots into

"TouchIDE" (touchide.bbc) which permits browsing the file system

for .bbc programs, @lib$ and @usr$ are always available.. @usr$

goes the PC-accessible directory, @lib$ goes to the library

directory and provides an easy way to get back "home", from @lib$

tap .. to go to the default immediate mode load/save area and from

there tapping examples goes to the startup directory. Holding a

file brings up options to edit, delete, rename, copy and cut. Copy

and cut permits copying and moving files (for moving it's safer to

copy then come back and delete the original). Holding empty space

past the file list brings up options for new file, new folder,

paste, list all files and exit. The editor has zooming and syntax

hi-lighting, nice! Prompts to save when exiting via the back

button. Most of the sample programs return to TouchIDE when back

is tapped (on my buttonless phone I have to swipe up to get

buttons), but it's trickier for simple programs that aren't aware

of the environment. On my phone once the keyboard disappears

(after pressing back twice to trigger Esc to interrupt a program)

it's hard to get it back, but with determined quick tapping I can

get it back (getting better at it.. too bad Esc isn't on the

phone's virtual keyboard). Ignoring cheap phone glitches, I'm

impressed! This is a full-blown BBC Basic programming

environment.. on a phone!

2/15/21 - [separate Android version removed, it's now all in the

main SST3 program] There are a few tricks to programming with the

Android version, mostly because of the strangeness of Android

itself. While files can be copied from a PC to the directory

referenced by @usr$, copying edited files there (even with the

phone's file manager) don't show up when the phone is mounted on

my PC (ugh why? to make it harder to back up my files?). The only

way I found to get files back out of the phone was to copy them to

a SD card, then the files show up when mounted. Android is

peculiar...

Matrix Brandy

8/14/20 - Matrix Brandy is a fork of the Brandy BBC Basic interpreter that adds SDL 1.2 graphics support, among things. The language isn't as complete as BBC Basic for SDL2 and it doesn't have an IDE, but it has a couple of properties that makes it particularly useful for Linux users - it can load and run basic code in (unix) text format from the command line, and if the first line starts with #! then it treats that line as a comment. These properties permit adding a #! line to the beginning of a program to specify the interpreter, making it executable, then running it as if it were any other program. For example adding "#!/usr/local/bin/brandy" (without the quotes) as the first line makes it run in a graphical environment when double-clicked (in Ubuntu it asks whether to run, display or run in a terminal). There is also a console version called tbrandy for text-only programs, adding a line like "#!/usr/bin/env -S konsole --profile="BBC" -e tbrandy" makes it use tbrandy to run the code in a konsole terminal using the (previously created) profile "BBC". Or can copy of the run_bbcbasic script presented earlier to run_tbrandy and edit it to use tbrandy instead of bbcbasic, then specify #!/usr/local/bin/run_tbrandy on the first line - then if just run is selected it runs the program in the terminal specified in the script and if run in terminal is specified it runs it in the system default terminal. Choices! All these examples assume that symlinks to the binaries and scripts are in the /usr/local/bin directory, the usual place for those things.

Matrix Brandy must be compiled from source, it's not difficult

but have to have build-essential installed along with -dev

packages for SDL 1.2 and other packages, when compiling the error

messages will say what is missing, search for the corresponding

-dev packages in Synaptic or another package manager. The tbrandy

(and sbrandy) console versions have far fewer dependencies.

Building both the graphical and console versions in the same

directory is slightly tricky, make clean has to be run before

recompiling to remove previously compiled objects or it's a shower

of errors.. this also (sometimes) has the effect of removing the

binaries so previously compiled binaries have to be preserved. To

make it easier I just threw it all into a script...

----- begin makeall.sh -----------------

#!/bin/bash # rebuild all versions # remove existing executables rm brandy rm sbrandy rm tbrandy # build sbrandy and tbrandy echo echo "Building sbrandy and tbrandy" echo make clean make text # rename binaries to preserve # (current make clean doesn't delete these but might in the future) mv sbrandy sbrandy_ mv tbrandy tbrandy_ # build SDL version echo echo "Building brandy" echo make clean make # preserve binaries mv brandy brandy_ # clean up make clean # restore binaries mv sbrandy_ sbrandy mv tbrandy_ tbrandy mv brandy_ brandy # pause so any errors can be read sleep 5

----- end makeall.sh -------------------

Being able to run BASIC code like a script is very useful for

simple programs and quick "one off" hacks. Previously I have used