Ubuntu Stuff, Continued

Index of stuff on this page...

12/27/21 - a recap... The archived oldcomp/index

page documented some of my experiences playing around with

emulated old computer systems from about 1998 to 2007, first under

Windows 95 but some time before 2005 I started playing around with

various Linuxes including DSL, Knoppix and eventually Ubuntu. Just

rediscovered select.. forgot about that one. Ubuntu

Notes covers my experiences with Ubuntu from 2006 to 2011,

when I finally got a 3ghz quad-core machine with Ubuntu 10.04

pre-installed. The comp/index

page covers from 2011 to 2012, transitioning from Gnome 2 to

Gnome 3 and

figuring out how to make the new Gnome 3 components work the way I

want them to (you know, like Windows 95 but better with two panels

and a real OS under it all). Ubuntu Stuff covers from 2012 to 2020,

when I got my current 3.2ghz hex-core system with Ubuntu 20.04 and

gobs of ram and storage. More Ubuntu Stuff covers my efforts to

tame Ubuntu 20.04 and the newer Gnome 3 into something I can use

(a mixture of Gnome 3 and MATE components), but more and more I

can focus on coding and cool software and less on the operating

system.

Since 2005 a lot has changed with desktop Linux, mostly for the

good. It's still not perfect but things work better than they ever

have, open-source applications approach and often exceed the

quality of commercial software. While commercial support is still

nothing like it is with Windows, the platform now attracts the

attention of major players, including Microsoft and . PCB software

for Linux is coming along, I can make KiCad work but I prefer an

older version of Altium Designer which I run in a Windows 7

VirtualBox window. Recently I installed a Windows 10 VirtualBox VM

for more modern Windows stuff (Solidworks Viewer etc) but it's a

LOT slower than my 32-bit Windows 7 VM. Ubuntu Linux and

VirtualBox (or VMware) makes it a safer to run an outdated copy of

Windows, basically reducing it to a large file that can be backed

up and restored, and I don't have to surf the web from within

Windows, instead I can find what I want on the native Linux side

and copy it to a shared folder. Wine has gotten quite good at

running simpler Windows software natively, I use it almost daily

to run IrfanView for image editing and LTspice for simulating

electronic circuits. When I started with Linux it did good to play

audio without glitches, now I can run Ardour and mix tracks with

studio quality output, and transmit audio to bluetooth devices

with per-device latency compensation then somehow the apps know to

send the audio a specified interval before the video - that's just

cool. I can stream NetFlix and play high resolution movies full

screen, pre-2011 my systems could barely play video. My system now

can do pretty much everything I want it to do, with few bugs. Of

course there are always bugs in a complex system but once

workarounds are found minor bugs become more like a quirky

personality.

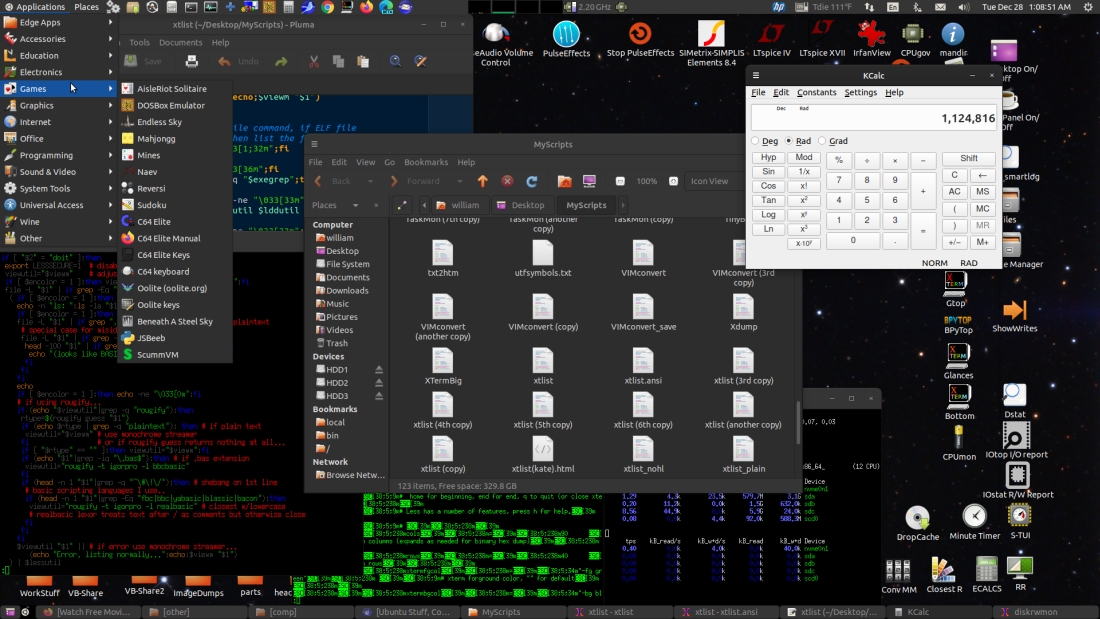

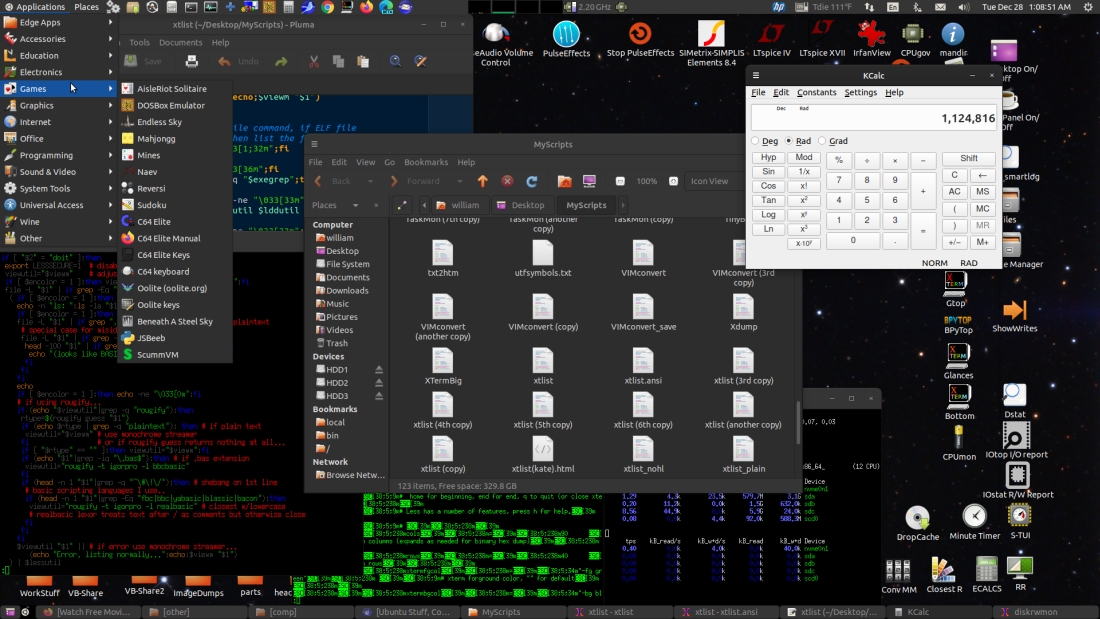

Here's my present desktop running a few things...

Contrived for the screenshot but it basically looks like that

when I'm working. The desktop environment components are Gnome

Panel aka Flashback, Caja for the desktop (Ubuntu's desktop

component has to be disabled), and the mutter compositing window

manager. The session was made from a copy of the default flashback

session, mostly just hacking in what I wanted to run (documented

on previous pages).

It works pretty much like the old Gnome 2 and the new MATE, and

works sort of like earlier versions of Windows from 95 to 7 except

it has a separate panel for tasks. I tend to have a lot of tasks.

Frequently used app and folder shortcut icons are placed around

the edges so I can quickly get to them, I almost never maximize

windows unless it's a video or something, when working I need to

quickly shove windows around as needed. Tasks are per-window so it

only takes one click to focus that window. It has an organized app

menu that only needs mouse moves and a click to find and run apps.

The desktop is essentially the root of my file system, with links

to files and folders, actual files and folders, and desktop

shortcut files. I need to be able to do (a complete set of) file

manager things with the files and folders on my desktop. Nautilus,

the Gnome file manager, can no longer handle the desktop so at the

start of the session it runs MATE's Caja file manager for the

desktop (caja -n --force-desktop). The apps in the screenshot are

a few xterm windows running various things, the Caja file manager,

MATE's Pluma text editor and KDE's calculator.

Rougify Convert

1/13/22 - I've been using my VIMconvert script to colorize

source code on my pages and I like the way it looks for most

things, but that script is rather complicated - not only does it

depend on VIM but also rewrites VIM's output on the fly. When

working on the color version of my xtlist script

I found something called "rougify" from the ruby-rouge package, I

usually prefer VIMconvert but rougify is a lot simpler and it's

always nice to have choices. Here's a "rougify_convert" script I

made, colorized by itself using the "igorpro" theme... [updated

1/21/22]

----------- begin rougify_convert ------------------------

#!/bin/bash

# rougify_convert 220121

# highlight source code using rougify from ruby-rouge package

# convert to html using ansi2html from colorized-logs package

# uses seamonkey to display html

# also uses zenity and sed

# usage: rougify_convert filename

tempdir="/dev/shm/rougify_convert_tmp" # ansi/html files will be left here

browser="seamonkey"

browserparms="--new-window"

browserprefix="file://"

defaulttheme="igorpro" # defaults if nothing selected

defaultbgcol="White"

if ! [ -f "$1" ];then exit;fi # exit if file doesn't exist

set -e # exit if error so zenity cancel works

mkdir -p "$tempdir"

fname=$(basename "$1")

ansiname="$tempdir/$fname.ansi"

htmlname="$tempdir/$fname.html"

# get list of rougify themes.. this works with 3.15.0

themelist=$(rougify help style|tail -n 1|sed -e "s/,/ /g")

theme=$(zenity --title "rougify_convert" --text "Select theme..." \

--column "" --hide-header --list $themelist)

if [ "$theme" = "" ];then theme=$defaulttheme;fi

bgcol=$(zenity --title "rougify_convert" --text "Select background..." \

--column "" --hide-header --list "Default" "White" "Black" "None")

if [ "$bgcol" = "" ];then bgcol="$defaultbgcol";fi

a2hopts=""

if [ "$bgcol" = "White" ];then a2hopts="-w -c";fi

if [ "$bgcol" = "Black" ];then a2hopts="-c";fi

if [ "$bgcol" = "None" ];then a2hopts="-n";fi

rougify highlight -t "$theme" "$1" > "$ansiname"

ansi2html $a2hopts < "$ansiname" > "$htmlname"

"$browser" $browserparms "$browserprefix$htmlname" &

----------- end rougify_convert --------------------------

Requires zenity, rougify from the ruby-rouge package, and

ansi2html from the colorized-logs package. Temp directory is set

to /dev/shm/ which on modern Ubuntu-like distros should be a

user-writable ram disk. If not change /dev/shm/ to /tmp/ or some

other writable directory. Output files are not removed to permit

reusing. The script uses zenity to prompt for the rougify theme

and ansi2html parameters to set the background color, then runs

rougify to produce an ANSI file then uses ansi2html to convert the

ANSI file to HTML. The method it uses to grab the theme list

assumes that the command "rougify help style" outputs a

comma-separated list of themes as the last line, if that changes

the script will have to be modified. Not sure why it added the

bold attribute to the pasted text but that's easy to change in the

Seamonkey Composer editor and I think like the way it looks bolded

better anyway. As with VIMconvert lately both Firefox and

Chrome-based browsers have been making it difficult to copy/paste

into Seamonkey Composer while preserving the colors, so the

browser is set to seamonkey.

mdbrowser - a Markdown File Viewer

1/13/22- Lately lots of source packages have README.md and other

docs in markdown format. Okular can display the text but doesn't

show the in-line graphics, often fetched from an external web

site. At first I found something in the repository called simply

"markdown" that converts markdown text to html including images,

but it didn't properly handle embedded script code. Soon found

"pandoc" (was already installed on my system) which does a much

better job. Both simply read a file and write the equivalent HTML

to standard output. Here's my "mdviewer" script...

---------- begin mdviewer --------------------------------

#!/bin/bash

# mdbrowser file - view a markdown file in a browser - 220113b

# requires the pandoc program and a web browser

if ! [ -f "$1" ];then exit;fi # exit if file doesn't exist

tempdir="/dev/shm/mdbrowser_tmp" # converted html file left here

mdconvert="pandoc" # name of markdown-to-html converter

mdconvparms="" # extra parms for converter

browser="google-chrome" # firefox, google-chrome, seamonkey etc

browserparms="--new-window" # don't open in tab if browser running

browserprefix="file://" # add to temp name for url

mkdir -p "$tempdir" # make temp dir if it doesn't exist

tname="$tempdir/$(basename "$1").htm" # form temp html filename

"$mdconvert" $mdconvparms "$1" > "$tname" # convert file to html

"$browser" $browserparms "$browserprefix$tname" &>/dev/null & # view it

---------- end mdviewer ----------------------------------

Temp directory is set to "/dev/shm/mdbrowser_tmp", edit if

needed. Temp HTML file is not removed.

More code colorizing stuff

1/18/22 - Found a couple more options for colorizing program

code... "pygmentize" from the python3-pygments package, and

"chroma". Also for converting the ANSI-coded text to HTML the

"aha" converter program works better for scripts that contain UTF8

codes. Here's a script that uses pygmentize and aha...

---------- begin pygmentize_convert -----------------------

#!/bin/bash

# pygmentize_convert 220118

# colorize source using pygmentize from the python3-pygments package

# then convert ANSI to HTML using aha (or ansi2html)

# then view the HTML file using the SeaMonkey browser

tempdir="/dev/shm/pygmentize_convert_tmp" # ansi/html files will be left here

browser="seamonkey"

browserparms="--new-window"

browserprefix="file://"

if ! [ -f "$1" ];then exit;fi # exit if file doesn't exist

mkdir -p "$tempdir"

fname=$(basename "$1")

ansiname="$tempdir/$fname.ansi"

htmlname="$tempdir/$fname.html"

# -f options: terminal terminal16m or terminal256

pygmentize -g -f terminal16m "$1" > "$ansiname"

aha < "$ansiname" > "$htmlname"

#ansi2html -w -c < "$ansiname" > "$htmlname"

"$browser" $browserparms "$browserprefix$htmlname" &

---------- end pygmentize_convert -------------------------

Here's a fancier script that uses chroma with zenity to prompt

for the theme, formatter and converter (ansi2html or aha, white or

black)... [updated 1/21/22]

---------- begin chroma_convert ---------------------------

#!/bin/bash

# chroma_convert 220121

# colorize source to ANSI using chroma

# then convert to HTML using aha or ansi2html from colorized-logs package

# then view the HTML file using the SeaMonkey browser

# uses zenity to prompt for style/formatter/converter, ok for defaults

# uses tr to convert to unix line ends

tempdir="/dev/shm/chroma_convert_tmp" # ansi/html files will be left here

browser="seamonkey"

browserparms="--new-window"

browserprefix="file://"

defaultstyle="pygments" # defaults if nothing selected

defaultformatter="terminal16m"

defaultconverter="ansi2html -w -c"

if ! [ -f "$1" ];then exit;fi # exit if file doesn't exist

set -e # exit if error so zenity cancel works

mkdir -p "$tempdir"

fname=$(basename "$1")

unixname="$tempdir/_$fname" # underscore prefix in case tempdir set to "."

ansiname="$tempdir/$fname.ansi"

htmlname="$tempdir/$fname.html"

# get list of available styles...

stylelist=$(chroma --list|grep "styles: "|tail -c +9)

style=$(zenity --title "chroma_convert" --text "Select style..." \

--column "" --hide-header --list $stylelist)

if [ "$style" = "" ];then style=$defaultstyle;fi

formatter=$(zenity --title "chroma_convert" --text "Select formatter..." \

--column "" --hide-header --list terminal terminal16m terminal256)

if [ "$formatter" = "" ];then formatter=$defaultformatter;fi

conv=$(zenity --title "chroma_convert" --text "Select converter..." \

--column "" --hide-header --list \

"ansi2html white" "ansi2html black" "aha white" "aha black")

converter=$defaultconverter

if [ "$conv" = "ansi2html white" ];then converter="ansi2html -w -c";fi

if [ "$conv" = "ansi2html black" ];then converter="ansi2html -c";fi

if [ "$conv" = "aha white" ];then converter="aha";fi

if [ "$conv" = "aha black" ];then converter="aha --black";fi

tr -d "\r" < "$1" > "$unixname" # convert file to unix line ends

chroma -l autodetect -s $style -f $formatter "$unixname" > "$ansiname"

$converter < "$ansiname" > "$htmlname"

"$browser" $browserparms "$browserprefix$htmlname" &

---------- end chroma_convert -----------------------------

Both scripts use the SeaMonkey browser to display the output for

easy copy/paste into SeaMonkey Composer, the generated ANSI and

HTML output is left in the temporary directory.

1/21/22 - Noticed that chroma (version 0.7.1 from the 20.04

repository) has issues with dos-formatted line ends, so added a

simple tr command to remove CR characters from a temp copy before

running chroma on it. Also updated the rougify_convert script to

use the set -e trick to exit if zenity's cancel button is clicked

and added default theme and background color for clicking ok

without selecting anything, edit to set the defaults.

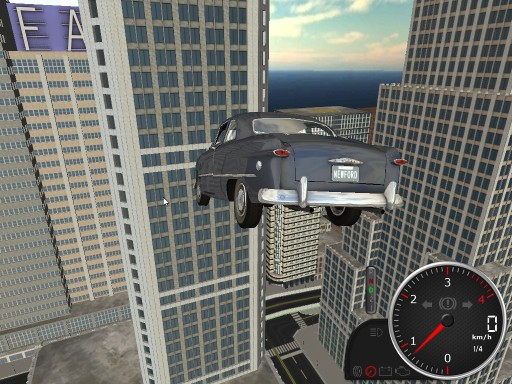

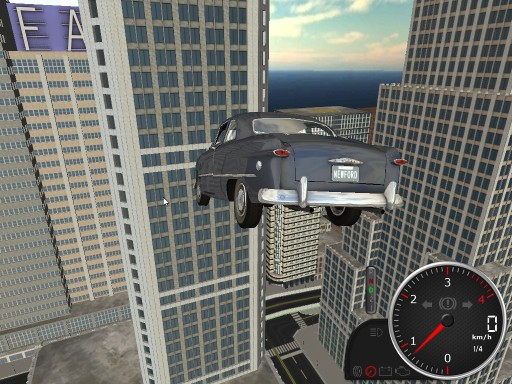

Rigs of Rods

1/25/22 - Well here's a wonderful time waster, Rigs of

Rods... (click images for bigger)

I installed the snap version from the Ubuntu Snap Store. The

install itself doesn't come with a lot of content but a wide

selection of terrains and vehicles are available from the Rigs of Rods

repository, the above screenshots are "Penguinville" with an

easily destroyed 1949 Ford Club Coupe. The default settings were

quite sluggish on my system but disabling light sources and

shadows got it up to speed. Rigs of Rods is more of a physics

simulator than a game, basically you drive around and, uh, wreck

stuff, but it's loads of fun.. can grab the car with the mouse and

give it a fling.. wheee! crunch! The Rigs of Rods' physics engine

is based on deformable rods and vertices, and is similar to the

ideas used in the commercial BeamNG.drive game for Windows.

This article

from the docs explains the physic simulation in more detail.

Cool stuff.

[1/28/22] Apparently Rings of Rods (RoR) and BeamNG both use a

similar (if not the same) reality engine, the glitches shown in

numerous BeamNG videos are basically the same glitches I get in

RoR, specifically sometimes if deformations happen too fast, it

results one or more "glitch rays" extending from the (mangled)

object. Only happens after extreme crashes and doesn't impact

normal game play. The rods and vertices model of reality is very

effective and somewhat models what happens at an atomic level -

atom nucleons contain mass and bonds between the atoms are the

rods, the atom doesn't care what angle the bond makes, that's

determined by other forces (electrostatic etc). From this simple

model emerges all sorts of macro physics. Of course RoR doesn't

simulate this at a microscopic level so it takes shortcuts, stuff

like elastic and static deformation is modeled at the rod level to

permit realistic behavior with a relatively few number of nodes.

To permit simulating vehicles without having to model every shaft

and gear, some objects in RoR have special properties - steering

can be done with special rods that shorten and lengthen, and

wheels can rotate on their own without an engine. This leads to

hilarious behavior, the steering wheel seems to be indestructible

and even the most scrunched up vehicles can still try to move.

These are optional behaviors, RoR can more accurately model the

drive train in trade for more compute power.

HTML Index Maker

1/28/22 - Here's a script that generates an HTML index file for a

directory of certain files...

---------- begin mkhtmindex ----------------------------

#!/bin/bash

# mkhtmindex 220128 - create a crude 0index.htm containing...

# <html><body><h3>Index</h3>

# <li><a href="fname1.html">fname1.html</a> </li>

# <li><a href="fname2.html">fname2.html</a> </li>

# ...etc...

# <br><body></html>

outfile="0index.htm"

matchlist="*.html *.htm *.txt *.jpg *.gif *.png"

temp1="mkhtmindex.tmp1"

temp2="mkhtmindex.tmp2"

echo "Creating $outfile..."

if [ -f "$outfile" ];then rm "$outfile";fi

if [ -f "$temp2" ];then rm "$temp2";fi

echo "<html><body><h3>Index</h3>" > "$temp1"

for file in $matchlist ;do

echo "<li><a href=\"$file\">$file</a> </li>" >> "$temp2"

done

sort -fg < "$temp2" | grep -v "\*." >>"$temp1"

echo "<br><body></html>" >> "$temp1"

rm "$temp2"

mv "$temp1" "$outfile"

echo "Done."

---------- end mkhtmindex ------------------------------

Useful for indexing a bunch of saved documentation pages, adjust

the matchlist variable as needed.

2/1/22 - Here's a recursive version, also indexes PDF files too

by default...

---------- begin mkrhtmindex ---------------------------

#!/bin/bash

# mkrhtmindex 220201 - create a crude 0index.htm containing...

# <html><body><h3>Index</h3>

# <li><a href="fname1.html">fname1.html</a> </li>

# <li><a href="fname2.html">fname2.html</a> </li>

# ...etc...

# <br><body></html>

# recursive version using find, usage...

# mkrhtmindex [maxdepth ["matchlist"]] where maxdepth is an

# integer and "matchlist" is a quoted extended regex string

# For example: mkrhtmindex 2 ".htm$|.html$|.txt$"

# creates index of .htm .html and .txt files in current dir

# and one subdirectory below the current dir

outfile="0index.htm"

matchlist=".html$|.htm$|.txt$|.jpg$|.gif$|.png$|.pdf$"

maxdepth=5 # default maximum recursion depth

temp1="mkhtmindex.tmp1"

temp2="mkhtmindex.tmp2"

if [ "$1" != "" ];then maxdepth=$1;fi

if [ "$2" != "" ];then matchlist=$2;fi

echo "Creating $outfile..."

if [ -f "$outfile" ];then rm "$outfile";fi

echo "<html><body><h3>Index</h3>" > "$temp1"

# do the current directory first...

find -L . -maxdepth 1 -type f -readable | grep -E \

"$matchlist" | sort -g | sed "s/.\///" > "$temp2"

# now add subdirectories...

find -L . -maxdepth "$maxdepth" -type f -readable | grep -E \

"$matchlist" | sort -g | sed "s/.\///" | grep "/" >> "$temp2"

while read -r file;do

echo "<li><a href=\"$file\">$file</a> </li>" >> "$temp1"

done < "$temp2"

echo "<br><body></html>" >> "$temp1"

rm "$temp2"

mv "$temp1" "$outfile"

echo "Done."

---------- end mkrhtmindex -----------------------------

This version takes optional parameters for recursion depth and

grep regex match string (which must be quoted), in the match

string "$" means end of line (otherwise would match the string

anywhere in the name) and "|" separates the search terms. If no

parms supplied then uses what's defined by the maxdepth and

matchlist variables. The sed "s/.\///" commands strip the leading

"./" characters from the filenames returned by find.

Different Themes for Different Apps

2/10/22 - I usually use a slightly modified

Adwaita Dark theme but recently I installed the Pan

newsreader and it absolutely hates dark themes. What to do...

turns out the solution is very simple - the environment variable

setting GTK_THEME="ThemeName" overrides the theme for GTK3 apps,

and the environment variable setting

GTK2_RC_FILES="/usr/share/themes/ThemeName/gtk-2.0/gtkrc"

overrides the theme for GTK2 apps. The env command (besides

listing environment variables) permits setting an environment

variable then running an app with the variable setting in effect.

For example, to run the Pan app using the Adwaita theme, edit the

launcher command line and change...

pan %U

...to...

env GTK_THEME="Adwaita" pan %U

What to edit depends on the desktop environment, for MATE or

Flashback the "Main Menu" applet can be used. For apps that are

run by association it's useful to add a script to ~/.local/bin

with the same name as the app binary containing (for example)...

#!/bin/bash

env GTK_THEME="Adwaita" /usr/bin/appbinary "$@"

...then for most Ubuntu-like systems the script will run first so

it can set the variable then run the app from its installed path.

A similar technique can be used for GTK2 apps using the

GTK2_RC_FILES variable, but it must be set to the full path to the

theme's gtkrc file.

Here's a script that lists installed GTK2 and GTK themes...

---------- begin listthemes --------------------------------

#!/bin/bash

# listthemes 220210 - lists installed themes

# if not in a terminal then relaunches itself in an xterm window

# list themes in /usr/share/themes and ~/.themes, for each directory...

# print "directoryname -"

# print "GTK2" if gtk-2.0/gtkrc exists

# print "GTK3" if gtk-3.0/gtk.css exists

# if theme.index exists then...

# print GtkTheme= entry (G=entry)

# print MetacityTheme= entry (M=entry)

# print IconTheme= entry (I=entry)

# print Comment= entry (D=entry, D= suppressed if color)

# uses sed to add color ANSI codes to print line

if ! [ -t 0 ];then # if not already in a terminal

if [ "$1" != "xt" ];then # if 1st parm not xterm (in case -t fails)

xterm -geometry 130x40 -e "$0" xt # launch in a xterm window

fi

exit

fi

color=1 # make 1 for color output, 0 for monochrome

width=$(tput cols) # get terminal width

if [ "$width" = "" ];then width=80;fi # in case that didn't work

for d in "(-ist-)" /usr/share/themes/* "(-umt-)" ~/.themes/*;do

if [ "$d" = "(-ist-)" ];then echo "(installed system themes)";fi

if [ "$d" = "(-umt-)" ];then echo "(user modified themes)";fi

if [ -d "$d" ];then # if a directory...

line=""

if [ -f "$d/gtk-2.0/gtkrc" ];then line=" GTK2";fi

if [ -f "$d/gtk-3.0/gtk.css" ];then line="$line GTK3";fi

if [ -f "$d/index.theme" ];then

e=$(grep "GtkTheme=" "$d/index.theme"|head -n1|tail -c+10)

if [ "$e" != "" ];then line="$line G=$e";fi

e=$(grep "MetacityTheme=" "$d/index.theme"|head -n1|tail -c+15)

if [ "$e" != "" ];then line="$line M=$e";fi

e=$(grep "IconTheme=" "$d/index.theme"|head -n1|tail -c+11)

if [ "$e" != "" ];then line="$line I=$e";fi

e=$(grep "CursorTheme=" "$d/index.theme"|head -n1|tail -c+13)

if [ "$e" != "" ];then line="$line C=$e";fi

e=$(grep "Comment=" "$d/index.theme"|head -n1|tail -c+9)

if [ "$e" != "" ];then line="$line D=$e";fi

fi

if [ "$line" != "" ];then

line="$(basename "$d") -$line"

line="$(echo -n "$line"|head -c "$width")"

if [ "$color" = "1" ];then # use sed to colorize line...

line="\033[0;32m$line\033[0m"

line=$(echo -n "$line"|sed "s/ - /\\\033[0m - /")

line=$(echo -n "$line"|sed "s/ GTK2/\\\033[1;33m GTK2/")

line=$(echo -n "$line"|sed "s/ GTK3/\\\033[1;34m GTK3/")

line=$(echo -n "$line"|sed "s/ G=/\\\033[0;36m G=/")

line=$(echo -n "$line"|sed "s/ M=/\\\033[0;33m M=/")

line=$(echo -n "$line"|sed "s/ I=/\\\033[0;35m I=/")

line=$(echo -n "$line"|sed "s/ C=/\\\033[0;32m C=/")

line=$(echo -n "$line"|sed "s/ D=/\\\033[0;31m /")

fi

echo -e "$line"

fi

fi

done

if [ "$1" = "xt" ];then # was launched in an xterm window

echo -n "------- press a key to close -------"

read -rn 1

fi

---------- end listthemes ----------------------------------

A few tricks in this script.. trying a new way to launch itself

in xterm if not already running in a terminal, the first ! [ -t 0

] test triggers if not already running in a terminal but still

don't fully trust that to be 100% reliable so to make sure and

avoid a potential infinite loop it also checks for the "xt"

parameter added to the xterm run self command, also used to

trigger the "press a key" prompt. The terminal width is determined

using $(tput cols), there's also a $COLUMNS variable but it's

mostly useless because if the terminal is resized anything then

run from the resized terminal won't see the change. If for some

reason $(tput cols) returns no output (like tput isn't installed)

then defaults to 80 columns. A for/do/done loop is used to iterate

over all the theme directories, the tags (-ist-) and (-umt-) are

used to trigger printing "(installed system themes)" and "(user

modified themes)". Probably don't need the if [ -d "$d ] check but

whatever. The line is built up item by item depending on what it

finds.. GTK2 GTK3 and entries in the index.theme file if it

exists, tagging the entries G=gtktheme M=metacity theme

I=icontheme C=cursortheme and D=comment/description, this line is

then trimmed to the terminal width to avoid wrapping. If color is

enabled, then sed is used to replace the tagged line entries with

colorized versions without changing the visible line length. Not a

perfect scheme, can be fooled, but works well enough for something

like this.

Output on my system looks like this...

...probably could ditch a lot of those, left over from setting up

my system in search of the perfect look. These days pretty much

just use the Adwaita themes system-wide since that's what most

GTK3 apps expect, but now I can use some of the other themes for

certain apps.

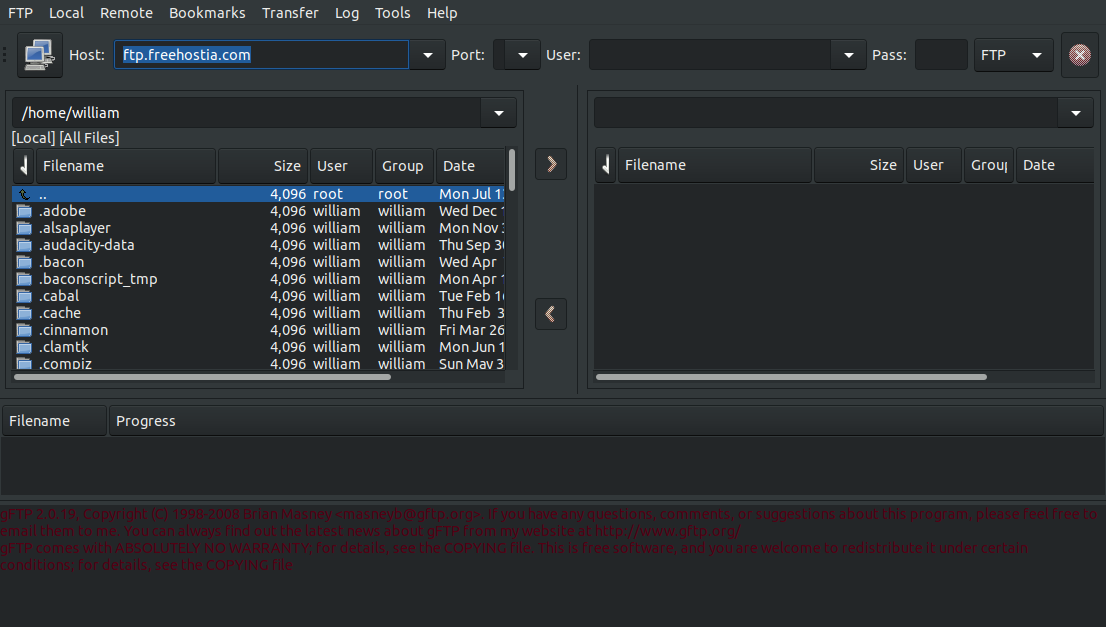

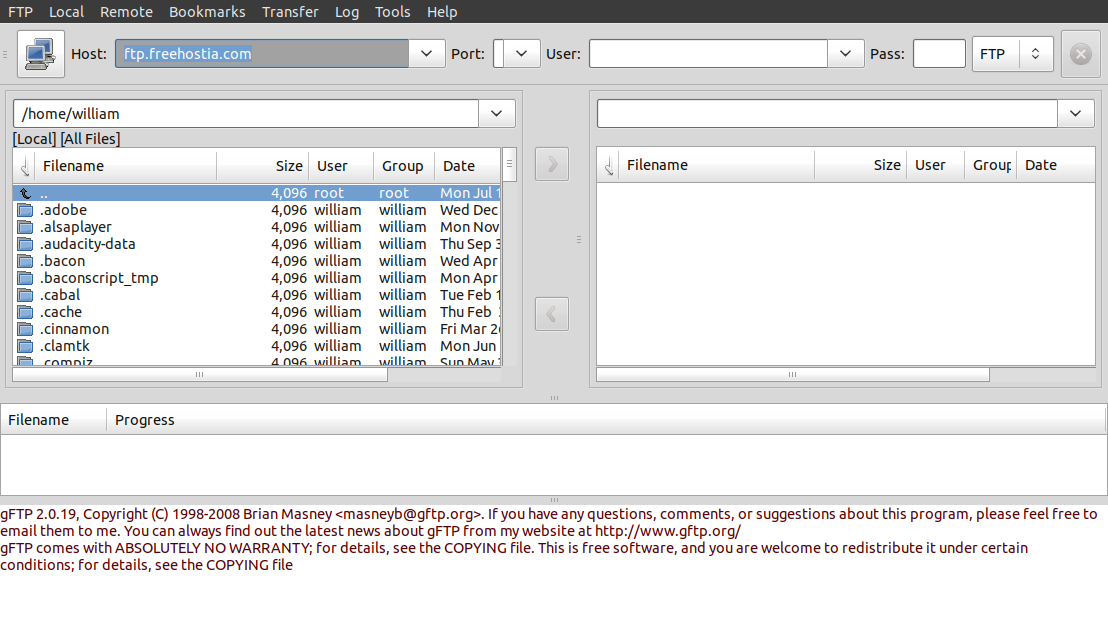

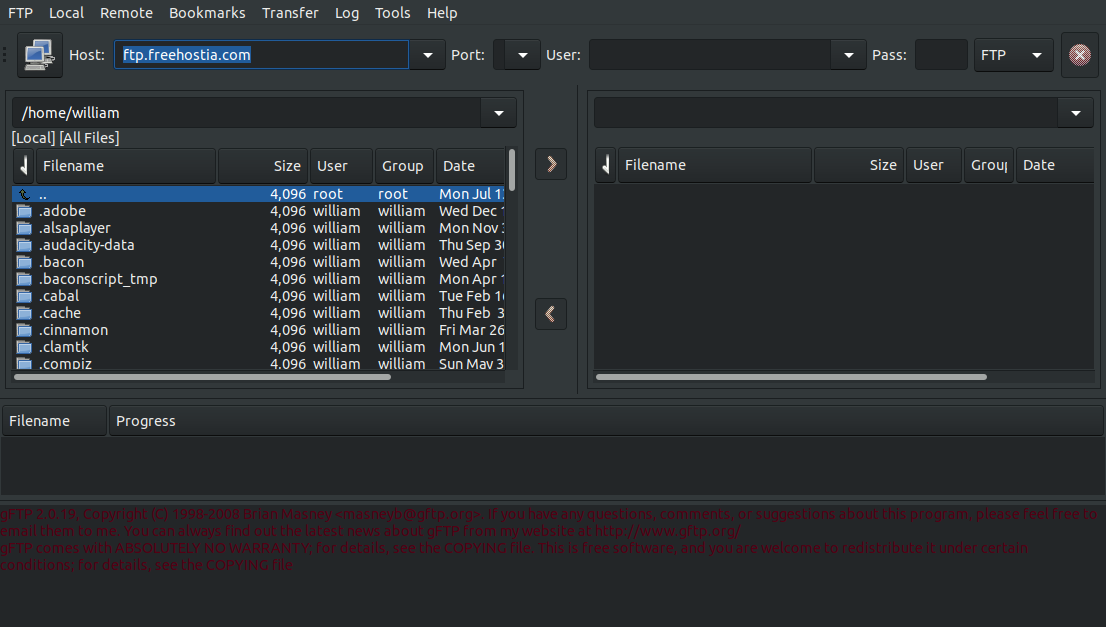

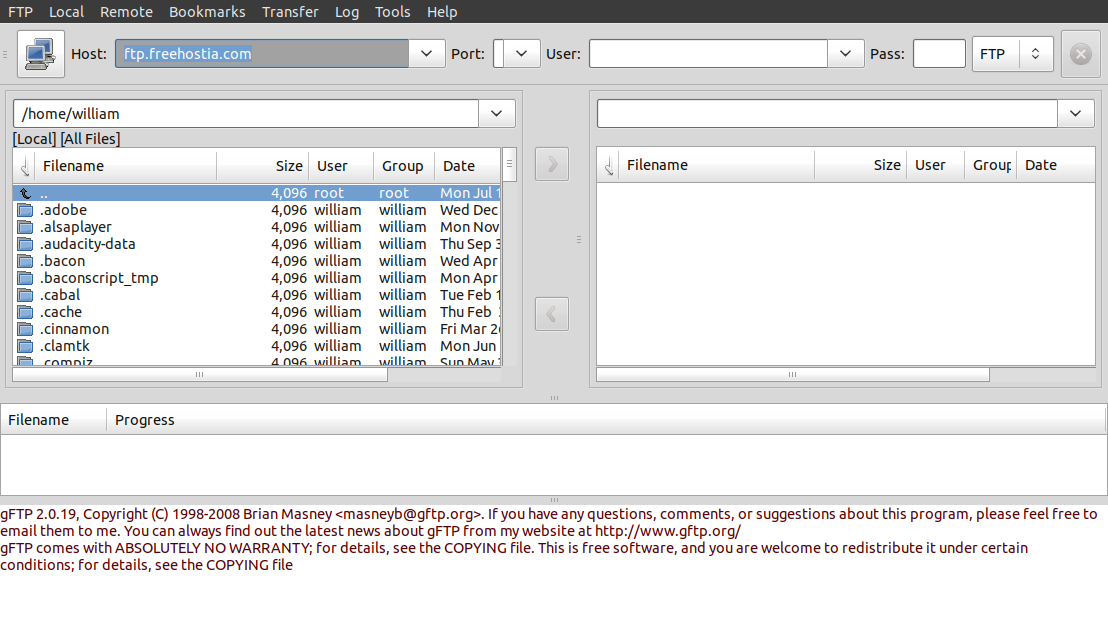

2/14/21 - I don't have many GTK2 apps left but one that I use all

the time is gFTP, and it's not so good with my default dark system

theme...

Would have been OK except for the terminal text color. Here it is

using the Shiki-Brave GTK2 theme from the shiki-brave-theme

package...

Much better! To get this I used the Main Menu applet to edit the

gFTP menu entry to use the command line...

env GTK2_RC_FILES="/usr/share/themes/Shiki-Brave/gtk-2.0/gtkrc" gftp-gtk %u

Curiously pretty much all of the GTK3 themes that also had GTK2

directories (Adwaita Ambiance etc) produced horrible results,

mixed elements from my default dark system theme and the specified

GTK2 theme producing an upchuck of yuck. GTK2-only themes tended

to work better but it was still hit and miss finding a nice

looking theme.

BTW the %u %U etc codes sometimes seen in desktop files after the

command line are for drag-n-drop support, %f for a single file, %F

for a file list, %u for a single url or file, and %U for a list of

urls or files. Equivalent to "$1" or "$@" in scripts.

XTLIST with colorized xxd output

and other sed ANSI tricks

2/21/22 - Here's a new version of my xtlist file lister script

(previous versions here and here)

modified to colorize the hex dump and to tweak the source

highlight output to avoid dark colors (among things)...

------------- begin xtlist ----------------------------------

#!/bin/bash

#

# xtlist - list a text, source or binary file in a xterm window - 220221

# usage: xtlist "filename"

# To use this script as written requires the following...

# xterm, xxd, sed, libtree from https://github.com/haampie/libtree

# (edit below to use lddtree from the pax-utils package instead),

# unbuffer from the expect package, rougify from the ruby-rouge package,

# and pygmentize from the python3-pygments package. Optional utilities

# that don't exist are eliminated or replaced with something else.

#

# Uses xxd for displaying binary files, set hexbyte to bytes per line

# If encolor=1 then uses sed to colorize xxd output

# As written uses rougify from the ruby-rouge package to colorize source

# code, can also use source-highlight, pygmentize or chroma

# If fixcolors=1 then uses sed to brighten dim color codes

# As written pygmentize is used to highlight autodetected BASIC code

# Make enbas=0 to disable BASIC-specific overrides

# Change encolor=1 to encolor=0 to disable color and use plain cat

# As written uses libtree for displaying binary dependencies (link below)

# and uses unbuffer from expect package to trick libtree into color output

# Uses less for display, main controls are up/down arrow, page up/down,

# home for beginning, end for end, q to quit (or close xterm window)

# As written enables mouse scrolling but that disables copy/paste

# Less has a number of features, press h for help

#

cols=90 # xterm columns (expands as needed for binary hex dump)

rows=50 # xterm rows

encolor=1 # 1 for colorized output, 0 to disable color

fixcolors=1 # 1 to fix dim colors (if color enabled)

xtermfgcol="-fg green" # xterm forground color, "" for default

xtermbgcol="-bg black" # xterm background color, "" for default

xtermfont="" # extra xterm parameters

#xtermfont="-fn 10x20" # xterm font, "" for default (xlsfonts for list)

#xtermfont="-fn 9x15"

xtermfont="-fn 7x14"

hexbytes=32 # xxd hexdump bytes per line (must be even)

textgrep=" text| empty" # file output to determine if a text file

exegrep=" ELF" # file output to determine if an ELF binary

# default utilities

lddutil="" # optional, utility to list ELF dependencies

ptyutil="" # optional, utility to fake terminal operation

viewm="cat" # required, default utility for streaming monochrome text

viewc="cat" # required, default utility for streaming color text

lessutil="less"

# ----- edit/comment these to specify/disable utilities ---------------

#lessutil="less -R -~" # viewer utility and options

lessutil="less -R --mouse -~" # less with mouse scroll (disables copy/paste)

# syntax-highlighting viewer for color...

viewc="rougify highlight -t igorpro" # rougify from ruby-rouge

#viewc="source-highlight --failsafe -f esc -i" # GNU source-highlight

#viewc="pygmentize -g -f terminal256" # pigmentize from python3-pygments

#viewc="pygmentize -g -f terminal256 -O style=paraiso-dark" # with theme

#viewc="chroma -l autodetect -s paraiso-dark -f terminal256"

# viewer for .bas files...

enbas=1 # 1 to enable BASIC specific overrides, 0 for highlighter default

viewbas="rougify -t igorpro -l bbcbasic"

#viewbas="pygmentize -l basic -f terminal256 -O style=paraiso-dark"

# viewer for auto-detected BASIC code (not in a .bas file)...

#viewadbas="rougify -t igorpro -l bbcbasic"

viewadbas="pygmentize -l basic -f terminal256 -O style=paraiso-dark"

# viewer for lower-case scripted basic code...

viewscrbas="rougify -t igorpro -l realbasic" # closest w/lowercase support

# utility for listing dependencies...

#lddutil="lddtree -a" # lddtree from the pax-utils package

lddutil="libtree -ap" # libtree from https://github.com/haampie/libtree

# command to trick libtree into thinking it's running in a terminal...

ptyutil="unbuffer" # unbuffer from the expect package

#ptyutil="pty" # pty from https://unix.stackexchange.com/questions/249723/

# ----- end utility edits -----------------------------

function NotExists # helper for determining if utilities exist

{ if which "$1">/dev/null;then return 1;else return 0;fi }

if [ "$2" = "doit" ];then

export LESSSECURE=1 # disable less shell edits etc

viewutil=$viewm # adjust for color or mono operation

if [ "$encolor" = "1" ];then viewutil=$viewc;else ptyutil="";fi

# make sure utilities exist, silently adjust if not

if [ "$lddutil" != "" ];then if NotExists $lddutil;then lddutil="";fi;fi

if [ "$ptyutil" != "" ];then if NotExists $ptyutil;then ptyutil="";fi;fi

if NotExists $lessutil;then lessutil="less";ptyutil="";encolor=0;viewutil=$viewm;fi

if NotExists $viewutil;then viewutil="cat";fi

if [ "$encolor$enbas" = "11" ];then

if NotExists $viewbas;then viewbas=$viewutil;fi

if NotExists $viewadbas;then viewadbas=$viewutil;fi

if NotExists $viewscrbas;then viewscrbas=$viewutil;fi

fi

file -L "$1" | if grep -Eq "$textgrep";then

( if [ "$encolor" = "1" ];then echo -ne "\033[1;33m";fi

echo -n "ls: ";ls -la "$1"

if [ "$encolor" = "1" ];then echo -ne "\033[36m";fi

file -L "$1" | if grep ",";then # display type if not plaintext

# special case for misidentified BASIC source code

file -L "$1" | if grep -q " source,";then

head -100 "$1" | if grep -Eqi "^rem |^print \"";then

echo "(looks like BASIC)"

fi

fi

fi

echo

if [ "$encolor" = "1" ];then

echo -ne "\033[0m"

if (echo "$viewutil"|grep -q "rougify");then # if using rougify...

rtype=$(rougify guess "$1") # get what type file it thinks it is

if (echo "$rtype" | grep -q "plaintext"); then # if plain text

viewutil=$viewm # use monochrome viewer

fi # if rougify guess returns nothing at all...

if [ "$rtype" == "" ];then viewutil=$viewm;fi # use monochrome

if (head -n 1 "$1"|grep -q "^\#\!\/bin\/");then # if 1st line #!/bin/

if (head -n 1 "$1"|grep -Eq "\/bash|\/sh");then # and /bash or /sh

viewutil="rougify -t igorpro -l shell" # force shell lexor

fi

fi

fi # end rougify-specific tweaks

if [ "$enbas" = "1" ];then # BASIC-specific overrides

if (echo "$1"|grep -iq "\.bas$");then # if .bas or .BAS extension

viewutil=$viewbas # viewer for .bas files

else

# autodetect old style basic without .bas extension

# this is separate because some highlighters can't handle HP BASIC

firstline=$(head -n 1 "$1"|grep -E "^ {,4}[0-9]{1,5} {1,4}[A-Z]")

if (echo "$firstline"|grep -Eq \

" REM| PRINT| LET| IF| DIM| INPUT| READ| FOR| GOTO| GOSUB");then

if ! ( # first line looks like basic, make sure...

head -n 20 "$1"|while IFS= read -r line;do # check 20 lines

if (echo "$line"|grep -vEq "^ {,4}[0-9]{1,5} {1,4}[A-Z]");then

echo "nope" # signal out of the subshell if not numbered

fi

done | grep -q "nope");then viewutil=$viewadbas

fi

fi

fi # end .bas file

if (head -n 1 "$1"|grep -q "^\#\!\/");then # if #!/ on 1st line

# basic scripting languages I use..

if (head -n 1 "$1"|grep -Eq "fbc|bbc|yabasic|blassic|bacon");then

viewutil=$viewscrbas # viewer for lowercase basic

fi

fi

fi # end BASIC specific overrides

fi # end encolor highlighter stuff

$viewutil "$1" || # if error use monochrome streamer...

(echo "Error, listing normally...";echo;$viewm "$1")

) | \

( if [ "$encolor$fixcolors" = "11" ];then # if enabled...

# this only works with 256 colors (terminal256)

# change esc[38;5;233-239m to esc[38;5;104m (gray to lighter tinted gray)

# change esc[38;5;17-21m to esc[38;5;33m (darker blue to lighter blue)

# change esc[38;5;0,16,232m to esc[38;5;231m (black to light gray)

sed "s/\x1B\[38;5;23[3-9]m/\x1B\[38;5;104m/g" \

| sed "s/\x1B\[38;5;\(1[7-9]\|2[0-1]\)m/\x1B\[38;5;33m/g" \

| sed "s/\x1B\[38;5;\(0\|16\|232\)m/\x1B\[38;5;231m/g"

else cat ; fi # otherwise pass straight through

) | $lessutil

else

# list binary file.. display output of file command, if ELF file

# also display ldd and readelf output, then list the file as a hex dump

( if [ "$encolor" = "1" ];then echo -ne "\033[1;32m";fi

echo -n "ls: ";ls -la "$1"

if [ "$encolor" = "1" ];then echo -ne "\033[36m";fi

file -L "$1";file -L "$1" | if grep -Eq "$exegrep";then

if [ "$lddutil" != "" ];then

echo; if [ $encolor = 1 ];then echo -ne "\033[33m";fi

echo "$lddutil output...";echo; $ptyutil $lddutil "$1"

fi

echo; if [ "$encolor" = "1" ];then echo -ne "\033[33m";fi

echo "readelf -ed output...";echo; readelf -ed "$1"

fi

echo; if [ "$encolor" = "1" ];then echo -ne "\033[33m";fi

echo "hex listing...";echo

if [ "$encolor" = "1" ]; then

# colorize xxd output...

xxd -c $hexbytes "$1" | while IFS= read -r line; do # read each line

line=${line//\\/\\\\} # escape backslashes to avoid misinterpreting

line="\033[32m$line\033[0m" # address color, reset color at end of line

line=$(echo "$line"|sed "s/: /: \\\033[36m/") # add hex color

line=$(echo "$line"|sed "s/ / \\\033[31m/") # add ascii color

echo -e "$line" # write modified line, convert \033 codes to binary

done

else

xxd -c $hexbytes "$1"

fi

) | $lessutil

fi

else

if [ -f "$1" ]; then

if ! (file -L "$1"|grep -Eq "$textgrep");then # if not a text file

xddcols=$((hexbytes*5/2+hexbytes+11)) # calc hex dump columns

if [ $cols -lt $xddcols ];then cols=$xddcols;fi # expand as needed

fi

xterm -title "xtlist - $1" -geometry "$cols"x"$rows" \

$xtermfgcol $xtermbgcol $xtermfont -e "$0" "$1" doit &

fi

fi

------------- end xtlist ------------------------------------

This version adds commented settings for using the pygmentize or

chroma highlighters (but I still usually use rougify), but one main

change is to the xxd output when listing binary code when color is

enabled...

# colorize xxd output...

xxd -c $hexbytes "$1" | while IFS= read -r line; do # read each line

line=${line//\\/\\\\} # escape backslashes to avoid misinterpreting

line="\033[32m$line\033[0m" # address color, reset color at end of line

line=$(echo "$line"|sed "s/: /: \\\033[36m/") # add hex color

line=$(echo "$line"|sed "s/ / \\\033[31m/") # add ascii color

echo -e "$line" # write modified line, convert \033 codes to binary

done

$hexbytes is set to the number of columns and "$1" is the

filename. First it pipes the xxd output into a while read loop

(with -r so it doesn't interpret backslashes, the IFS= ensures

that whitespace isn't trimmed but that doesn't matter in this

case) setting the line variable to each line, one line at a time.

For each line it first uses sed to convert all "\" characters to

"\\" so that echo -e won't mangle them, then it puts a green ANSI

code at the beginning of the line and a color reset code at the

end of the line, then replaces the first ": " with ": " plus a

cyan color code, then replaces the first double space with a

double space plus a red color code, then echos the line with -e to

preserve the color output. This all gets piped into less -R.

The results look like...

Just a little thing but it does make it easier to parse. Note

that there is no real processing delay from doing this, the

pipeline still processes one line at a time as needed so even if

passing a multi-gigabyte file the hex listing appears almost

instantly.

Another change is when listing colorized source code it edits the

ANSI codes on the fly to avoid darker colors, this is implemented

as an extra (code) block inserted between the highlighter code

block and less...

(

...highlight lister code...

) | \

( if [ "$encolor$fixcolors" = "11" ];then # if enabled...

# this only works with 256 colors (terminal256)

# change esc[38;5;233-239m to esc[38;5;104m (gray to lighter tinted gray)

# change esc[38;5;17-21m to esc[38;5;33m (darker blue to lighter blue)

# change esc[38;5;0,16,232m to esc[38;5;231m (black to light gray)

sed "s/\x1B\[38;5;23[3-9]m/\x1B\[38;5;104m/g" \

| sed "s/\x1B\[38;5;\(1[7-9]\|2[0-1]\)m/\x1B\[38;5;33m/g" \

| sed "s/\x1B\[38;5;\(0\|16\|232\)m/\x1B\[38;5;231m/g"

else cat ; fi # otherwise pass straight through

) | $lessutil

Since it was all straight(ish) replacements implemented as a

sequential sed pipeline rather than a while read loop, if the fix

isn't enabled then passes the text through cat instead. The regex

code was a bit tricky but slowly starting to get it.. '\x1B'

matches the binary escape chars, '\[' matches '[', '23[3-9]'

matches 233 through 239, 1[7-9] matches 17 through 19 and '2[0-1]'

matches 20-21, so '\(1[7-9]\|2[0-1]\)' matches 17 through 21 (the

'(' '|' and ')' have to be backslash-escaped), and

'\(0\|16\|232\)' matches 0, 16 or 32. I used this handy 8-bit

ANSI color code chart to figure out what to change, from

this ANSI

Escape Codes guide.

Here is the stock rougify highlight -t igorpro output...

...and with fixcolors enabled...

...I can read that better. As with the xxd colorizing code, the

extra processing adds no real overhead - large source files still

appear almost instantly, although (as with VIM) sometimes the

highlighting is hit or miss. Pygmentize seems to do better with

tricky code.

Another change to the xtlist script is separating the .BAS file

and basic scripts detection so I can use rougify and/or pygmentize

for BASIC code even when using another highlighter for other

source code, now the rougify-specific section just includes

workarounds for plain text and to force the shell lexor for

/bin/sh and /bin/bash - was getting confused by the <html>

in the mkhtmindex script's comments. There's a lot of

BASIC-specific overrides in this script (I use various BASICs a

lot) - if the file extension is .BAS (any case) then it uses

whatever viewbas is set to for the highligher utility. If the

extension is not .BAS then it checks to see if it looks like

old-style BASIC code (uppercase with line numbers), if so then

uses the viewadbas setting. This is separate from the .BAS

detection because pygmentize does a better job with old HP BASIC

source (uses [n] for arrays) which I usually save with a .TXT

extension since QBasic BBCBASIC etc will barf on it. Finally, I

write a lot of scripts using scripted basic (blassic,

yabasic

and my homemade solutions fbcscript, baconscript

and bbcscript), these are

detected if the file starts with #!/ plus a key string and use the

viewscrbas setting. All of this BASIC specific stuff can be

disabled by making enbas=0.

The script is starting to get a bunch of dependencies so added

code to verify that the utilities exist, if any of the optional

utilities don't exist (xterm xxd and sed assumed to exist) then

tries to fall back to something that'll at least work. If the main

highligher viewc doesn't exist then uses cat, if the BASIC

specific highlighters don't exist then falls back to the main

highlighter, and if lddutil or ptyutil doesn't exist then disables

those. The script triggers a few shellcheck warnings but these are

intentional - in several places a $variable is not quoted to force

expansion to separate parameters, and var=${var//search/replace}

doesn't (as far as I can tell) do what I need to do.

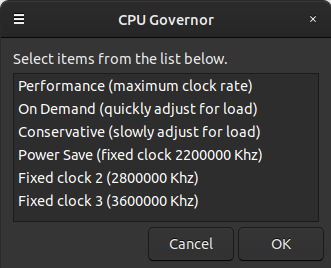

Controlling the CPU speed, take 2

3/21/22 - The previous version of my CPUgov script

uses the cpufreq-set utility to select one of four CPU speed

governors - performance, ondemand, conservative, or powersave.

Like most computers these days it seems, the cooling fan in my

system can't support full CPU utilization continuously, and the

AMD Ryzen 3600 in my system doesn't gracefully throttle, the whole

thing just shuts off if it gets too hot (ouch). So typically I use

the powersave governor and have a script that periodically checks

the temperature, forcing powersave if things get too hot. Recently

I discovered that I could bump up the powersave frequency from

2.2Ghz to 2.8Ghz and the system was still fine thermally even with

100% processor usage, so redid my CPUgov script to allow selecting

additional fixed clock rates...

--------------- begin CPUgov ---------------------------

#!/bin/bash

# CPUgov - set all CPU's to specified governor - 220321

# requires Zenity and the cpufrequtils package, and CPU/kernel

# support for frequency control. For governor descriptions see...

# https://www.kernel.org/doc/Documentation/cpu-freq/governors.txt

# The cpufreq-set binary must be made root suid, i.e..

# sudo chmod u+s /usr/bin/cpufreq-set

# ..otherwise this script has to run as root.

cpudev="/sys/devices/system/cpu"

maxcpu=31 # max cpu scanned, non-existent cpus ignored

# bail if the CPU doesn't have adjustable frequencies...

if ! [ -e $cpudev/cpu0/cpufreq/scaling_available_frequencies ];then exit;fi

function GetFreqs # helper to get last three available frequencies

{ freq1=$1;freq2="";freq3="" # return freq1 freq2 freq3 in ascending order

if [ "$2" != "" ];then freq1=$2;freq2=$1;freq3="";fi # empty if not avail

while [ "$3" != "" ];do freq1=$3;freq2=$2;freq3=$1;shift;done }

GetFreqs $(cat $cpudev/cpu0/cpufreq/scaling_available_frequencies)

sel2="";if [ "$freq2" != "" ];then sel2="Fixed clock 2 ($freq2 Khz)";fi

sel3="";if [ "$freq3" != "" ];then sel3="Fixed clock 3 ($freq3 Khz)";fi

selection=$(zenity --title "CPU Governor" --hide-header \

--column "" --width 330 --height 230 --list \

"Performance (maximum clock rate)" \

"On Demand (quickly adjust for load)" \

"Conservative (slowly adjust for load)" \

"Power Save (fixed clock $freq1 Khz)" \

"$sel2" "$sel3"

)

gov="";minfreq=$freq1

if [ "${selection:12:1}" = "2" ];then minfreq=$freq2;fi

if [ "${selection:12:1}" = "3" ];then minfreq=$freq3;fi

if [ "${selection:0:3}" = "Per" ];then gov=performance;fi

if [ "${selection:0:3}" = "On " ];then gov=ondemand;fi

if [ "${selection:0:3}" = "Con" ];then gov=conservative;fi

if [ "${selection:0:3}" = "Pow" ];then gov=powersave;fi

if [ "${selection:0:3}" = "Fix" ];then gov=powersave;fi

if [ "$gov" != "" ];then

for cpu in $(seq 0 1 $maxcpu);do

if [ -e "$cpudev/cpu$cpu" ];then

cpufreq-set -c $cpu -g $gov -d $minfreq

fi

done

fi

--------------- end CPUgov -----------------------------

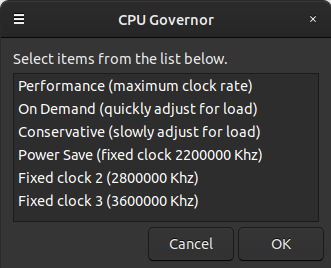

Here's how it looks on my system...

It lists the last 3 entries from the

scaling_available_frequencies file (which for my system is all of

them) with the lowest one being Power Save, the other options also

use the powersave governor but bump up the scaling_min_freq

variable. If there are less than 3 available frequencies it lists

what's available, the blank options are selectable but do nothing.

Like the previous CPUgov this script uses the cpufreq-set utility

from the cpufrequtils package, to avoid having to have root

permissions, prompt for the password etc the cpufreq-set binary is

set to root suid using the chmod command listed in the comments.

Here is a version of the script that does not use the cpufreq-set

utility....

--------------- begin CPUgov2 --------------------------

#!/bin/bash

# CPUgov2 - set all CPU's to specified governor - 220321

# requires Zenity and CPU/kernel support for frequency control.

# For governor descriptions see...

# https://www.kernel.org/doc/Documentation/cpu-freq/governors.txt

#

cpudev="/sys/devices/system/cpu"

maxcpu=31 # max cpu scanned, non-existent cpus ignored

pw="" # put something here to avoid prompting for the sudo password

# bail if the CPU doesn't have adjustable frequencies...

if ! [ -e $cpudev/cpu0/cpufreq/scaling_available_frequencies ];then exit;fi

function GetFreqs # helper to get last three available frequencies

{ freq1=$1;freq2="";freq3="" # return freq1 freq2 freq3 in ascending order

if [ "$2" != "" ];then freq1=$2;freq2=$1;freq3="";fi # empty if not avail

while [ "$3" != "" ];do freq1=$3;freq2=$2;freq3=$1;shift;done }

GetFreqs $(cat $cpudev/cpu0/cpufreq/scaling_available_frequencies)

sel2="";if [ "$freq2" != "" ];then sel2="Fixed clock 2 ($freq2 Khz)";fi

sel3="";if [ "$freq3" != "" ];then sel3="Fixed clock 3 ($freq3 Khz)";fi

selection=$(zenity --title "CPU Governor" --hide-header \

--column "" --width 330 --height 230 --list \

"Performance (maximum clock rate)" \

"On Demand (quickly adjust for load)" \

"Conservative (slowly adjust for load)" \

"Power Save (fixed clock $freq1 Khz)" \

"$sel2" "$sel3"

)

if [ "$pw" = "" ];then

pw=$(zenity --title "CPU Governor" --password)

fi

gov="";minfreq=$freq1

if [ "${selection:12:1}" = "2" ];then minfreq=$freq2;fi

if [ "${selection:12:1}" = "3" ];then minfreq=$freq3;fi

if [ "${selection:0:3}" = "Per" ];then gov=performance;fi

if [ "${selection:0:3}" = "On " ];then gov=ondemand;fi

if [ "${selection:0:3}" = "Con" ];then gov=conservative;fi

if [ "${selection:0:3}" = "Pow" ];then gov=powersave;fi

if [ "${selection:0:3}" = "Fix" ];then gov=powersave;fi

if [ "$gov" != "" ];then

for cpu in $(seq 0 1 $maxcpu);do

if [ -e "$cpudev/cpu$cpu" ];then

echo -E "$pw"|sudo -S -p "" bash -c \

"echo $minfreq > $cpudev/cpu$cpu/cpufreq/scaling_min_freq; \

echo $gov > $cpudev/cpu$cpu/cpufreq/scaling_governor"

fi

done

fi

--------------- end CPUgov2 ----------------------------

This version prompts for the sudo password, to avoid the prompt

the password can be hard-coded where indicated. Usually that is

considered bad security practice but for a personal system it

doesn't matter that much - if a malicious app/user has access to

your home directory you have bigger problems.

Here are the other CPU speed scripts I use, both are run at

startup and require the cpufreq-set utility with root suid

permissions. These scripts are specific to my system and will

require adjustments to use, at least for the maxcpu and freq

variables. The maxcpu variable is set to the highest core number.

Use the command cat

/sys/devices/system/cpu/cpu0/cpufreq/scaling_available_frequencies

to list the available frequencies.

This script selects the powersave governor with the minimum

frequency...

--------------- begin CPUpowersave ---------------------

#!/bin/bash

# set all cpu cores to powersave mode

# requires cpufreq-set from cpufrequtils set with chmod u+s

maxcpu=11

gov=powersave

freq=2200000

for cpu in $(seq 0 1 $maxcpu);do

cpufreq-set -c $cpu -g $gov -d $freq

done

--------------- end CPUpowersave -----------------------

This script periodically checks the temperature and if too hot

engages the powersave governor with the minimum frequency...

--------------- begin autotempthrottle -----------------

#!/bin/bash

# autotempthrottle 220321

# automatically throttle CPU to powersave if temp exceeds threshold

# doesn't throttle up, use CPUgov script to throttle back up after triggering

# requires cpufreq-set from cpufrequtils set with chmod u+s

# requires sensors to read temperature

set -e # exit script if an error occurs

threshtemp=190 # degrees F to trigger throttle

sensorid="Tdie:" # sensor to read

maxcpu=11 # number of cores to throttle

gov=powersave # governer name

freq=2200000 # frequency to throttle to

sleeptime=10 # time to sleep between checks

while true;do # loop forever

sleep $sleeptime

dietemp=$(sensors -f|grep "$sensorid"|awk '{print $2}'|head -c-6|tail -c+2)

if [ $dietemp -ge $threshtemp ];then

# wait some more in case momentary

sleep $sleeptime

dietemp=$(sensors -f|grep "$sensorid"|awk '{print $2}'|head -c-6|tail -c+2)

if [ $dietemp -ge $threshtemp ];then

# echo "Throttling back"

for cpu in $(seq 0 1 $maxcpu);do

cpufreq-set -c $cpu -g $gov -d $freq

done

fi

fi

done

--------------- end autotempthrottle -------------------

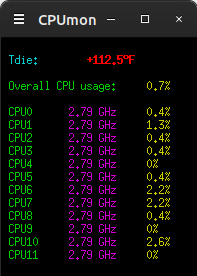

This script checks the temperature and the speed of the CPU cores

to make sure everything is working right... (updated)

--------------- begin CPUmon ---------------------------

#!/bin/bash

# CPUmon 221227 - displays CPU temperature, frequencies and usages

# requires xterm, sensors and cpufreq-info (from cpufrequtils)

# uses /dev/shm for temp files

maxcpu=11 # number of cores minus 1

sensorid="Tctl:" # sensor to read

if [ "$1" = "doit" ];then

stathead=$((maxcpu + 2))

clear # clear screen

echo -ne "\033[?25l" # make cursor invisible

# read /proc/stats and write file with entries for cpu[n] runtime idletime,

# first line is "cpu" with overall stats, additional lines cpu0 cpu1 etc

# runtime calculated by adding system nice and user times together

cat /proc/stat|head -n$stathead|\

awk '{print $1" "$2+$3+$4" "$5}'>/dev/shm/stats2.tmp

while true;do # loop until ctrl-c or window closed

sleep 2 # seconds between updates

# copy previous stats to temp file

cp /dev/shm/stats2.tmp /dev/shm/stats1.tmp

# refresh current stats

cat /proc/stat|head -n$stathead|\

awk '{print $1" "$2+$3+$4" "$5}'>/dev/shm/stats2.tmp

# combine previous and current stats and compute CPU usages

# stats variable contains lines for cpu[n] percentage (one decimal place)

stats=$(paste /dev/shm/stats1.tmp /dev/shm/stats2.tmp|\

awk '{print $1" "int((($5-$2)/(($6-$3)+($5-$2)))*1000)/10}')

echo -ne "\033[H\033[0;36;40m" # home cursor, set initial text color

echo # print CPU temp

echo " $(sensors -f|grep "$sensorid") "|grep --color "+.*$"

echo

# set colors and print overall cpu usage from stats variable

echo -e "\033[32m Overall CPU usage:\033[33m $(echo "$stats"|\

grep "cpu "|awk '{print $2}')% "

echo

for i in $(seq 0 1 $maxcpu);do # loop i from 0 to maxcpu

# set color, print CPU label, tab/set color, print CPU freq (no newline)

echo -ne "\033[32m CPU$i \t\033[35m $(cpufreq-info -c $i -fm) "

# tab, set color, print cpu usage from stats variable

echo -e "\t\033[33m$(echo "$stats"|\

grep "cpu$i "|awk '{print $2}')% "

done

done

else

xterm -geometry 32x$((maxcpu + 7)) -e "$0" doit

fi

--------------- end CPUmon -----------------------------

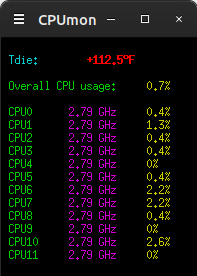

It should produce a display something like this...

Goodbye 2022, Hello 2023

12/27/22 - For some reason in the CPUmon script above the "Tdie:"

sensor no longer works, had to change it to "Tctl:" (while at it

updated the script so that it requires less editing for a

different number of cores). Had to do the same in Gnome Panel's

temp display applet, some update or something. But overall my

hacked Ubuntu 20.04 with Gnome Panel and Caja and Mutter has been

reasonably stable. There have been a few glitches - occasionally

video playback would freeze requiring logging out and back in but

that hasn't happened in awhile (probably buggy NVidia drivers that

got updated), sometimes if I copy or move stuff too fast Caja

would freeze but after a bit it would unfreeze by itself (and

learned to move slower), and occasionally Caja would outright

crash (like when trying to view properties of a snap folder), for

that made a Gnome Panel shortcut to caja -n --force-desktop to get

it back. No big deal, outright system crashes have been very rare.

I'm trying out Ubuntu 22.04 in VirtualBox - can be hacked into

submission but haven't figured out theming with the Gnome Panel

flashback session yet so have it set to Mate for the moment. The

desktop icons package for the flashback session is improved, but

it's still far from working for me - I need a desktop that does

just about everything the file manager does (because ideally it is

the file manager). Thank goodness for Caja and Mate. I was a bit

surprised that Gnome Panel is still around. Gnome Shell, that's a

hard no for me. Wayland doesn't work under VirtualBox (and never

has on my real system, presumably because of NVidia). My current

system works well and now that there's AppImage, FlatPak and even

Snap I have no compelling reason to upgrade to 22.04 at this

point, I can still have the latest versions of major apps if I

want.

Of the new distribution formats my favorite for simpler apps is

AppImage - it's just a binary you stick somewhere and run it, user

is responsible for making menu entries, symlinks, updating etc.

For more complicated apps FlatPak is nice, it takes care of making

menu entries and updates (when I want), currently the only FlatPak

app I have is the latest KiCad but that will likely change.

FlatPak installs are actual files (under /var/lib/flatpak) that

can be browsed and manipulated if need be (but that's probably

discouraged), faster to load but uses more disk space. AppImage

packages are self-mounting compressed file systems, the files are

accessible only when the app is running and cannot be modified.

Snap... it's kind of like FlatPak but the file systems are

compressed and mounted all the time, and updates are performed

with regular updates except it doesn't tell me what it's updating

and it doesn't remove the previous versions of apps.. I guess I

need to make a script for that. Not crazy about the Snap format

(especially being mounted all the time polluting my df listing)

but it's the default for Ubuntu and I have several Snap apps, they

work. All of these formats pretty much solve the previous issue of

requiring the latest operating system to run the latest version of

something.

So yay, upgrading my OS is not as important now. Then again I ran

Ubuntu 12.04 until my previous computer literally died, and on the

Windows side of things 32-bit Windows 7 (in VirtualBox) is still

my main workhorse (got Windows 10 too but it's slower, doesn't

work as well, and only good for new stuff that won't run on 7).

So on to 2023, Happy Holidays and Happy New Year! Peace.

1/1/23 - WooHoo. Not much on resolutions and all that but one

thing I want to get a handle on soon is making halfway

decent-looking GUI apps - my programs tend to look like they came

from the early '80's with 1) 2) 3) menus and all that. Which works

fine but the newer generation and the fine folks I work for kinda

wonder about me sometimes. I'm fine but not so fine with modern

programming languages and environments - why the heck does

everything have to be so darn difficult? I'm mainly a BASIC (or

something like it) programmer but I can deal with C or Pascal or

other languages, the main reason I like BASIC is because that's

what I grew up with and the less I have to refer to docs for the

basics the more I can get done. Cross platform is nice but mainly

need to target Windows so that eliminates Gambas. I like FreeBasic

a lot but to do anything graphically requires handling everything.

Same with BBC Basic, both of these can make me Windows binaries

that work but don't do much for me in the pretty GUI department.

There's Lazarus for Free Pascal but so far haven't figured out how

to make it work. Recently found something called BlitzMax that can

make cross platform graphical programs but it's another one where

the programmer has to basically invent their own GUI framework.

Might be missing something.

Then there's Visual Basic. I bought the VB6 learning edition over

20 years ago, and stuff I made with it back then still works today

under wine or Windows. It was so easy to make simple GUI apps!

Didn't use it that much because back then didn't really need GUI

stuff (QBasic was fine for me) but things change. I've tried to

install VB6 into wine but never could make it work right. So what

the heck installed it into my Windows 7 VirtualBox VM I use for

work stuff. First try wasn't too successful - mostly worked but

the docs didn't - but with a bit of internet help got it

installed. The main tricks are to make sure UAC is disabled and to

run the setup program as administrator, then once installed set

the VB6.EXE file for XP compatibility mode and to run as

administrator. After that could access the online docs and my old

Galaxy toy

recompiled and ran fine. Mostly.. was slightly clipping off the

right and bottom so tinkered with a few numbers in the resize part

to account for (I presume) Windows 7's fatter window borders. And

it's very fast, recompiling is instant. Compared to modern bloated

programming stuff this is like wow.

The app I want to make for work stuff is for making adjustments

to an intercom I make for Racing Radios, each channel contains a

miniature DSP chip which provides automatic gain, limiting, EQ,

background noise suppression and other cool stuff. The control PIC

uses a simple serial protocol for loading DSP code into the

onboard EEPROM and changing the settings for that code.. right now

I'm using a FreeBasic console app for this but it's very crude - I

want something that has sliders for gain EQ and other variable

settings and checkboxes for on/off features. The learning edition

of VB6 doesn't provide access to the serial port but there's a

wrapper called XMComm that I've used before and is still

available. Another workaround would be to shell to something made

with FreeBasic.

Why bother with VB6 when VB.Net is free now? Probably a good

question. I have it installed in my Win10 VM and it does work,

it's just a whole lot more complicated (!!!). Instead of making a

single standalone EXE, even for a simple console app it makes an

EXE, a DLL, a PDB file (?) and a couple of JSON files, all but one

(the deps json) were required to run the program (a simple tiny

basic interpreter console app). Plus the dot net 6 run time which

was a bit hard to find.. MS edge knew I was using Win 7 (because

it told me) and "some results were removed", had to drill down a

bit to find an installer for 32 bit Windows 7. They really don't

want people running old OS's but that is not my reality. Old VB6

apps were so common that chances are the runtime is already

installed. So.. I don't know, will keep plugging with VB.Net but

in the mean time can be actually making stuff that works.

2023 So Far

11/10/23 - It's been awhile since I made an entry here, busy with

work and there hasn't been much of significance to report but I

have been dabbling with a few things.

Still using Ubuntu 20.04 with my custom "flashback" session with

Mate's Caja file manager handling the desktop, still using

SeaMonkey Composer to make my web pages, my VIMconvert script for

colorizing code works great and seems to work better after a

recent SeaMonkey update - colorized code copy/pastes very cleanly

from SeaMonkey Browser to Composer, no extra newlines. Still using

IrfanView under wine as my primary image editor, running a fairly

recent wine 8.0.2 from the winehq repository, it's not perfect but

works quite well for simpler Windows apps. I'm running MATE on the

shop machine, basically a twin of my main machine that I bought

after I thought my motherboard was on fire. MATE's panel

isn't as fancy as Gnome Panel but it works fine. Only thing I had

to do was install Caffeine Indicator (and remember to activate

it), otherwise it turns off the monitor after a period of time,

which is inconvenient when it is showing a schematic of something

I'm working on. Probably something to do with my hardware, doesn't

happen when running in VirtualBox.

The Ubuntu 20.04 operating system itself is mostly boring and

functional and lets me do my work, it might be slightly outdated

but for the most part that doesn't matter, more and more apps are

being packaged as AppImage, FlatPak or Snap which permits running

the latest versions of apps regardless of the OS version and

without having to replace other installed versions. AppImage is

the simplest, little or no OS support is needed, they're just

binaries that can be run from anywhere. I have to make my own menu

entries and launchers and manually update as needed, to keep

things easy I make short name symlinks to the appimage files so my

menu entries won't change when I update an app.

FlatPak and Snap have a repository and update system, and usually

take care of making menu entries and/or installing symlinks for

integrating into the system. I much prefer the FlatPak system the

apps start much faster and it's not so much "in my face". Snap on

the other hand would update whenever it wanted do to the point I

had to disable automatic snap updates, but the manually-run system

updater never worked properly for snaps. Here are the scripts I

use to keep FlatPak and Snap packages updated, and for Snaps

delete unused runtimes...

------- begin updateflatpak --------------

#!/bin/bash

if [ -t 0 ];then

echo

echo "This script will update all flatpak packages and"

echo "remove unused packages. Press any key to continue..."

echo

read -n 1

echo

flatpak update

echo

echo "Removing unused packages..."

echo

flatpak uninstall --unused

echo

echo "----- press any key ------"

read -n 1

else

xterm -geometry 120x40 -e "$0"

fi

------- end updateflatpak ----------------

------- begin updatesnaps ----------------

#!/bin/bash

# refresh all snaps then remove all disabled snaps

if [ -t 0 ];then

echo

echo "This script will update all snap packages as needed then will"

echo "remove all disabled snap packages. Press any key to continue..."

echo

read -n 1

echo

sudo snap refresh

echo

snap list --all | grep "disabled$" \

| awk '{ print "sudo snap remove --revision="$3 " --purge " $1 }' | bash

echo

echo "----- press any key ------"

read -n 1

else

xterm "$0"

fi

------- end updatesnaps ------------------

Flatpak is easier to deal with and as far as I can tell doesn't

hold on to dead dependencies, the script is mainly to run the

update command in a larger terminal to view its cool interface .

Snap made me write a command grep awk bash pipeline just to remove

old versions of dependencies, first time I ran that script it

recovered several gigabytes.

I have 22.04 running in VirtualBox, evaluating if I might want to

upgrade... not all that impressed. MATE is OK, but the "flashback"

session has deteriorated. Could only get it to work with the

Metacity window manager (although that is somewhat improved), on

my 20.04 system I usually use Mutter. Gnome Panel - my primary

interface on my 20.04 system, is obviously an unwanted cast-aside

at this point - logged into the flashback session (in which I had

already configured to use Caja for the desktop because they

lobotomized Nautilus but otherwise hadn't done much to), tried to

add a terminal to the panel... it added a clock instead. Really? I

cannot see myself continuing with Gnome past 20.04 when it can't

get even the most simplest basic things right, it's like nobody

even bothered to try it past booting to see if it even actually

worked. But of course, Gnome wants the panel with desktop icons

thing to go away as it doesn't meet their vision. But their vision

does not meet my needs and they took away the options that did

meet my needs. So I guess it's MATE or XFCE for future systems.

Then again I ran 12.04 for over 8 years until I finally got tired

of incompatibilities.

Updated my old minute timer script, now it

uses zenity's progress bar feature...

------- begin minutetimer -----------------

#!/bin/bash

# minutetimer 230115

# a simple timer.. enter minutes to delay then it pops up an

# alert after that many minutes have passed. Floating point ok.

# shows a progress bar now.. cancelling the progress bar closes

# the bar but does not cancel the actual timer

# caution... not much error checking when entering minutes

if [ "$1" = "showprogress" ];then

# sleep interval=seconds/100

interval=$(echo -|awk "{print $2 / 100}")

(for i in $(seq 1 100);do sleep $interval;echo $i;done)|zenity --progress \

--title "Minute Timer" --width 300 --text "Waiting $2 seconds..." --auto-close

exit

fi

minutes=$(zenity --title "Minute Timer" --width 300 --entry --text \

"Enter minutes to delay..." )

if (echo "$minutes"|grep " ">/dev/null);then minutes="";fi

seconds="";if [ "$minutes" != "" ];then

seconds=$(echo -|awk "{if ($minutes>0) {print $minutes*60}}")

fi

if [ "$seconds" = "" ];then

zenity --title "Minute Timer" --width 300 --info --text \

"Not running."

exit

fi

# show a progress bar display in a background process

$0 showprogress $seconds &

sleep $seconds

zenity --title "Minute Timer" --width 300 --info --text \

"It's been $minutes minutes ($seconds seconds)"

------- end minutetimer -------------------

Despite the progress bar running in a separate shell and calling

sleep 100 times with an awk-calculated period, at least for short

intervals the progress bar closes pretty much exactly when the

"It's time" message pops up. I use this script so I won't burn my

pizza.

Artificial Intelligence - Recently large language models

(LLMs) have become available that have almost uncanny abilities -

although at their core they're just advanced auto-complete

engines, they exhibit emergent behaviors that can at times make

them appear intelligent. I have not interacted with the larger

models such as ChatGPT, just toy models that can run on a regular

PC, but even these can appear to be able to reason to a certain

degree. But it doesn't take long to figure out that it is mostly

just an illusion and the quality of the output can vary widely

depending on what it was trained on. Wizard Vicuna 30B passes the

dead duck test - which travels faster, a dead duck or a turtle?

correctly noticing that dead ducks cannot travel without help -

but when asked how to listen to a listen to a vinyl record without

a record player it didn't have a clue (suggesting I borrow one)

and when pressed on how to make a mechanical record player it gave

an impressive step by step guide that didn't make any sense. But

it acknowledged that my idea of using a spinning wheel and a cone

of paper with a sewing needle in the end would theoretically work

but cautioned me that it would scratch my records. When asked

about other technical subjects it gets some things right, but gets

other things spectacularly wrong, often confusing different fields

of science which happen to use similar terminology - it doesn't

actually understand the subject matter, it just outputs

plausible-sounding text which is occasionally correct. They love

to make stuff up, which is a huge issue when trying to use them

for serious research.

One hot application of AI's like these are programming

assistance, such as Microsoft's popular code copilot service. Some

of these coding-optimized models are small enough to run on my PC

- I typed in "Write a python program that plots the Mandelbrot Set

in a graphical window." into an LLM called Code LLaMa and it made

the following program...

import matplotlib.pyplot as plt

import numpy as np

def mandelbrot(x, y):

z = 0

for i in range(100):

z = z**2 + complex(x, y)

if abs(z) > 2:

return i

return -1

width = 800

height = 600

xmin = -2.5

xmax = 1.5

ymin = -1.5

ymax = 1.5

dx = (xmax - xmin) / width

dy = (ymax - ymin) / height

# Generate the Mandelbrot Set

data = np.zeros((width, height), dtype=np.uint8)

for i in range(width):

for j in range(height):

x = xmin + i * dx

y = ymin + j * dy

data[i, j] = mandelbrot(x, y)

# Plot the Mandelbrot Set

plt.imshow(data, cmap='Greys', vmin=0, vmax=100)

plt.axis('off')

plt.show()

...that when ran produced this in the console...

$ python3 aimp.py

aimp.py:27: DeprecationWarning: NumPy will stop allowing conversion of

out-of-bound Python integers to integer arrays. The conversion of -1 to

uint8 will fail in the future.

For the old behavior, usually:

np.array(value).astype(dtype)`

will give the desired result (the cast overflows).

data[i, j] = mandelbrot(x, y)

...and opened a window named "Figure 1" containing...

Not bad for a first try, normally it is drawn on its side, not

pointing up. Was not expecting it to use a matlib library for

graphics. But it seems to have gotten lucky with this example,

trying to get it to write other complete programs usually resulted

in non-functional code. It doesn't really understand the code, it

just outputs stuff that looks like code and sometimes works but

usually contains incorrect includes, syntax errors, unmatched

parenthesis etc. It works better when asked about short code

snippets with a limited number of functions, or command line

parameters.

There are unresolved questions and issues regarding LLMs. They

were trained on material from the internet and other sources, and

sometimes output copy-written material verbatim without

attribution. There is no known way to remove material already

encoded as weights in a neural network model (they don't really

know how the data maps to weights), best thing they can do in

response to take-down requests is to directly filter the output to

remove the material, or do additional training to try to make it

not talk about certain things which doesn't always work. These

models often require millions of dollars worth of compute to

encode the weights, retraining without the offending data is

usually not an option. When using for the purposes of code

creation (or any publication of the results) then the user needs

to do due diligence to ensure that the code or whatever it spit

out isn't copyrighted by someone. That little Mandelbrot program

is probably on the net somewhere in similar form. As output from

these things get posted to the internet, they begin to digest

their own (mis)information. A potential danger is someone might

believe what the AI says without fact-checking, this has happened

and sanctions imposed - don't submit AI-written documents to a

court, don't ask the AI if it wrote a particular passage, never

assume what an AI says is true. There are some people in society

that are vulnerable to plausible-sounding misinformation, this

happens all the time anyway but now we have another source of

junk.

As far as software for running models locally, I've had pretty

good luck with Ollama using a

simple HTML UI, and

the original llama.cpp with

its built-in web interface (examples/server). Keep in mind these

things involve huge downloads (even small models are more than 4

gigabytes), and things move fast - once it's working updating the

code usually means breaking existing models and having to download

new models. Ollama is written in go, didn't have to install much

to compile (basically golang then "go build ."), llama.cpp just

takes a simple make. Ollama is slower but was simpler to set up